How to Defend Against Account Opening Abuse

This is the third post in a three-part blog series about account opening abuse. Part one defined account opening abuse, discussed how it manifests differently across six industries, and explored how these accounts can be reused within broader fraud schemes. Part two explained how fraudsters execute account opening abuse. This blog post, the last in the series, will explain how to defend against and protect your organization from this type of attack.

Remind me: What’s account opening abuse?

Account opening abuse is a type of attack in which cybercriminals create fraudulent accounts or profiles to commit financial fraud, identity theft, or other illicit activities.

This type of fraud usually involves stealing or fabricating personal information with which to open new accounts on online platforms or at financial institutions. Bots and automated software programs are often used to expedite the process of fake account creation, which may overwhelm online platforms and make it difficult for security systems to detect and prevent such activity. Fake accounts are frequently an interim step toward promotion abuse or the theft of digital assets.

From fake reviews, spam, and phishing attempts to bogus social media profiles and forged bidding wars, the spectrum of fraudulent activity associated with account opening abuse is vast and ever-evolving.

Without a robust defense strategy in place, businesses can easily fall victim to operational disruptions and direct monetary losses, such as chargebacks, fraudulent transactions, and unauthorized withdrawals. Customer information can also be compromised, resulting in legal liabilities and regulatory fines.

Once businesses have recognized the severity of the associated risks, they can fortify their defenses with proactive measures, such as account protection, bot management, and advanced authentication methods, to stay ahead of account opening abuse.

3 layers of defense against account opening abuse

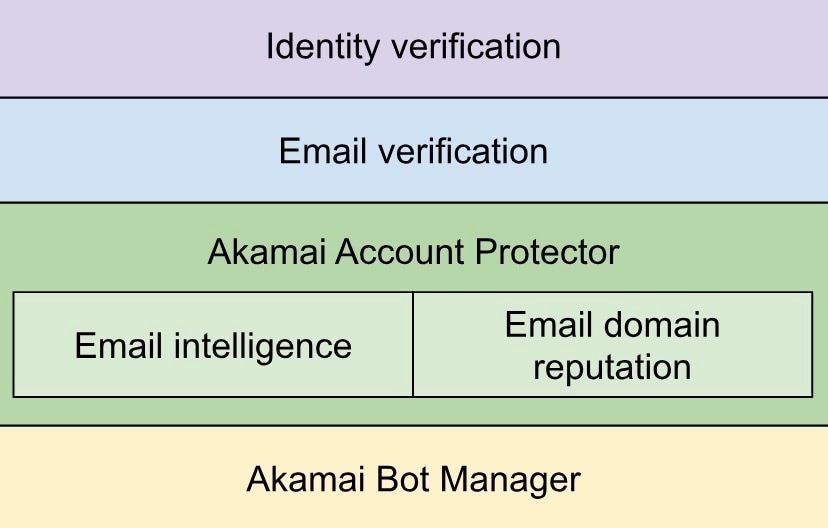

Defending successfully against account opening abuse requires several layers. The figure above shows the key strategies that can be implemented to safeguard against this evolving threat: bot management, account protection, and identity verification.

The technology stack that can protect against account opening abuse

Bot management

Botnets are commonly used in account opening abuse schemes. Therefore, it’s essential to use a product like a bot manager to detect bots that are creating new accounts in bulk. Fake account creation doesn’t always happen at a high volume, so you may have to fine-tune your bot detections to get the best results.

Account protection

Behavior anomaly detection uncovers abnormalities in traffic patterns during a given time window. For example, it can detect an excessive number of new accounts created from a single IP address, device, or network, or from a session that has only been active for a few seconds.
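
As a rough illustration of the time-window idea, the following Python sketch counts account creations per key (an IP address, device ID, or similar) in a sliding window and flags keys that exceed a threshold. The class name `SignupRateMonitor`, the 10-minute window, and the limit of five signups are assumptions made for this example, not values from any particular product.

```python
from collections import defaultdict, deque


class SignupRateMonitor:
    """Counts account creations per key (IP, device ID, ...) in a sliding window.

    Hypothetical helper for illustration; thresholds are arbitrary defaults.
    """

    def __init__(self, window_seconds=600, max_signups=5):
        self.window_seconds = window_seconds
        self.max_signups = max_signups
        self._events = defaultdict(deque)  # key -> timestamps of recent signups

    def record(self, key, timestamp):
        """Record a signup and return True if the key now exceeds the threshold."""
        events = self._events[key]
        events.append(timestamp)
        # Drop signups that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_signups


monitor = SignupRateMonitor(window_seconds=60, max_signups=3)
flags = [monitor.record("203.0.113.7", t) for t in range(5)]
print(flags)  # the fourth and fifth signups within the window are flagged
```

Because fake account creation does not always happen at high volume, a fixed threshold like this is only a starting point; in practice the window and limit would need tuning per site and per key type.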

When opening a new account on a website, the user must provide an email address along with a first and last name. On sites that require a subscription, users may also need to provide a credit card number and billing address. These data points can be used to create new behavioral detection methods.

Email intelligence

Email intelligence is designed to analyze the syntax of the email address and detect malicious patterns. This detection method evaluates the email handle, or local part, of the email address. Even when the email address is not the primary user authenticator, it’s still required to allow a company to communicate with customers.

Email addresses from account opening abuse campaigns most often show these two characteristics:

Special characters: The syntax of the email address may include special characters like dots, dashes, and plus signs. This technique is called email aliasing, tumbling, or plus addressing.

Unusual syntax: The email addresses may have an unusual syntax, with a combination of random letters and numbers. In some cases, the email handle may consist only of numbers. When only letters are used, the resulting handle is typically nonsensical and unpronounceable.
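
The two characteristics above can be turned into simple syntax signals. The sketch below is a minimal example under stated assumptions: the function name `local_part_signals`, the choice of features, and the 0.2 vowel-ratio cutoff for "unpronounceable" handles are all illustrative, and each signal is a weak hint to combine with other evidence rather than a verdict on its own.

```python
VOWELS = set("aeiouy")


def local_part_signals(email):
    """Return simple syntax signals for the handle (local part) of an email address."""
    local = email.rpartition("@")[0]
    letters = [c for c in local.lower() if c.isalpha()]
    digits = [c for c in local if c.isdigit()]
    # Share of letters that are vowels; very low values suggest random strings.
    vowel_ratio = sum(c in VOWELS for c in letters) / len(letters) if letters else 0.0
    return {
        "plus_alias": "+" in local,
        "separator_count": sum(local.count(ch) for ch in ".-+"),
        "digits_only": local.isdigit(),
        "digit_ratio": len(digits) / len(local) if local else 0.0,
        "low_vowel_ratio": bool(letters) and vowel_ratio < 0.2,  # assumed cutoff
    }


for address in ("j.o.h.n+promo1@example.com", "8842019375@example.com",
                "xkqzvbtr@example.com", "maria.lopez@example.com"):
    print(address, local_part_signals(address))
```

Plenty of legitimate users have dots, digits, or plus aliases in their addresses, so these features are better suited to scoring a batch of registrations than to blocking a single one.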

Email domain intelligence

This detection method consists of evaluating the activity pattern coming from individual email domains. The following two characteristics are common with most attack campaigns:

Email domain spoofing: As in phishing attempts, attackers may create email domains that look similar to the ones used by major email services like gmail.com or hotmail.com. For example, outlook.net and gnail.com look close enough to outlook.com and gmail.com, respectively, to be mistaken for the originals.

Excessive requests: It would be tedious, if not impossible, to get and maintain an exhaustive list of active email domains around the world. But machine learning algorithms can be trained to forecast the expected activity per domain based on historical data. Anything that deviates from the norm may be anomalous.
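
Both characteristics can be approximated with very little code. The sketch below makes two simplifying assumptions: lookalike domains are found by edit distance against a short, illustrative list of well-known providers, and the machine learning forecast described above is replaced by a plain z-score against the domain's own history. The names `lookalike_of` and `is_volume_anomalous`, the provider list, and the thresholds are hypothetical.

```python
from statistics import mean, stdev

WELL_KNOWN_DOMAINS = ("gmail.com", "hotmail.com", "outlook.com")  # illustrative list


def edit_distance(a, b):
    """Levenshtein distance computed with a rolling row."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                # deletion
                current[j - 1] + 1,             # insertion
                previous[j - 1] + (ca != cb),   # substitution
            ))
        previous = current
    return previous[-1]


def lookalike_of(domain, max_distance=1):
    """Return the well-known domain this one appears to imitate, or None."""
    domain = domain.lower()
    if domain in WELL_KNOWN_DOMAINS:
        return None
    name = domain.partition(".")[0]
    for known in WELL_KNOWN_DOMAINS:
        # Same name under another TLD, or a near-identical name.
        if edit_distance(name, known.partition(".")[0]) <= max_distance:
            return known
    return None


def is_volume_anomalous(history, observed, z_threshold=3.0):
    """Flag a signup count far above the domain's historical counts (z-score)."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return observed > baseline
    return (observed - baseline) / spread > z_threshold


print(lookalike_of("gnail.com"), lookalike_of("outlook.net"))
print(is_volume_anomalous([10, 12, 11, 9, 13], 40))
```

This comparison will also match legitimate domains whose names sit close to a major provider, including regional variants of the providers themselves, so a real deployment would need an allow-list alongside it.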

Detecting synthetic identities

Attackers generally combine data from various sources to create a new identity. Validating each piece of information provided during the registration process can help detect inconsistencies.

For example, most websites require the user to provide their first and last name. With synthetic identities, it’s common to find inconsistencies when validating this information against the email address.
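
One way to check a name against an email address is to look for traces of the name in the email handle. The following sketch is a deliberately simple heuristic, and the function name `name_matches_email` and its matching rules are assumptions for illustration. Many genuine users have handles unrelated to their names, so a mismatch is only a weak signal to weigh with the rest of the session.

```python
import re


def name_matches_email(first_name, last_name, email):
    """Return True/False if the handle does/doesn't reflect the stated name.

    Returns None when the inputs cannot be compared (for example, names
    without ASCII letters, which this sketch does not handle).
    """
    handle = re.sub(r"[^a-z]", "", email.rpartition("@")[0].lower())
    first = re.sub(r"[^a-z]", "", first_name.lower())
    last = re.sub(r"[^a-z]", "", last_name.lower())
    if not handle or not first or not last:
        return None
    # Full names plus common initial-based forms such as "mlopez" or "marial".
    candidates = {first, last, first[0] + last, first + last[0]}
    return any(len(c) >= 3 and c in handle for c in candidates)


print(name_matches_email("Maria", "Lopez", "maria.lopez84@example.com"))
print(name_matches_email("Maria", "Lopez", "xkqzvbtr77@example.com"))
```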

When applicable, a user’s phone number can provide insightful information such as the country or type of number (mobile, landline, or burner). This information can be assessed in the overall context of the session to improve risk assessment.

Finally, validating the billing address in the full context of all the other provided data and the typical site audience can also reveal discrepancies.

The account opening abuse lifecycle

Account opening abuse activity can be complex, and it often involves several specialized fraudsters — some of whom supply data to other fraudsters who then open new accounts. Once the accounts are created, fraudsters may leverage them to commit more advanced types of fraud (spam, fake reviews, promotion abuse, etc.).

Or the attacker may simply decide to let the accounts mature (an older account raises fewer suspicions than a newer one) before selling them on the dark web for others to use as part of an advanced fraud scheme. Bots can be used to open new accounts at scale.

Secure the future

Across industries and countries, companies need to protect themselves from account opening abuse. Akamai Account Protector helps teams detect and mitigate threats through well-tuned bot recognition, email intelligence, and behavioral account protection.

Talk to Akamai

If you’d like to speak with someone about account opening abuse, call your Akamai account representative or talk to an expert now.