Conquering Adversarial Bots and Humans to Prevent Account Takeovers

Executive summary

The year 2022 saw an 81% increase in bot traffic targeting financial institutions, with 41% of attackers engaging in account takeover activities.

Account takeover fraud is estimated to cost U.S. customers more than $11.4B annually, making it the most expensive type of identity fraud.

Threat actors have adopted advanced techniques, incorporating machine learning and true human interaction, to avoid being flagged by traditional bot detection tools.

Preventing modern account abuse requires more sophisticated detection techniques that analyze the entire context of a login attempt.

Introduction

Bot management is critically important for protecting against credential stuffing campaigns and preventing account takeover. Advanced bot detection tools collect user interaction signals and device telemetries, and use machine learning to determine if a request came from a human or a bot. But recent advances in artificial intelligence and machine learning have made it easier for threat actors to create incredibly realistic facsimiles of human interaction.

Many bot operators also collaborate with human attackers to further muddy the waters and circumvent bot detection. Thwarting the most sophisticated bot operators now requires learning not only how humans behave in general, but also how individual users behave.

Although a login endpoint may be a small attack surface, the blast radius can be enormous. As criminals scale their attacks using sophisticated botnets and new technologies, the risks of large-scale fraud, financial loss, and reputational damage are greatly increased.

The first attack

In Q4 2022, a North American bank began noticing increased bot activity from a highly distributed botnet, with consistent traffic directed at their online banking login endpoints. Akamai Bot Manager proved highly effective in stopping the attack, automatically mitigating large volumes of malicious requests based on behavioral anomalies.

The threat seemed to subside quickly, with a marked decrease in “high-risk” requests. But upon further inspection, there was a corresponding increase in “medium-risk” requests. An in-depth analysis of the traffic by Akamai security researchers revealed that while the volume of requests had declined, the attack had not gone away.

Improved telemetries and modified traffic patterns

Instead, the threat actors had improved their telemetries, creating very realistic simulations of human interaction. To further muddy the waters, they appeared to be mixing these seemingly human requests with genuine human login attempts. Additionally, they had modified their traffic pattern to be low (volume) and slow (velocity). These changes created much more believable request patterns, and resulted in lower risk scores.

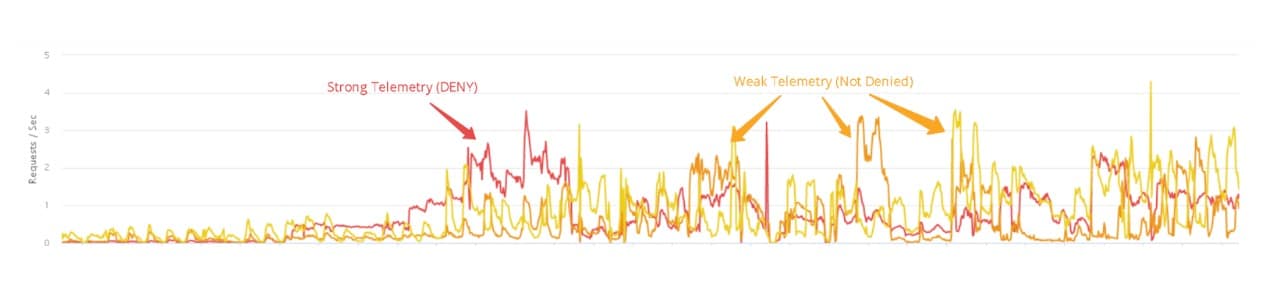

By reviewing the data in Figure 1, we can see the number of high-risk assessments (red) decreased as the number of medium-risk assessments (orange) increased.

Although the best efforts of the attackers were still flagged as suspicious, they were not obviously malicious. If the bank lowered its risk tolerance enough to block these requests, some legitimate users might be subjected to frustrating browser challenges, or might be denied outright. The bank opted to allow these requests, choosing to err on the side of user experience and accessibility.
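The trade-off described above can be sketched as a simple threshold policy. This is only an illustration: the 0-100 score range, the threshold values, and the names `Action` and `choose_action` are assumptions made for this example, not details of how Akamai Bot Manager is configured.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    CHALLENGE = "challenge"
    DENY = "deny"


def choose_action(risk_score: int, challenge_at: int = 50, deny_at: int = 90) -> Action:
    """Map a 0-100 risk score to a response using two hypothetical thresholds."""
    if not 0 <= risk_score <= 100:
        raise ValueError("risk_score must be between 0 and 100")
    if risk_score >= deny_at:
        return Action.DENY
    if risk_score >= challenge_at:
        return Action.CHALLENGE
    return Action.ALLOW


# The same "medium-risk" request under a tolerant and a strict policy.
medium_risk = 60
tolerant = choose_action(medium_risk, challenge_at=70, deny_at=90)
strict = choose_action(medium_risk, challenge_at=40, deny_at=55)
```

Under the tolerant thresholds the medium-risk request is allowed, while the strict thresholds deny it, along with any legitimate user who happens to score the same.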

More security with less inconvenience

The bank wanted to improve their security posture without inconveniencing customers, so they chose to supplement their bot detection capabilities with Akamai Account Protector, an “identity aware” endpoint protection solution. Account Protector monitors the interactions of each account user over time, creating a complete login history for every user, including the devices, locations, times, and networks from which their requests originate.

With a comprehensive understanding of when, where, and how a user typically logs in, even the most convincing automated requests stick out like a sore thumb. Account Protector also aggregates user profiles into population profiles, so even users who don’t have significant history with a particular login site can still be scored accurately.
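To make the idea of scoring a login against a user's history more concrete, here is a minimal sketch. It is not Account Protector's algorithm, which the source does not describe. The `Login` fields, the equal weighting of attributes, the `min_history` cutoff, and the population fallback are all assumptions chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Login:
    device: str    # hypothetical device fingerprint
    country: str
    network: str   # e.g., an AS number as a string
    hour: int      # hour of day, 0-23


@dataclass
class Profile:
    """Counts of attribute values seen in past logins (one user or a population)."""
    total: int = 0
    counts: dict = field(default_factory=lambda: {
        "device": Counter(), "country": Counter(),
        "network": Counter(), "hour": Counter()})

    def add(self, login: Login) -> None:
        self.total += 1
        for attr in self.counts:
            self.counts[attr][getattr(login, attr)] += 1

    def familiarity(self, login: Login) -> float:
        """Average share of past logins that match each attribute (0.0 to 1.0)."""
        if self.total == 0:
            return 0.0
        shares = [self.counts[a][getattr(login, a)] / self.total for a in self.counts]
        return sum(shares) / len(shares)


def anomaly_score(login: Login, user: Profile, population: Profile,
                  min_history: int = 5) -> float:
    """Return 0.0 (fully familiar) to 1.0 (never seen before).

    Assumption: users with fewer than `min_history` logins are scored
    against the aggregated population profile instead of their own.
    """
    profile = user if user.total >= min_history else population
    return 1.0 - profile.familiarity(login)


# Usage: a user who always logs in the same way, then a very different attempt.
usual = Login(device="laptop-1", country="US", network="AS64500", hour=9)
odd = Login(device="unknown", country="BR", network="AS64511", hour=3)
user, population = Profile(), Profile()
for _ in range(10):
    user.add(usual)
    population.add(usual)
familiar_score = anomaly_score(usual, user, population)
odd_score = anomaly_score(odd, user, population)
```

In this sketch, a request can look perfectly human in isolation and still score as anomalous, because none of its attributes match what the account holder has done before.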

An approach that works

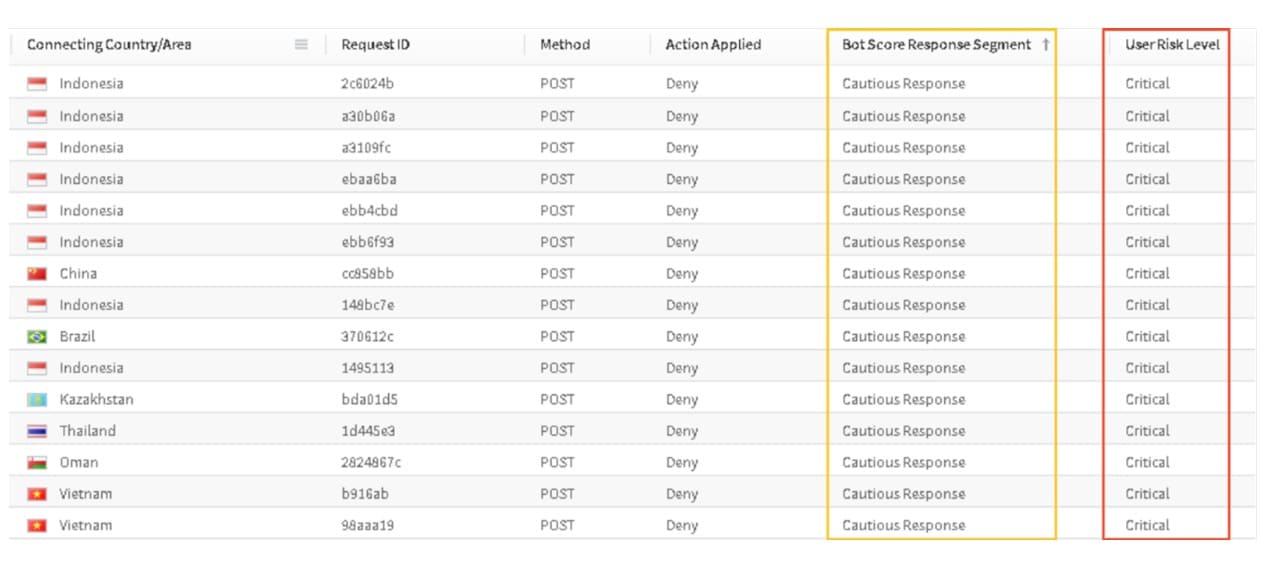

Sample logs proved that the new approach worked (Figure 2). Although detecting automated requests was less effective against these particular threat actors, comparing their malicious login requests with legitimate logins from the same accounts made their attempts at deception obvious. Their requests were blocked, keeping actual customers safer without negatively affecting their experience. Soon, the attack stopped entirely.

The second attack

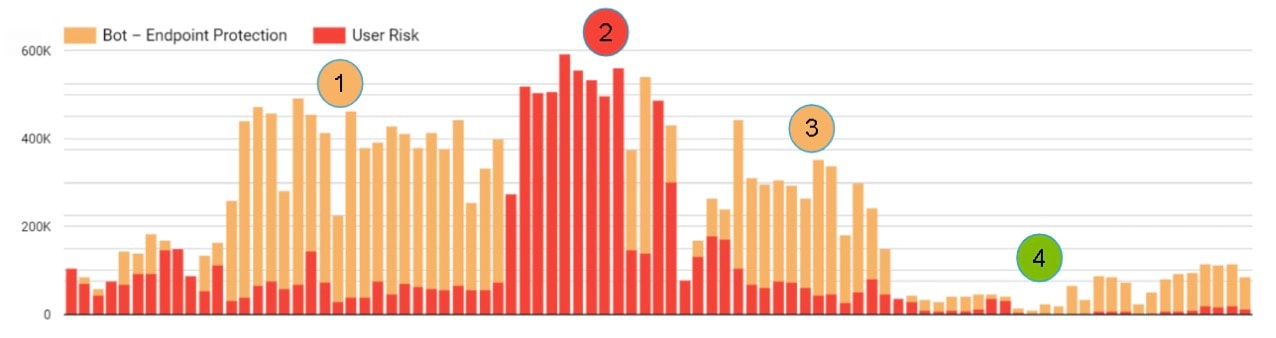

After a few weeks of quiet, the bank experienced a second credential stuffing campaign in Q1 2023. As the attackers modified their tactics, techniques, and procedures, the bank remained one step ahead the entire time, using the opportunity to refine and build on their new approach to account security. This second attack is illustrated in Figure 3 with numbers indicating each wave of the attack.

The four waves of the attack

Wave 1: A very large influx of automated requests was detected and blocked based entirely on behavioral anomalies. Though the telemetries were strong, the high volume of requests and concentration of source networks made the login attempts suspicious.

Wave 2: The threat actors attempted to bypass bot detection by more broadly distributing their identities (increasing device signatures, networks, and IPs). Although this made each request look less suspicious in isolation, the requests were clearly anomalous when compared with each user’s login history.

Traditional bot detection would struggle to maintain both a secure and user-friendly login experience here, but Account Protector allowed the bank to confidently block these requests without issue.

Wave 3: Realizing that their more distributed campaign was still failing, the attackers shifted tactics again to re-focus on telemetries. They were able to provide even more convincing telemetry data, likely by using machine learning techniques. But the collapsing of traffic back to a smaller set of networks made it easy to detect, even without user profiling.

Wave 4: Seeking to shut down the attack for good, the bank implemented a cryptographic (crypto) challenge response action at their login endpoint. When a request is suspicious but not obviously malicious enough to block outright, the crypto challenge requires the client device to solve a computationally expensive puzzle. It is similar in concept to a CAPTCHA (or other browser challenges), but it is solved entirely by the device rather than the user.

The crypto challenge adds little friction for legitimate browsers, but it drastically increases the costs and time requirements for bot operators, often frustrating them enough to give up or forcing them to operate at a loss. Unsurprisingly, reporting indicates that the second campaign largely stopped immediately after the crypto challenge went live.
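The source does not describe how the crypto challenge is built, so the following is only a generic hashcash-style proof-of-work sketch of the concept. The use of SHA-256, the 8-byte nonce, the difficulty value, and the function names are assumptions for illustration.

```python
import hashlib
import os


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def solve(challenge: bytes, difficulty: int) -> int:
    """Client side: brute-force a nonce. Expected work doubles with each difficulty bit."""
    nonce = 0
    while True:
        digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce
        nonce += 1


def verify(challenge: bytes, nonce: int, difficulty: int) -> bool:
    """Server side: a single hash is enough to check the client's work."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty


# Usage: the server issues a random challenge; the client returns a nonce.
challenge = os.urandom(16)
nonce = solve(challenge, difficulty=12)  # roughly 4,096 hashes on average
solved = verify(challenge, nonce, difficulty=12)
```

The asymmetry is the point of the design: a single legitimate login pays the cost once and barely notices, while an operator sending a large volume of requests pays it on every attempt.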

Evasion attempts

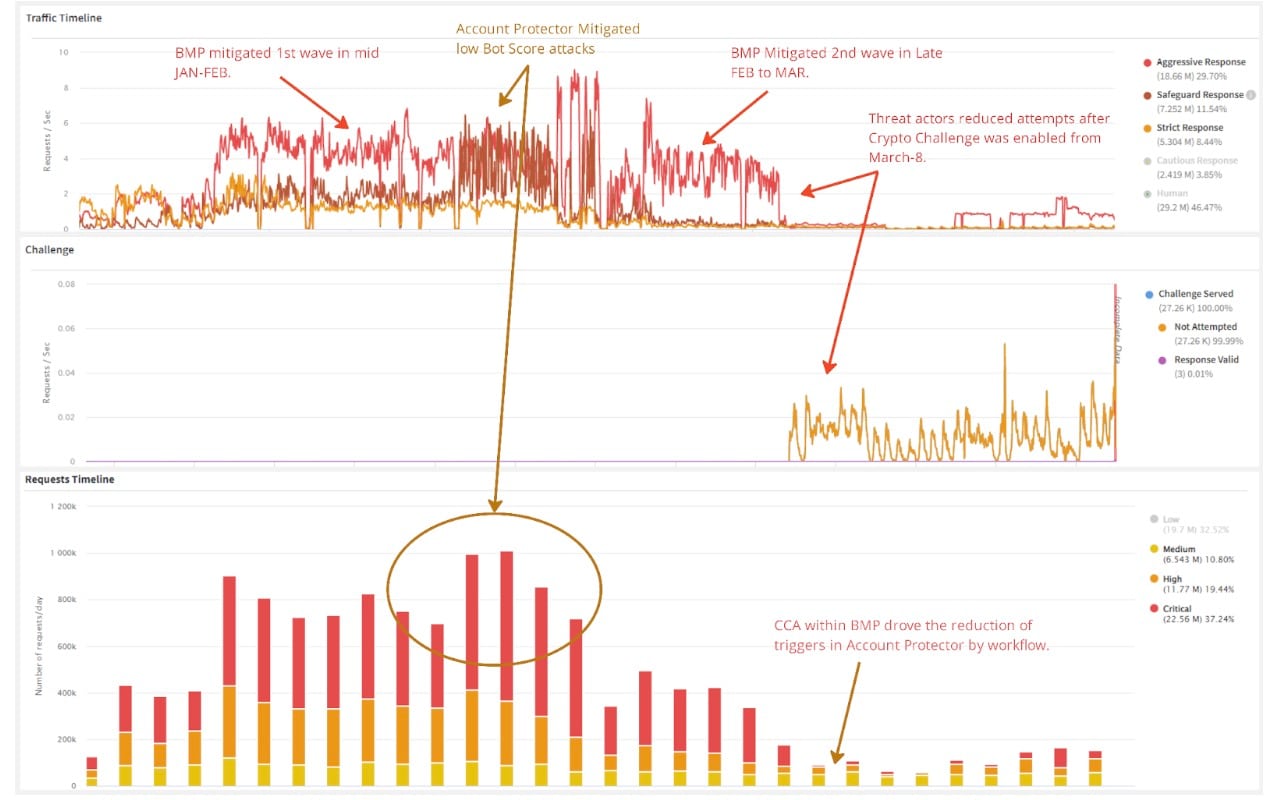

Figure 4 shows a detailed timeline of mitigation during this attack campaign, as threat actors attempted to evade the blocks by pivoting behavior telemetries and identities.

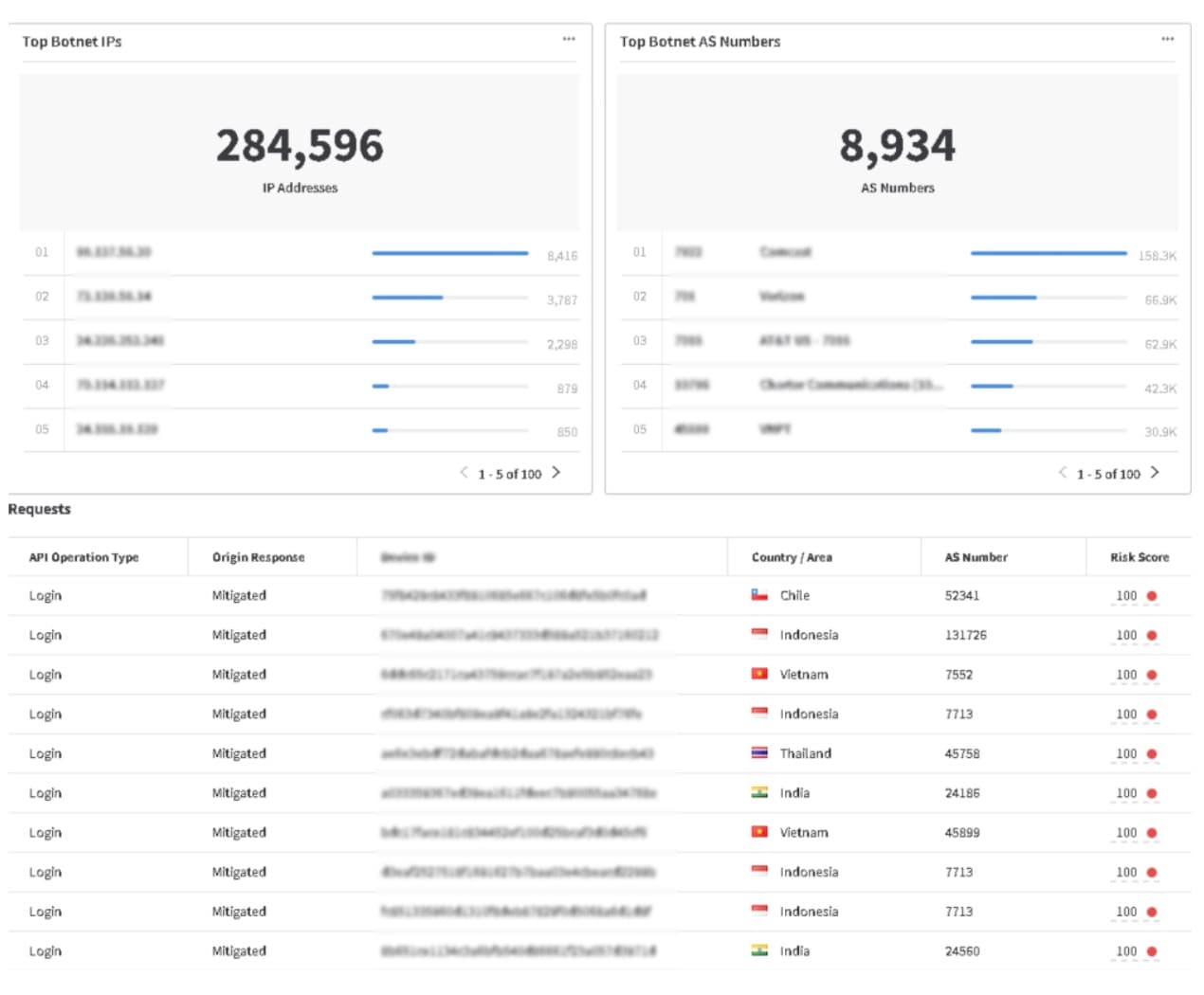

As evidenced by the data, the criminals were very persistent, willing to change their tactics quickly and repeatedly after facing headwinds. Figure 5 helps to illustrate the scale of one of the contributing threat identities during this campaign. The botnet is highly distributed, with 280,000 IP addresses across approximately 9,000 autonomous system (AS) numbers in many countries and hundreds of unique connecting “devices.”

Conclusion

For the duration of the two bot campaigns, there were never any significant events or representative patterns that could adequately describe the characteristics of the malicious traffic. In short, the automated requests were almost entirely indistinguishable from legitimate traffic.

Sophisticated bot attacks like this evolve quickly: motivated and tech-savvy criminals have adopted CI/CD practices and employed machine learning to drastically improve their telemetries in a very short time span.

Protecting users requires security professionals to quickly shift their tactics as well. Distinguishing between humans and bots is not always feasible. Instead, it is necessary to take a more holistic approach to account security, looking not just at individual requests, but also at each user’s login history.