Render Pages Faster for Optimized Browsing, Part 5 of 5

Web browsers handle nearly every page task on the main thread. Script execution, user interactions, and the rendering process described below all occupy the main thread. This means that, for these tasks, browsers can only do one thing at a time. If too much time is spent on script execution and rendering, then user interactions can only be handled after the main thread frees up, and if this takes too long, users may become impatient.

The deciding factor becomes when the main thread is first freed up. This could be either before or after the point when all HTML assets become available. For instance, when an image takes a long time to load, the main thread idles for a while. The browser can then decide to start the rendering process. It may need to re-render the page once the image arrives at an indeterminate time, but showing something is always better than showing nothing.

This final article in our five-part series examines what happens once the browser has downloaded usable assets and it's time to display something on-screen.

Rendering from start and executing events

At render start, two JavaScript events may fire, and both are important to web developers. DOMContentLoaded is the first: it fires when the HTML has been completely parsed and all deferred scripts (those with the defer attribute) have downloaded and executed. It does not wait for scripts with the async attribute, and other assets such as images may still be pending.

The load event fires after the DOMContentLoaded event, when all assets present in the HTML — including images — have loaded. The load time metric factors in here because it measures the time from navigation start to the load event.

Typically, web developers run their main scripts when one of these events fires, usually DOMContentLoaded. This means more assets may load afterward. It's only once these assets have downloaded that the full load time metric comes into play.
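As a minimal sketch of how a script might hook into these two events, the function below records when each one fires. The name trackLifecycle is an illustrative assumption, not something from this series; only addEventListener and the two event names come from the platform.

```javascript
// Records how long after setup each lifecycle event fires (in milliseconds).
// `doc` and `win` are any EventTargets; in a browser, pass document and window.
function trackLifecycle(doc, win) {
  const start = Date.now();
  const timings = {};

  // Fires once the HTML is parsed and deferred scripts have run.
  doc.addEventListener('DOMContentLoaded', () => {
    timings.domContentLoaded = Date.now() - start;
  });

  // Fires later, once images and other assets have loaded too.
  win.addEventListener('load', () => {
    timings.load = Date.now() - start;
  });

  return timings;
}

// Browser usage (commented out so the sketch also runs outside a browser):
// const timings = trackLifecycle(document, window);
```

Passing the event targets in as parameters is only a convenience for trying the sketch outside a page; a real script would usually attach the listeners to document and window directly.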

Thus, the first rendering process is not necessarily the final one. In fact, all following steps are repeated every time any new data arrives — including HTML, CSS, JavaScript, images, video, or SVG.

For example, if a user does something, they want to see the result of their action on screen, which requires re-rendering. But this is a very costly process, so performance engineering partially involves minimizing re-renders.

Constructing object trees to enable rendering

Before the rendering process starts, browsers construct several object trees representing the HTML page to CSS and JavaScript. The most important is the Document Object Model (DOM), which describes all HTML page elements and their relations. Take this code example:

    <section>
        <div>
            <h1>Order 1</h1>
            <p>Order description</p>
            <button>Change order</button>
        </div>
        <div>
            <h1>Order 2</h1>
            <p>Order description</p>
            <button>Change order</button>
        </div>
    </section>

Each HTML element becomes a DOM node specifying its relations to other DOM nodes. For instance, the first <div> tracks how its parent is the <section>, and that it contains an <h1>, a <p>, and a <button> as its children.

This DOM is available to JavaScript, and allows web developers to find and change all DOM nodes. For instance, they can access the <div> and remove the <button> child node. The model changes, and the button is removed from the screen during rendering since it's no longer present in the model.
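The change described above could be sketched as follows. The function name removeFirstOrderButton is hypothetical, and the selector assumes the sample markup shown earlier; querySelector and remove are standard DOM methods.

```javascript
// Removes the <button> from the first <div> under `root` and reports success.
// In a browser, `root` would be document; any object with querySelector works.
function removeFirstOrderButton(root) {
  const firstDiv = root.querySelector('section > div');
  if (!firstDiv) return false;

  const button = firstDiv.querySelector('button');
  if (!button) return false;

  // The node leaves the DOM now; it disappears on-screen at the next render.
  button.remove();
  return true;
}

// Browser usage: removeFirstOrderButton(document);
```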

Understanding element trees

Browsers create several such trees. The rendering tree contains all HTML elements browsers need for rendering. It works like the DOM, except that hidden elements are left out.

    section h1 {
        display: none;
    }

With this rule, every <h1> inside a <section> is hidden, so the rendering tree matches the DOM tree save for the <h1> elements, which don’t need to be rendered. As a later section of this article shows, this tree is actually created slightly later, after the styling phase.

Browsers then create the Accessibility Object Model (AOM) that’s used by assistive technology like screen readers that read web pages aloud to visually impaired people. Assistive technology must understand what to read and how, and AOMs offer them a page model to work with.

For the above code sample, the AOM should tell a screen reader to read aloud the button text 'Change order' while making it clear that it’s a button the user can activate. The AOM therefore needs slightly different information than the DOM does.

Finally, browsers create the CSS Object Model (CSSOM) that models the complete page CSS while offering web developers the opportunity to change it. With the CSSOM, web developers can access, change, and remove the section <h1> style rule.
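A minimal sketch of removing a rule through the CSSOM might look like this. The helper name removeRule is an assumption for illustration; cssRules, selectorText, and deleteRule are the standard CSSOM members it relies on.

```javascript
// Deletes every style rule in `sheet` whose selector text equals `selector`.
// In a browser, `sheet` would be an entry of document.styleSheets.
function removeRule(sheet, selector) {
  let removed = 0;
  // Walk backwards so deleting a rule doesn't shift indexes still to visit.
  for (let i = sheet.cssRules.length - 1; i >= 0; i--) {
    if (sheet.cssRules[i].selectorText === selector) {
      sheet.deleteRule(i);
      removed++;
    }
  }
  return removed;
}

// Browser usage: removeRule(document.styleSheets[0], 'section h1');
```

After the rule is deleted, the headings are no longer hidden, so the next render includes them again.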

Unpacking the rendering process

At this point, browsers can begin the rendering process. All instructions are now available, and browsers must tell the operating system which color to assign to each pixel. To do so, they must perform lengthy and complex calculations.

Users enjoy seeing things on-screen. Browsers attempt to serve them by rendering as soon as possible. But if a page element changes, browsers must repeat all the necessary phases.

Since rendering is the most costly browser operation in terms of CPU cycles and time, frequent re-renderings are a common source of performance problems. To avoid these problems, you need to understand the rendering process.

Rendering consists of four phases: styling, layout, paint, and compositing. The layout phase is by far the most important for enabling good performance.

Styling HTML elements

Now, browsers can access the DOM tree that specifies all of a page’s HTML elements. They can also access all page styles. Browsers proceed to match each style to each applicable HTML element.

In principle, this is a straightforward process. Browsers go through all CSS selectors to determine matching DOM elements. They note these styles, but don’t yet attempt to apply them; they must first collect all the styles that will apply to each element.

Take this CSS rule, for example:

    div.specialMessage p {
        color: red;
        border: 1px solid red;
    }

Browsers read selectors from right to left. So, when applying the rule from the above example to the document, browsers locate all <p> tags, keep only the paragraphs inside a <div class="specialMessage">, and then add the styles to each of those paragraphs. (There are several optimization opportunities here that this article ignores.)
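To make the right-to-left idea concrete, here is a toy matcher for descendant selectors such as div.specialMessage p. It is a sketch under strong assumptions: nodes are plain objects with tag, classes, and parent fields, only tag names, classes, and the descendant combinator are supported, and real browser engines work very differently in detail.

```javascript
// Checks one simple selector part such as 'p' or 'div.specialMessage'.
function matchesCompound(node, compound) {
  const [tag, ...classes] = compound.split('.');
  if (tag && node.tag !== tag) return false;
  return classes.every((name) => node.classes.includes(name));
}

// Matches a descendant selector against `node`, starting from the rightmost part.
function matchesSelector(node, selector) {
  const parts = selector.trim().split(/\s+/);
  let index = parts.length - 1;

  // Step 1: the node itself must match the rightmost part.
  if (!matchesCompound(node, parts[index])) return false;
  index--;

  // Step 2: walk up the ancestors, consuming the remaining parts right to left.
  let ancestor = node.parent;
  while (index >= 0 && ancestor) {
    if (matchesCompound(ancestor, parts[index])) index--;
    ancestor = ancestor.parent;
  }
  return index < 0;
}

const message = { tag: 'div', classes: ['specialMessage'], parent: null };
const inside = { tag: 'p', classes: [], parent: message };
const outside = { tag: 'p', classes: [], parent: null };
console.log(matchesSelector(inside, 'div.specialMessage p'));  // true
console.log(matchesSelector(outside, 'div.specialMessage p')); // false
```

Note that both paragraphs pass step 1, which is why the number of elements matching the rightmost part affects how much work the matcher does.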

Improving styling performance

Since browsers begin on the right side and select all the document’s paragraphs, styling performance can be slightly improved by making the rightmost part of the selector more specific. For instance:

    div p.specialMessage {
        color: red;
        border: 1px solid red;
    }

Now, browsers start with all <p class="specialMessage"> elements — and there are likely fewer of them, thus slightly improving page performance.

Still, selector performance isn’t a particularly serious issue. A page must contain thousands of HTML elements and hundreds of CSS rules before it becomes necessary to optimize CSS selectors. The average web developer should be more concerned with other, more pressing performance issues.

Once all styling information is available, browsers know which elements are hidden and visible. At this point, they can create the previously mentioned rendering tree, which serves as the input for the next phase.

Laying out a site’s elements

This next step is the most involved one. Browsers know every style for every element, and can now calculate the dimensions and positions of those elements. Individual lines of text are also laid out, meaning browsers must decide where to break off lines. This depends on the amount of text and the element's width.

By default, any HTML element, such as a paragraph, will occupy the smallest necessary height, which is usually determined by the text or image it contains. In addition, any HTML block elements — like paragraphs, lists, and sections — are positioned below the previous block.

Therefore, the placement of an element X will depend on the dimensions of all elements before it. Browsers must calculate all these dimensions before they can place element X at its correct coordinates.
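The dependency between block positions can be illustrated with a toy model. This is only a sketch: the layoutBlocks function and its input format are assumptions made for illustration, heights are given up front, and real layout also has to compute widths, line breaks, margins, and much more.

```javascript
// Toy block layout: stacks blocks vertically and returns each block's y offset.
// Heights are given up front here; a real browser has to compute them first.
function layoutBlocks(blocks) {
  let y = 0;
  return blocks
    .filter((block) => !block.hidden) // hidden elements aren't in the rendering tree
    .map((block) => {
      const placed = { name: block.name, y, height: block.height };
      y += block.height; // every later block is pushed down by this one
      return placed;
    });
}

const before = layoutBlocks([
  { name: 'intro', height: 20 },
  { name: 'orders', height: 100 },
  { name: 'footer', height: 30 },
]);
// The intro wraps onto a second line, so its height doubles.
const after = layoutBlocks([
  { name: 'intro', height: 40 },
  { name: 'orders', height: 100 },
  { name: 'footer', height: 30 },
]);
console.log(before.map((block) => block.y)); // [ 0, 20, 120 ]
console.log(after.map((block) => block.y));  // [ 0, 40, 140 ]
```

A change to the first block alone moves every block after it, which is why even a small change can require laying out much of the page again.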

To make these calculations, browsers must check every DOM node and line of text in the document. This takes time — especially if the page contains thousands of DOM nodes. More important, it occupies the main thread, so reacting to user inputs will take longer.

Re-rendering to reflect page changes

Unfortunately, browsers typically perform the rendering process several times. For instance, the initial rendering of the page may begin when some images have yet to arrive. When they do arrive, their dimensions become known and the page is re-rendered.

The Cumulative Layout Shift (CLS) metric operates in this context (Table 1). After re-rendering, each HTML element below the image may change position. This sudden shift can be jarring to a user who's already reading text. CLS attempts to quantify this shift.

| Metric | Definition | Other name | Useful for |
| --- | --- | --- | --- |
| Cumulative Layout Shift | The amount that a web page’s assets shift throughout its lifecycle. | None | Understanding the comfort of a user’s reading experience |

Table 1: A definition of Cumulative Layout Shift and its main function
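As a rough sketch of how layout shifts can be tallied, the function below adds up the scores of individual shifts. It is deliberately simplified: the CLS value reported by browser tooling groups shifts into session windows and reports the largest window, which this sketch leaves out. The function name sumLayoutShifts is an assumption; value and hadRecentInput are fields of the browser's layout-shift performance entries.

```javascript
// Adds up layout-shift scores, skipping shifts that follow recent user input.
// Entries mirror the `value` and `hadRecentInput` fields of layout-shift entries.
function sumLayoutShifts(entries) {
  return entries
    .filter((entry) => !entry.hadRecentInput)
    .reduce((total, entry) => total + entry.value, 0);
}

// Browser usage, where the 'layout-shift' entry type is supported.
// Each callback logs the total for the batch of entries it receives:
// new PerformanceObserver((list) => {
//   console.log(sumLayoutShifts(list.getEntries()));
// }).observe({ type: 'layout-shift', buffered: true });
```

Shifts that directly follow user input are skipped because the user expects the page to change in response to their own action.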

As discussed in the second article in this series, specifying image and video widths and heights in the HTML can help alleviate or even remove some problems:

    <img src="heroImage.jpg" class="heroImage" width="750" height="500">

Repeating the re-rendering process

If scripts add, remove, or even slightly change DOM nodes or styles, the rendering must also be repeated:

    <p>Welcome to your personal page for Widgets Acme!
    You can create and change your orders here!
    You are currently <span id="logInStatus">not logged in</span>.</p>

    <section id="orderList">
        <div>
            <h1>Order 1</h1>
            <p>Order description</p>
            <button>Change order</button>
        </div>
        <div>
            <h1>Order 2</h1>
            <p>Order description</p>
            <button>Change order</button>
        </div>
    </section>

Assume the user logs in and this simple script runs:

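    // Assumes logIn() passes the logged-in user to its callback
    const loginElement = document.getElementById('logInStatus');
    logIn(credentials, (user) => {
        loginElement.innerHTML = user.name;
    });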

The text changed, so browsers must re-render the entire page. The new text might cause the first paragraph to wrap to a new line, which would increase its height. Then, all subsequent elements would be pushed downward, so their page positions would need to be recalculated. And browsers only find out whether these events actually happened after all calculations have already been made.

Suppose the script also inserts the user's order history into HTML:

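    // loginElement is the <span id="logInStatus">; logIn() is assumed
    // to pass the logged-in user to its callback
    logIn(credentials, (user) => {
        loginElement.innerHTML = user.name;
        orderList.innerHTML = orders.orderList.toHTML();
    });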

The script now makes two DOM changes, but they are only applied after the script ends — causing the page to be re-rendered. There are two reasons for this:

- While the script runs, the main thread is unavailable, and rendering cannot begin.
- That same script might make additional DOM changes.

It's far more efficient to apply these changes once than it is to re-render for each individual DOM change.

Awaiting new data before re-rendering

Now assume that the order history comes from a separate system that may respond at a different time than the order system.

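    // logIn() is assumed to pass the logged-in user to its callback
    logIn(credentials, async (user) => {
        loginElement.innerHTML = user.name;
        // Awaiting frees the main thread, so the browser can re-render here
        const response = await fetch('/orders');
        const orders = await response.json();
        // toHTML() is a placeholder for whatever builds the order markup
        orderList.innerHTML = orders.orderList.toHTML();
    });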

This simple implementation may cause problems. The await keyword tells the function to pause until the new data arrives. Because the wait is asynchronous, it frees up the main thread, so browsers apply the login name and re-render the page. Once the order data arrives, the rest of the script executes, browsers apply the order list, and the page re-renders a second time. This is redundant and wasteful.

Web developers should wait for both batches of data to arrive before making any changes. This can be done either on the server side or the client side. For example, you can insert an intermediate script that collects both login and order data and changes the DOM only when both batches have arrived.
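On the client side, such an intermediate script could be sketched with Promise.all. The names logInAndRender, fetchUser, fetchOrders, and render are all hypothetical; they stand in for whatever the page uses to log in, load orders, and update the DOM.

```javascript
// Waits for both data sources, then applies all DOM changes in one go.
// fetchUser, fetchOrders, and render are hypothetical callbacks supplied by the page.
async function logInAndRender({ fetchUser, fetchOrders, render }) {
  // Both requests run in parallel; nothing touches the DOM until both resolve.
  const [user, orders] = await Promise.all([fetchUser(), fetchOrders()]);

  // All DOM writes happen inside one synchronous call, so one re-render follows.
  render(user, orders);
}
```

In the article's example, render would set the login name and the order list in the same call. The trade-off is that the login name appears slightly later than it otherwise would, in exchange for one re-render instead of two.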

Forcing the re-rendering process

Methods or properties that gather layout information are at even higher risk than the previously mentioned order history example. One such property is element.offsetWidth, which returns the actual current width of a DOM element. Take a script like this one:

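    // logIn() is assumed to pass the logged-in user to its callback
    logIn(credentials, (user) => {
        // loginElement is the <span id="logInStatus"> from the markup above
        const loginParagraph = loginElement.parentNode;
        loginElement.innerHTML = user.name;
        // Reading offsetWidth right after a DOM write forces a layout pass
        if (loginParagraph.offsetWidth > 500) {
            document.body.classList.add('longParagraph');
        }
        orderList.innerHTML = orders.orderList.toHTML();
    });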

The script changes some text in the DOM while occupying the main thread. Browsers don’t yet re-render because the main thread is currently occupied and the script may make more DOM changes.

The script then requests the login paragraph’s width, and this is where things start to go wrong. Browsers could easily provide the old paragraph width stored in memory. But since the paragraph's contents were just changed, that old width may be incorrect. Browsers then have no choice but to pause script execution, recalculate the page's styles and layout on the spot, and only then resume the script and return the new paragraph width.

Now, the script execution resumes. It makes more DOM changes, and the page re-renders a second time once the script finishes executing. Thus, using the element.offsetWidth property here makes browsers lay out the entire page not once but twice. For very large pages, this can take considerable time and make users impatient.

For this reason, it's best to avoid using any method or property that forces a re-render.
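When layout values are genuinely needed, one common way to limit the damage is to do all layout reads first and all DOM writes afterward. The sketch below assumes a hypothetical task (setting each element's height to half its width); the function name is illustrative, while offsetWidth and style are standard element properties.

```javascript
// Sets each element's height to half its width without interleaving reads and writes.
// `elements` are DOM elements (or any objects with offsetWidth and style).
function setHeightsFromWidths(elements) {
  // Phase 1: read every layout value first. At most one layout pass is forced.
  const widths = elements.map((element) => element.offsetWidth);

  // Phase 2: write. No layout reads follow, so the browser lays out once afterward.
  elements.forEach((element, index) => {
    element.style.height = `${widths[index] / 2}px`;
  });
}

// Browser usage: setHeightsFromWidths([...document.querySelectorAll('section > div')]);
```

Reading and writing in the same loop iteration would instead force a fresh layout pass for every element after the first.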

Painting a site with content

Once browsers determine the dimensions and positions of all HTML elements, they can begin generating text and images. Content generation can require several steps, but painting one “region” (a DOM element or group of elements) doesn’t influence other regions — making this a wholly autonomous process.

So, changes that don’t alter any element's dimensions or position, such as a new color or background image, only require a re-paint, and not a full re-layout. CSS transforms and opacity changes are cheaper still, because browsers can usually handle them in the compositing phase.

It's possible to define your own regions (also called layers) in the page, thus speeding up the re-paint process considerably. This article on layers explains how to do so, but it requires a solid understanding of CSS.

As discussed in the second article in this series, the First Contentful Paint (FCP; Table 2) and Largest Contentful Paint (LCP; Table 3) metrics are recorded when the first and largest visible parts of the page are painted. In actuality, they are recorded after the final compositing phase completes, but the paint phase is the one referred to in both metrics’ names.

Painting something on-screen enables users to see it, which resets their impatience timer to close to zero. In fact, LCP may be reported several times as larger and larger regions get painted.

| Metric | Definition | Other names | Useful for |
| --- | --- | --- | --- |
| First Contentful Paint | Time from the first byte to the first bit of fully rendered content | First Paint, First Meaningful Paint | Resetting the user's impatience timer |

Table 2: A definition of First Contentful Paint, its function, and other names

| Metric | Definition | Other names | Useful for |
| --- | --- | --- | --- |
| Largest Contentful Paint | The time between the first byte and the render time of the viewport's largest visible image or text block | Time to Visually Ready, Visually Complete, Front-End Time | Core Web Vitals compliance and measuring site usability speed |

Table 3: A definition of Largest Contentful Paint, its function, and other names

Combining regions to a single composite

The final phase is compositing, where all individual painted regions are combined, and any overlapping regions receive their final styles. For instance, some regions may have a CSS opacity of less than 1, so they’re partly translucent. If such a region is positioned on top of another region, the contents of the bottom region should be dimly visible in the top one. This effect is achieved during the compositing phase.

Changes that only require a re-composite are the cheapest from a performance perspective, since browsers can skip the layout and paint phases; this article on browser painting and considerations for web performance and this article on front-end web performance discuss strategies to help you make those kinds of changes.

Essentially, changes to an element’s transform, opacity, and filter properties are very fast because they only require re-compositing. If a website can achieve its user experience goals by solely editing these properties, the performance gains are well worth the extra development time.
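As a small sketch of what editing only these properties can look like, the helpers below move and fade an element through transform and opacity rather than through top, left, or visibility-related layout properties. The helper names slideTo and fadeTo are assumptions for illustration.

```javascript
// Moves an element with a transform instead of changing top/left,
// so the change can typically skip the layout and paint phases.
// `element` is any object with a `style` property, such as a DOM element.
function slideTo(element, x, y) {
  element.style.transform = `translate(${x}px, ${y}px)`;
}

// Fades an element by changing only its opacity (0 = invisible, 1 = opaque).
function fadeTo(element, opacity) {
  element.style.opacity = String(opacity);
}

// Browser usage:
// const panel = document.querySelector('#orderList');
// slideTo(panel, 0, 40);
// fadeTo(panel, 0.5);
```

The element's original layout box stays where it was, so neighboring elements don't move, which is exactly why the layout phase can be skipped.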

Enabling interactivity

Once all four rendering phases have completed, the users finally see something on-screen. This doesn’t necessarily mean they can interact with the site, even if they expect to have that ability. To handle interactions like running a script when the user clicks a button, the browser’s main thread must be free and ready to execute that script.

Still, the main thread may not be free. As previously mentioned, it's common for large scripts to execute when the load or DOMContentLoaded events fire. These events may occur after final rendering, or may have fired earlier.

Regardless, initialization of the script must wait to execute until rendering finishes and the main thread frees up. That may mean that once rendering finishes and the page appears ready to use, the main thread will still be busy, so browsers cannot react to user input. If this lasts too long — for example, when a button is visible but does nothing when clicked — users get impatient.
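The effect of a busy main thread can be reproduced with a small experiment. In this sketch, a zero-delay timer stands in for a click handler waiting to run, and a busy loop stands in for a large initialization script; the function name measureCallbackDelay is an assumption.

```javascript
// Measures how long a queued callback waits while synchronous work hogs the thread.
function measureCallbackDelay(busyMs) {
  return new Promise((resolve) => {
    const queuedAt = Date.now();
    // Stands in for a click handler waiting in the event queue.
    setTimeout(() => resolve(Date.now() - queuedAt), 0);

    // Stands in for a large initialization script: nothing else can run meanwhile.
    const busyUntil = Date.now() + busyMs;
    while (Date.now() < busyUntil) {
      // Busy-wait on purpose.
    }
  });
}

measureCallbackDelay(100).then((delay) => {
  console.log(`callback ran after ${delay} ms`);
});
```

Although the timer asks to run immediately, it cannot start until the busy loop ends, so the measured delay is at least as long as the blocking work.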

Measuring main thread access times

Even if a user interaction successfully runs a script, the main thread is occupied, which can delay other interactions and script initializations. At the same time, any scripts that change the styles or the DOM may trigger a re-render, thus occupying the main thread even longer.

This delayed main thread state continues until either the large scripts finish executing or the user leaves the page. The user initiates an interaction, browsers typically react by running a script that occupies the main thread, and the page re-renders to display the result. Browsers then wait for the next user interaction, and the process repeats.

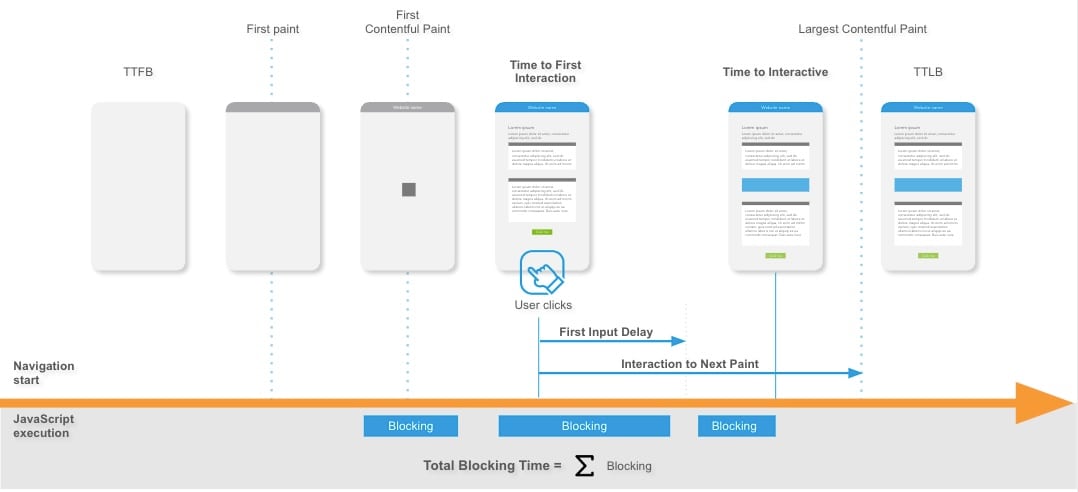

It's against this backdrop that several metrics come into play (see the Figure), including:

- Total Blocking Time (TBT). This measures the total time that script execution and the resulting re-renders block the main thread. It begins measuring at FCP and continues to TTI.
- Time to Interactive (TTI). This occurs when the main thread frees up for the first time, so the user can successfully interact with the site.
- Time to First Interaction (TTFI). This occurs when the user first attempts to interact with a page.
- First Input Delay (FID). This is the delay between the first user interaction and the start of the script. If the main thread is occupied when the first interaction takes place, it may take a while before the script runs.
- Interaction to Next Paint (INP). This measures the time from any interaction to the resulting re-render, making it a more complete measurement than FID. It started out as an experimental metric and replaced FID as a Core Web Vital in March 2024.

Figure. Example of a timeline that shows a browser's reaction when running a script
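Two of the metrics above reduce to simple arithmetic, sketched below. The sketch assumes the commonly used definition of blocking time, in which only the part of a main-thread task beyond 50 ms counts; that threshold comes from the usual TBT definition rather than from this article. The function names and the sample task list are illustrative, and tasks that straddle FCP or TTI are not clipped here.

```javascript
// Only the portion of a main-thread task beyond 50 ms counts as blocking time.
const LONG_TASK_THRESHOLD_MS = 50;

// tasks: [{ start, duration }] in ms; fcp and tti: timestamps in ms.
function totalBlockingTime(tasks, fcp, tti) {
  return tasks
    .filter((task) => task.start >= fcp && task.start < tti)
    .reduce(
      (total, task) => total + Math.max(0, task.duration - LONG_TASK_THRESHOLD_MS),
      0,
    );
}

// entry mirrors the startTime and processingStart fields of a 'first-input' entry.
function firstInputDelay(entry) {
  return entry.processingStart - entry.startTime;
}

const tasks = [
  { start: 1200, duration: 250 }, // blocks for 200 ms
  { start: 1600, duration: 40 },  // under the threshold: 0 ms
  { start: 2000, duration: 90 },  // blocks for 40 ms
];
console.log(totalBlockingTime(tasks, 1000, 3000)); // 240
console.log(firstInputDelay({ startTime: 2010, processingStart: 2080 })); // 70
```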

Optimize your website with knowledge

This final article in our web performance series examined every task browsers do — from the moment they receive a page’s assets to when that page is displayed on-screen and the main thread is freed up to enable user interaction. It also revealed what aspects of this process are measured by particular performance metrics.

The entire five-part series gave a basic introduction to web performance goals, metrics and things they measure, and various tasks that browsers perform to display a page.

Now, web developers should have enough information to make their first foray into web performance research. They should understand which aspects of the downloading and rendering processes are most likely to have performance issues.

Finally, web developers should be able to use the three Core Web Vitals metrics (LCP, CLS, and INP, which replaced FID in March 2024) to better understand their site’s performance. Of course, each site requires its own approach, so web developers should also be capable of determining other metrics for gaining even deeper insights into site performance problems.

Learn more

Now that you’ve learned all there is to know about optimizing your website’s rendering process and overall performance, head over to the Akamai TechDocs documentation site to discover how Akamai can help you to build faster, more engaging web experiences.