Deliver Faster Downloads for Better Browsing, Part 4 of 5

The previous three articles in Akamai’s web performance series unpacked individual web performance metrics, and the final two articles in this series will explain how browsers work. To better understand how those performance metrics work, you’ll need to know plenty of specifics on how web browsers operate.

As mentioned in the first article, there are two main causes for bad performance:

- Slow internet connections that stall asset loading times

- A lack of developer awareness on the single-threaded nature of web browsers, which yields poorly performing front-end code

The power of performance engineering

Many users — especially those on 3G or similarly slow connection types — know that network conditions affect all sites equally, and that reloading a slow loading site can help speed things up. But users and web developers alike often fail to understand that issues stemming from a browser’s single-threaded nature are specific to individual sites — making the opportunity for website errors much larger.

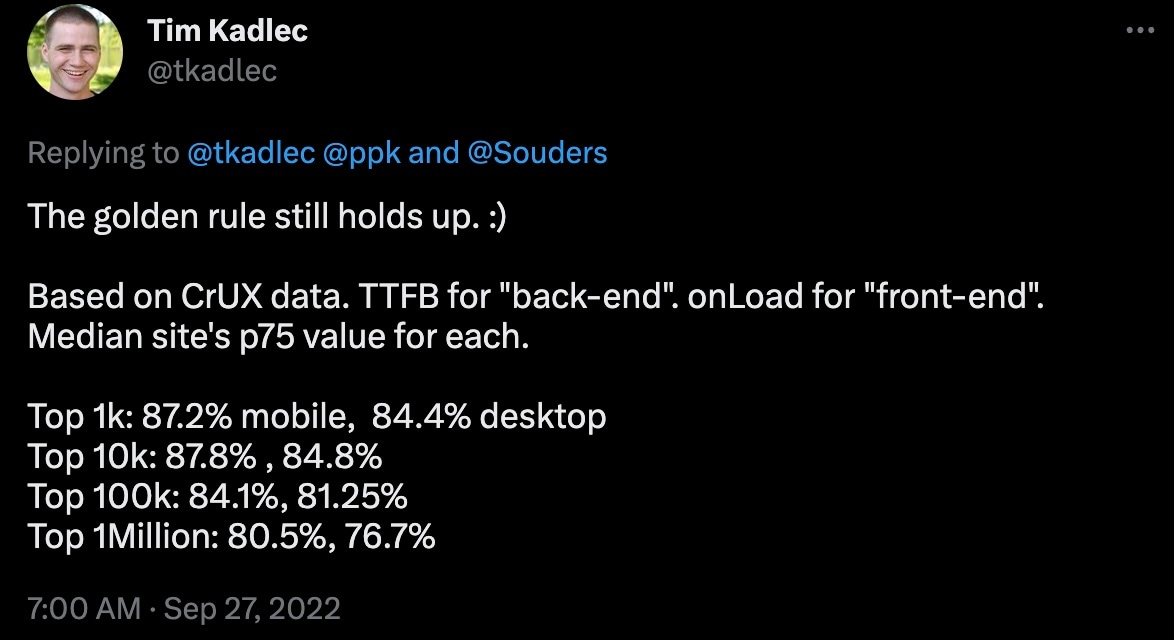

According to CrUX data provided by Tim Kadlec, director of engineering at Catchpoint, today between 80% and 85% of all website speed performance problems are caused by faulty front-end code private communication (Figure). That's why performance engineering is mostly focused on website front-end code — HTML, CSS, and JavaScript — that gets sent to, and is executed on, your users' browsers.

Fig: Tweet from Tim Kadlec providing updated information on website performance problems

Fig: Tweet from Tim Kadlec providing updated information on website performance problems

A closer look at how web browsers work

A browser is a piece of software that downloads all necessary files, such as code and images, to interpret, execute, and properly display web pages. It must provide all users with a consistently excellent browsing experience, no matter their technical literacy.

Breaking down browser essentials

To accomplish this goal, browsers are made up of several components. They have a networking component for sending and receiving HTTPS file requests, and a user interface component — containing the back button, URL bar, bookmarks, and more — to make user navigation easier. Crucially, they use both a rendering and JavaScript engine to enable optimal performance.

The JavaScript engine parses and executes JavaScript files (those with a “.js” extension). This is generally a module separate from the browser itself, so although rare, in theory a browser could switch from one JavaScript engine to another.

Finally, the rendering engine parses and interprets HTML and CSS, then delivers the Document Object Model (DOM) and other object models to the JavaScript engine. There are three main rendering engines: WebKit (for Safari), Gecko (for Firefox), and Blink (for Chrome, Edge, Samsung Internet, and most other browsers). Each rendering engine is different, which can lead to particular browser incompatibilities that won’t be covered in this article.

Threading for fast performance

For web performance purposes, it's best to view browsers as a single-threaded environment where only one task can be executed at a time. If the main thread is executing a task, any other pending tasks will have to wait for that task to complete. Some performance problems are caused by lengthy tasks that occupy the main thread, like executing large JavaScript files. When this happens, the browser can’t do important tasks such as displaying assets on the screen.

Certain browser tasks such as asset downloads are multi-threaded, but they deliver their output to the main thread, which does all the page rendering work. As such, managing the main thread’s performance is an important skill.

To summarize, browsers download and parse all required HTML, CSS, and JavaScript code, as well as images, videos, and fonts. They then construct several structures that allow JavaScript to use these assets, execute the JavaScript, and then render the page. Rendering, in turn, consists of styling, laying out, painting, and compositing.

Building a web page

The remainder of this article, as well as the next (and last) in the series, will discuss each of these steps in order, ignoring the aspects that don't generate many performance problems.

This list of browser performance resources offers even more in-depth information on how browsers work. You can also check out this excellent Google article for a behind-the-scenes look at browser structure and functionality.

As you read the rest of this article and the next one, think about a user clicking on a link to load a new website. What happens next?

Connecting with DNS, TLS, and TCP

The first thing browsers must determine is which next web page is ordered to load. Typically, a link on a web page contains a domain name such as “example.com.” This name must be translated into an IP address, which is a number identifying a specific web server. In the end, the requests from the browser must go to this web server — but to do so, it first needs the IP address.

For this reason, browsers connect to a special Domain Name System (DNS) that converts domain names into IP addresses. The DNS returns an IP address that browsers use for all subsequent requests.

The first thing that browsers do when attempting to connect to the correct web server is to establish a Transmission Control Protocol (TCP) connection, the protocol that handles all data transmission. TCP divides large files into small chunks that get sent individually, checks all incoming data for errors or missing parts, and re-requests chunks when necessary. To set up a secure TCP connection, a Transport Layer Security (TLS) handshake must first take place.

If a DNS server takes too long to respond or the web server is slow in setting up a TCP or TLS connection, browsers cannot continue with the downloading process and performance suffers. The connection times metric discussed in our previous article measures the response time for TCP and sometimes TLS. Long response times indicate a network problem. This article more thoroughly discusses the specific performance impacts of TLS.

Prefetching for faster connections

One way to help improve connection times is with DNS prefetching and preconnecting. If web developers know a web page will use several domains — for instance, “images.example.com” and “fonts.example.com” — it’s possible to tell browsers to make the DNS requests and immediately establish a TCP connection. This may reduce downloading time later when browsers access this domain.

To find DNS information, use a DNS prefetch:

<link rel="dns-prefetch" href="https://images.example.com/">

Resolving the domain name

The browser then resolves the new domain name, so the DNS information is already made available once it encounters the first link to images.example.com.

You can also save more time by setting up a TCP connection with servers for later use. However, doing so can be expensive — and in some cases, it's best to both make the connection and preload the assets that will be needed later.

<link rel="preconnect" href="https://images.example.com">

<link rel="preload" href="https://images.example.com/heroImg.jpg">

This article by Google can help you understand which of the two preconnect options to use depending on the context.

Downloading HTML

At this point, the browser has connected to the correct web server and downloading can begin. This process always starts with an HTML page.

HTML is the fundamental building block of the web. Every web page, even single-page applications (SPAs), must contain some HTML. Otherwise, it doesn’t count as a web page, soi other resources such as JavaScript code won’t be downloaded.

That's why the first step of a new web page download is to download its HTML. This HTML may be a static file on the server, or it may be generated by a server-side language such as PHP or Java. The amount of HTML code may be very small, such as a simple opening and closing <body> tag to later be populated by a script, but it must always be present.

Browsers start parsing and interpreting the HTML as soon as possible — even while downloading. They first look for references to other assets, such as a <link rel="stylesheet"> that refers to a CSS file, a <script src> that refers to a JavaScript file, or an <img src> that refers to an image. They start downloading these assets as soon as they encounter them, though there's a catch that we’ll discuss later.

Caching content

The downloading process is the first major cause of performance problems. If part of the network is slow, the site is also slow. Fortunately, this problem was recognized when the web was conceived, and caching was introduced to alleviate it.

Caching is the local storing of retrieved files for use later. If a user requests a certain page and its assets, those assets are stored on their computer. Thus, if the user later returns to the same page, browsers can find the relevant files in their local cache, so the download time is decreased significantly.

Web servers may cache frequently requested pages — especially if the pages were generated by a server-side program. That way, the program doesn't need to run every time the page is requested.

Caches have limited storage space, so occasionally pages that haven't been requested recently get removed to make place for others. Resources will not be cached forever.

In addition, the Firefox team discovered that in many cases, retrieving pages from the network was faster than retrieving them from the local disk — possibly because the local disk was old and slow.

Establishing a caching policy

Sometimes caching can actually be counterproductive. For example, a page containing news items that are frequently updated needs to consistently and directly access the server, since the cached page may lack the latest information.

That’s why you can actually set a caching policy on a per-page basis that establishes whether that page should be cached, and if so, for how long. Using this method, web developers can indicate whether a page will change often or rarely — and pages that fall into the second category can be cached for a much longer time.

As such, an important part of performance engineering is setting cache control headers to sensible values that reflect the expiration date of a website's content.

Some browsers, notably Safari on iOS, have fairly aggressive caching. This can be frustrating while developing a web page and reloading time and again: Sometimes the latest changes don’t show up. In desktop browsers, you can press the Shift key while reloading to tell the browser to skip caching and go directly to the original server.

Unfortunately, no such technique exists for mobile browsers; in that case, manually clearing the cache via the Preferences menus is the only solution.

Unpacking content delivery networks

Some websites, especially larger ones that get global traffic, use a content delivery network (CDN) to speed up the downloading process.

In a CDN, web servers around the world contain identical copies of a website’s static assets and users connect to whichever server is closest to them. This means that an Australian user connecting to a U.S. website doesn’t need to send requests to and receive responses from the United States, but instead to and from a local Australian server.

Although CDNs are conceptually simple, keeping them operational is anything but. All servers must be performance-tested and monitored for problems. In addition, CDNs need a system to seamlessly propagate website changes to every server. That's why CDNs are only offered by specialized companies like Akamai.

Ordering downloads

At this point, the time to first byte (TTFB) metric executes, since the browser has now received its first byte of content — either from the original web server, a CDN, or a cache. The first byte is always part of the HTML page that browsers always download first.

As soon as HTML code arrives, the browser begins parsing it. Each byte is analyzed and interpreted for signs of HTML code. For instance, a ‘<’ character would be interpreted as an HTML tag, not text. Regular text only resumes after the ‘>’ character is found.

While parsing the HTML, the browser will find references to other asset files such as CSS, JavaScript, images, and fonts. It must download those by default in the order they’re encountered. As such, the asset that's declared first is downloaded first, which can matter; if an image is important to a web page, it's best to put the <img> tag that declares that images near the beginning of the HTML to begin the download process early.

Preparing for efficient asset reuse

Another good idea is to prefetch assets you know the page will use later. This works similarly to the previously discussed DNS prefetch process: Browsers use idle time to fetch resources such as images that may be used later. Preconnecting to servers that might be queried later can also save on download time.

In either case, web developers must make an educated guess about which servers or assets will be needed later. Typically, this is based on common user paths through the site. But if a user’s path is unusual or the user leaves the site, the preconnects and prefetches can be wasted.

The fetch priority attribute allows for even finer control over the downloading process. Setting this value to “high” or “low” will force browsers to assign certain assets a high priority or a low priority. This allows web developers to indicate which assets are most important and which can wait. However, prioritization also depends on the resource type being requested. As such, fetch priority may not always work as expected. This article by Google unpacks how you can optimize resource loading with priority hints.

Parsing code

Once received, CSS and JavaScript are parsed. Here, the browser looks for special characters such as ‘{}’ and ‘()’ to determine which CSS style rules and JavaScript objects and functions exist. If the browser encounters JavaScript syntax errors, it’ll throw an error, but it won’t do so for CSS errors. In reality, the parsing rules are far more complicated than this summary suggests. But since parsing doesn’t cause severe performance problems, this article will largely ignore them.

Preparing assets

Preparing assets for quick downloading is an excellent way to make websites faster. However, each asset type requires unique preparation approaches and techniques.

A general rule is to minify and compress them. Minification removes all excess data, such as comments or newlines, while compression further reduces the asset's size.

Refining images for speed

Images need additional optimization. This topic deserves its own article, but here are some tips from Google. Picking the right image format (JPG, PNG, WebP) is important, since each has pros and cons. Images should be compressed and made responsive by manually or automatically generating several versions of each — from high- to low-resolution

Then, the browser or server chooses which version to use depending on the network conditions and the user's screen (smaller screens with fewer pixels don't need high-resolution images). It's also important to set an image’s size in the HTML, as this prevents a high Cumulative Layout Shift (CLS), which was discussed in the second article.

If necessary, preload important images that appear later on the page. In addition, always be sure to preload fonts:

<link rel="preload" href="https://example.com/myFont.woff2">

Halting CSS downloads

CSS files block rendering for slightly different reasons. If they didn’t, it would be possible for the HTML to fully load while the CSS is still unavailable. Browsers would have no choice but to render the unstyled HTML, only to change it later once the CSS had loaded.

This displays an unappealing and potentially confusing flash on the screen when the styles are applied and the site changes. But crucially, it also requires an extra rendering of the site.

The next article in this series will discuss rendering in more detail, but you should know that it's the most costly step in the entire process — and rendering the site a second time should be avoided at all costs. That's why CSS files block rendering.

Splitting CSS files

CSS files tend to be smaller than JavaScript files, and are far less costly to initialize. Unlike JavaScript, CSS doesn’t need executing. Still, there are several tricks that can make CSS blocking behavior less troublesome.

One such solution is to split your CSS file into critical and noncritical sections, the latter of which can be loaded later with the use of the preload attribute:

<link rel="stylesheet" href="load-now.css">

<link rel="preload" as="style" href="load-later.css">

Now, only the first style sheet blocks rendering, whereas the second doesn’t. The second one may become available once the page has appeared on screen, but if all crucial styles are in the first style sheet, this won’t be much of an issue.

Tagging with media queries

Another option is to use media queries in <link> tags:

<link rel="stylesheet" href="basics.css">

<link rel="stylesheet" href="small-screen.css" media="max-width: 400px">

<link rel="stylesheet" href="portrait-screen.css" media="orientation: portrait">

Browsers evaluate these media queries when they parse the <link> tags. If one of them doesn’t apply to the current situation, the style sheet won’t block rendering.

If the example code is loaded when the window is wider than 400 pixels and in landscape mode (on a desktop computer, for instance), the second and third style sheets are still loaded, but the browser now knows they're not needed immediately and won’t block rendering. Rendering is only blocked by the first style sheet that’s always loaded.

Still, you shouldn’t rely too heavily on media queries. Use, at most, three or four style sheets. Splitting your CSS into dozens of files — each with its own media query — is usually a bad idea, since each separate download uses some overhead. Large file and query counts will likely nullify the performance gains you get from not blocking rendering.

Inserting CSS-in-JS

When using large JavaScript frameworks such as React, it’s possible to use CSS-in-JS. This is where the CSS for small modules is set inside the module itself, and the JavaScript is responsible for locating the CSS and passing it to the browser. This is useful in some respects, notably because developers can maintain the CSS and JavaScript they need for that module.

Still, the performance implications are dire. Consider what happens when a script is found and executed, during which CSS is added.

Now, the browser has to both insert the CSS and re-calculate the styles for the entire web page. It’s true that the CSS is only applied to the module, but the browser has no way of knowing that.

Sam Magura, a software engineer at Spot, writes:

"Runtime CSS-in-JS libraries work by inserting new style rules when components render, and this is bad for performance on a fundamental level."

Put another way, while CSS-in-JS solves some issues, it introduces others. To ensure your website is as fast as possible, consider avoiding CSS-in-JS.

Analyzing metrics

Now that the browser has gathered all its resources, several metrics that were treated in the previous articles will begin executing:

- Error rate is now known: How many requests returned errors?

- Page size is now known: What’s the total size of all assets?

- Response time is now known: How long did the overall downloading process take?

- Page response time is now known: Which single asset had the longest response time?

Summary

By reading this article, you’ve discovered what happens when a user clicks a link and learned about the work browsers do in response, including:

- Establish a connection to the correct web server

- Download the HTML and all related assets

- Mitigate all blocking problems encountered during the entire process

Stay tuned for part 5: browsers and rendering

Now that the browser has gathered all the necessary resources, it can begin rendering the result of all the assets on screen. In the fifth and final article in this series, we’ll explore browser rendering in great detail.

Learn more

Once you’ve learned all there is to know about speeding up downloads on your website, head over to the Akamai TechDocs documentation site to discover how Akamai can help you to build faster, more engaging web experiences.