While an edge network is the sum of locations where devices interact with the internet and produce and consume data, the cloud is composed of virtualized computing resources that may reside on servers and data centers anywhere in the world.

An edge server is a server that operates at the edge of a network to provide processing, storage, networking, security, and other computing resources. Unlike cloud servers, which operate in data centers anywhere in the world, edge servers operate at far more locations or “points of presence” (PoPs) where data is produced and consumed by users and devices. These may include local computers, cell phones, Internet of Things (IoT) devices, self-driving vehicles, point-of-sale retail machines, and thousands of other devices in various locations. Edge servers move computer processing and storage closer to these locations to minimize latency, reduce bottlenecks, lower costs, and improve user experiences.

What are the types of edge servers?

There are several kinds of edge servers, each deployed for a different use case.

- CDN edge servers are located at a regional edge and support the needs of a content delivery network (CDN). These edge servers cache versions of static content that are located on an origin server, enabling them to serve content from locations closer to users to improve response times and enhance user experiences.

- Network edge servers are deployed in smaller data centers at edge locations, placing computing resources closer to users to minimize latency.

- On-premises edge servers are located in individual companies or enterprise data centers.

- Device edge nodes or servers exist on or within an endpoint device such as a smart machine and provide edge computing for tasks like monitoring and analytics.
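The caching behavior of a CDN edge server described above can be illustrated with a small Python sketch. It is a toy model rather than how any particular CDN is implemented: the `EdgeCache` class, the `hypothetical_origin` function, and the 60-second time to live are all assumptions made for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy model of a CDN edge server that caches static content from an origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float = 60.0):
        self._fetch = fetch_from_origin          # hypothetical call to the origin server
        self._ttl = ttl_seconds                  # assumed time to live for cached entries
        self._store: Dict[str, Tuple[bytes, float]] = {}  # path -> (body, expiry time)
        self.origin_requests = 0                 # how often the origin had to be contacted

    def get(self, path: str) -> bytes:
        now = time.monotonic()
        entry = self._store.get(path)
        if entry is not None and entry[1] > now:
            return entry[0]                      # cache hit: served from the edge
        # Cache miss or expired entry: go back to the origin and store the result.
        self.origin_requests += 1
        body = self._fetch(path)
        self._store[path] = (body, now + self._ttl)
        return body


def hypothetical_origin(path: str) -> bytes:
    """Stand-in for an origin server; a real one would be reached over the network."""
    return f"content of {path}".encode()


cache = EdgeCache(hypothetical_origin, ttl_seconds=60.0)
for _ in range(3):
    body = cache.get("/logo.png")

print(cache.origin_requests)  # the origin is contacted once; later requests hit the cache
```

In this sketch, three requests for the same path reach the origin only once, which is the mechanism behind the reduced origin workload discussed later in the article.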

What are the benefits of an edge server?

By performing compute, storage, networking, and security functions close to the users and devices that need them, edge servers provide several significant advantages.

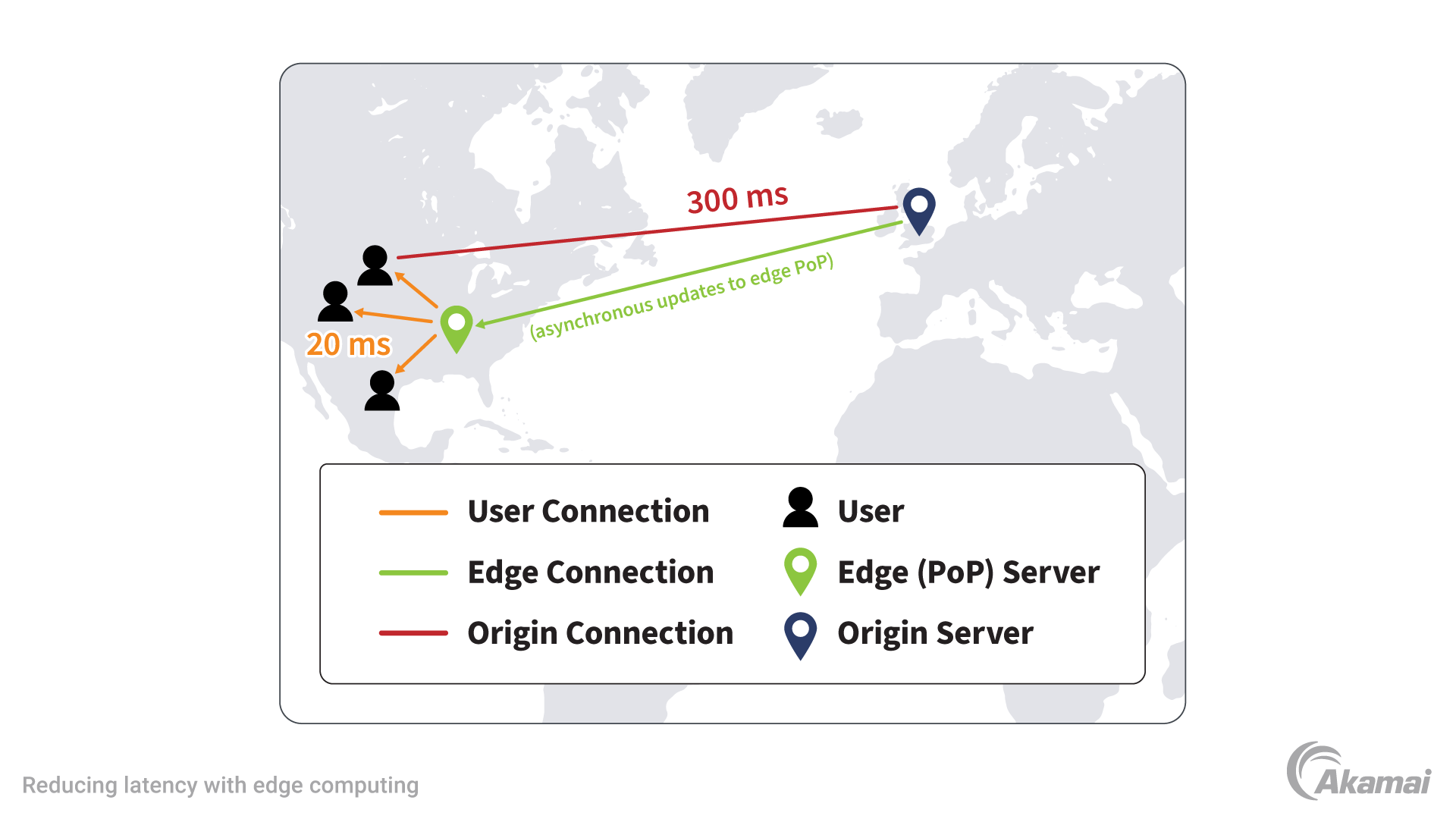

- Reduced latency. Edge servers minimize the distance that data must travel by serving content or processing data closer to users and devices. The result is less latency, better user experiences, and faster decision-making capabilities.

- Stronger security. Because edge servers reside closer to users and devices, data can travel a shorter distance and is exposed to fewer potential risks of cyberattack. In specific use cases like back-end core banking systems or processing for industrial equipment, edge servers provide stronger security by not exposing data to outside networks.

- Reduced workload for origin servers. CDN edge servers cache copies of content stored on origin servers elsewhere in the world. Serving up content from CDN edge servers reduces the workload on origin servers and makes them less susceptible to degraded performance during traffic spikes.

- Lower costs. Because edge servers minimize the amount of data that must be exchanged with external networks, they can reduce the amount of required bandwidth and associated cost.

- Higher availability. Edge servers can continue to function and serve content even when cloud servers and central data centers are down. And because an edge network comprises thousands of servers, an outage that affects the whole network is far less likely.

- Greater reliability. With multiple servers located at the network’s edge, traffic and application requests can be routed to the next nearest point of presence when one edge server goes down.

- Greater efficiency. Edge servers make operations more efficient by rapidly processing massive amounts of data near the places where the data is collected.

- Simpler data sovereignty compliance. By keeping data close to where it’s produced and used, rather than transmitting it to cloud data centers elsewhere in the world, organizations can more easily comply with local data sovereignty and data protection regulations like GDPR.
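Two of the benefits above, reduced latency and greater reliability, can be made concrete with a short Python sketch. It picks the nearest healthy point of presence and estimates propagation delay from distance. The `PoP` class, the PoP names, and the distances are hypothetical, and the delay estimate assumes signals travel roughly 200 km per millisecond in optical fiber, ignoring routing, queuing, and processing time.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class PoP:
    name: str
    distance_km: float   # hypothetical distance from the user to this PoP
    healthy: bool = True


def pick_pop(pops: Iterable[PoP]) -> Optional[PoP]:
    """Return the nearest healthy PoP, or None if every PoP is down."""
    candidates = [p for p in pops if p.healthy]
    return min(candidates, key=lambda p: p.distance_km, default=None)


def one_way_delay_ms(distance_km: float, km_per_ms: float = 200.0) -> float:
    """Propagation delay only, assuming about 200 km per ms in optical fiber."""
    return distance_km / km_per_ms


pops = [
    PoP("edge-a", 50, healthy=False),   # nearest PoP, but currently down
    PoP("edge-b", 300),                 # next nearest PoP
    PoP("central-cloud", 6000),         # distant central data center
]

chosen = pick_pop(pops)
print(chosen.name, one_way_delay_ms(chosen.distance_km))  # edge-b 1.5
print(one_way_delay_ms(6000))                             # 30.0
```

Even with the nearest edge server down, the request falls over to the next nearest PoP at an estimated 1.5 ms one way, compared with 30 ms to the distant data center in this made-up example.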

What are examples of use cases for edge servers?

Edge servers are typically deployed in use cases that require ultra-fast, real-time data processing. These may include:

- Self-driving vehicles that require large amounts of data to be processed for real-time decision-making

- IoT devices that monitor conditions, equipment, or patients in high-stakes environments

- Banking apps that require fast performance and strong security, where sensitive data must be isolated

- Streaming services that deliver cached content to users with low latency and high bandwidth

- Surveillance systems that require real-time data analysis

- Remote monitoring systems for oil and gas operations and assets

How can organizations protect edge servers?

To protect edge servers, IT teams should adopt a multilayered approach to security. Strong identity and access management prevents unauthorized users from accessing edge servers and limits overly broad permissions for authorized users, which can inadvertently lead to security breaches. Data encryption protects data traveling between edge servers and devices. Intrusion detection systems monitor activity at the network’s edge to uncover possible intrusions. Endpoint protection helps monitor security risks on remote devices that connect to edge servers. Web application firewalls block threats like DDoS attacks at the edge.

Frequently Asked Questions (FAQ)

Why are edge servers ideal for IoT networks?

Edge servers are ideal for IoT networks because they bring computing power as close as possible to the vast number of connected devices and applications in an IoT network. By reducing latency and improving performance, edge servers enable IoT networks to deliver real-time data that can enhance decision-making and awareness for organizations that rely on the data collected by IoT devices.

A distributed edge architecture is also far better suited to handling millions of devices sending data and updates. As with video and website delivery, once traffic grows, a single centralized cloud can’t meet the cost and performance requirements of this type of use case, so a distributed architecture becomes necessary.
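One way an edge server can ease the load of many devices is to aggregate raw readings locally and forward only a summary upstream. The following Python sketch assumes a hypothetical batch of temperature readings and a hypothetical `summarize` helper. It illustrates the idea and does not describe any specific product.

```python
from statistics import mean
from typing import Dict, List


def summarize(readings: List[float]) -> Dict[str, float]:
    """Collapse a batch of raw sensor readings into one small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical temperature readings collected by an edge server from nearby sensors.
readings = [20.0, 21.0, 22.0, 25.0]

summary = summarize(readings)
print(summary)  # {'count': 4, 'min': 20.0, 'max': 25.0, 'mean': 22.0}
```

Here four raw values are reduced to a single record before anything leaves the edge. With real batches of thousands of readings, the reduction in upstream traffic would be correspondingly larger.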

What is the network edge?

In computing, the network edge is everywhere that devices, servers, machines, local computers, and local networks interact with the internet as they produce, process, and consume data.

What is cloud edge?

Cloud edge occurs when cloud resources are moved closer to the edge of a network where data is generated, processed, and consumed by end users and devices. Cloud edge scenarios enable computing processes to occur with lower latency, higher availability, and stronger security.

What is AI on edge?

AI on edge refers to artificial intelligence (AI) applications that reside on edge servers. This enables computations to be performed closer to where data is collected instead of at cloud computing centers, which may be located anywhere in the world. Running AI on edge servers enables results to be returned faster, enhancing real-time monitoring and decision-making.
