Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications, workloads, and services.

Containers are packages of software that have all the files and dependencies required to run in any environment, including application code, runtime, system libraries, and system tools. By virtualizing the operating system, containers can run in dev, test, and production environments, or in any computing environment from personal devices to public clouds or private data centers.

How do containers work?

Containers involve several components.

- Container images are complete, static, lightweight, executable packages of software that contain all the information needed to run a container. There are several container image formats; the most common is the image format defined by the Open Container Initiative (OCI).

- Container engines access container images from a repository and run them. Some of the most common container engines and runtimes include Docker, CRI-O, containerd, runc, LXD, and rkt. Container engines can run on any container host, including personal devices, physical servers, or the cloud.

- Containers are running instances of container images, started by the container engine. The host operating system (typically Linux, macOS, or Microsoft Windows) limits each container’s access to resources like CPU, memory, and storage, which prevents one container from consuming a disproportionate amount of physical resources.

- Container scheduler and orchestration technology manages the deployment and running of containers.

What is containerization?

Containerization is the task of bundling software code with the operating system libraries, configuration files, and other dependencies required to run the code. By offering a “build once, run anywhere” approach to software development, containerization enables developers to create and deploy applications more quickly and securely.

Why are containers needed?

As digital transformation has made the world more interconnected, applications are frequently required to run in many different environments. In the past, many applications would not run correctly without modification when moved from one environment to another, typically because of configuration differences in underlying infrastructure and dependencies.

Containers solve this problem by packaging software in a lightweight unit that contains everything required for an application to run. In this way, containers allow developers and IT teams to deploy software across environments without needing to modify code. As a result, IT teams can count on consistent behavior across different machines and environments. Developers can change code and add new dependencies to containers without worrying that the revised code will be incompatible with a particular environment.

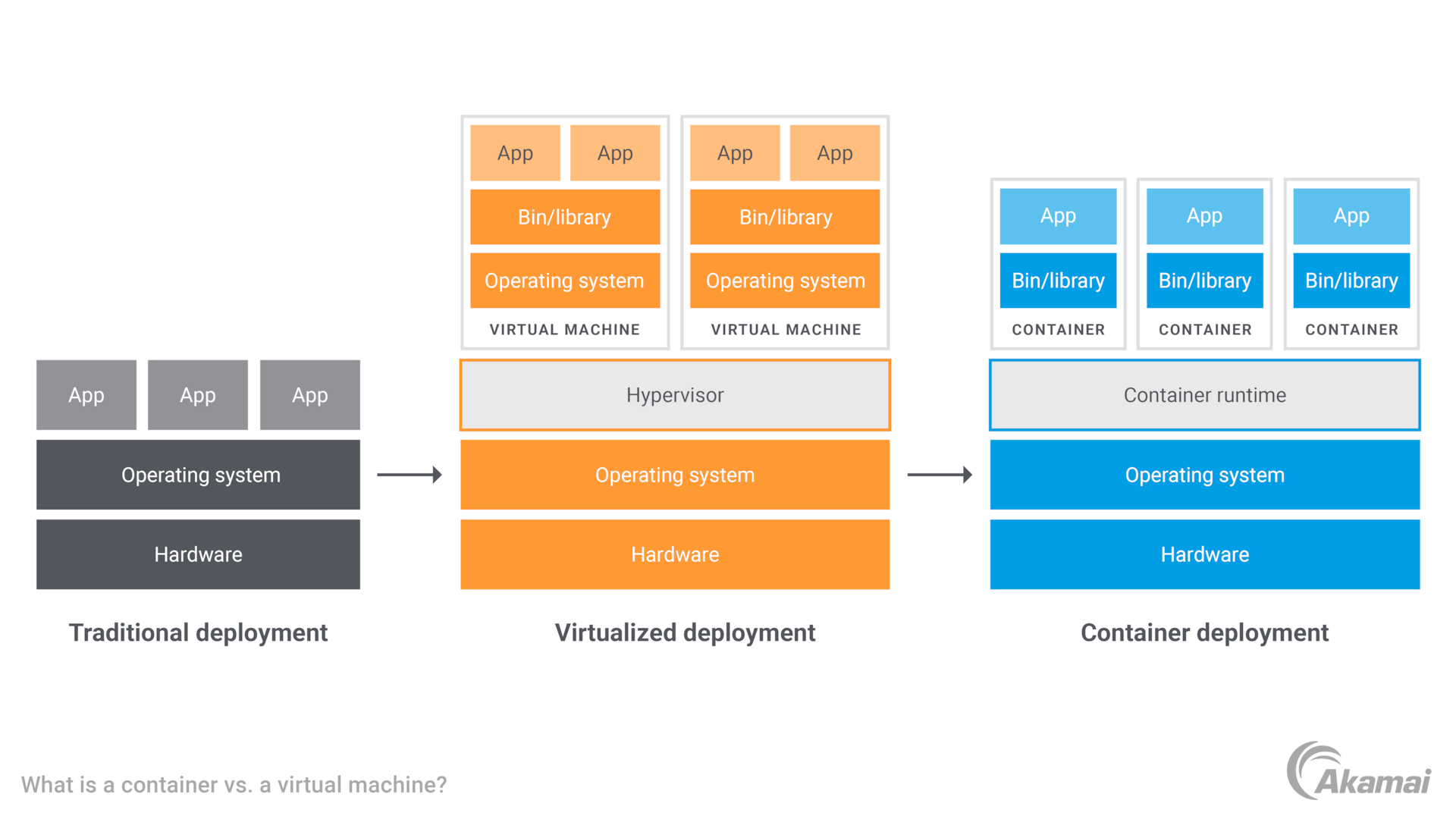

What is a container vs. a virtual machine?

Virtual machines (VMs) are another virtualized computing model. VMs use a hypervisor to virtualize the physical hardware required to run a server, and each VM contains a guest operating system along with applications, libraries, and dependencies. VMs can consume a significant amount of system resources, especially when several VMs — each with its own guest OS — are running on the same physical server.

In contrast, container technology virtualizes at the OS level rather than the hardware level, and multiple containers share the host OS kernel. Because no container carries its own guest OS, containers use a small fraction of the memory required by VMs. This also allows containers to start up in seconds, while VMs may require several minutes.
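To make the memory comparison concrete, here is a rough back-of-the-envelope sketch. Every figure in it (the guest OS size, the application size, the per-container overhead) is a hypothetical placeholder chosen for illustration, not a measurement, and the model deliberately ignores hypervisor overhead.

```python
# Illustrative only: every number here is a made-up assumption, not a measurement.

def total_memory_mb(instances, app_mb, per_instance_overhead_mb, shared_overhead_mb=0):
    """Estimate the memory needed to run `instances` copies of an application.

    per_instance_overhead_mb: cost paid once per instance
        (a full guest OS for a VM, a small runtime shim for a container).
    shared_overhead_mb: cost paid once per host
        (the host OS kernel that all containers share).
    """
    return shared_overhead_mb + instances * (app_mb + per_instance_overhead_mb)


GUEST_OS_MB = 1024        # hypothetical size of one guest OS
APP_MB = 200              # hypothetical size of the application itself
CONTAINER_SHIM_MB = 10    # hypothetical per-container overhead

# Ten VMs: each one carries its own guest OS.
vm_total = total_memory_mb(10, APP_MB, per_instance_overhead_mb=GUEST_OS_MB)

# Ten containers: one shared host OS, plus a small overhead per container.
container_total = total_memory_mb(
    10, APP_MB, per_instance_overhead_mb=CONTAINER_SHIM_MB, shared_overhead_mb=GUEST_OS_MB
)

print(f"VMs: {vm_total} MB, containers: {container_total} MB")
```

Under these assumed numbers, the gap comes almost entirely from paying for the operating system once per host instead of once per instance.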

What is a container designed to do?

Containers support multiple use cases.

- DevOps. Containers enable application development teams to adopt a cloud-native development model for DevOps, continuous integration and continuous deployment (CI/CD), and serverless frameworks.

- Microservices. The lightweight quality of containers makes them ideal for microservice architectures in which software is made up of multiple, loosely coupled smaller services.

- Cloud computing. Because containers provide consistent performance anywhere, they are ideal for multicloud and hybrid cloud deployments where workloads may move between public cloud and private data center environments.

- Cloud migration. Containers are often used to modernize applications as IT teams prepare them for cloud migration. Containers are also an ideal candidate for services that will be refactored during migration.

What are the benefits of containers?

Containers offer organizations many advantages, including:

- Greater efficiency. Since they share the same operating system kernel as the host, containers are more efficient than VMs.

- Easier management. Containers make it possible to quickly deploy, patch, and scale applications.

- Workload portability. Containers allow IT teams to deploy and run workloads anywhere: on Linux, Windows, or macOS; on VMs or physical servers; and on individual devices, in on-premises data centers, or in the cloud.

- Consistent performance. Containers provide reliable performance across multiple operating systems and platforms.

- Lower overhead. When compared to traditional computers and VMs, containers have far fewer system resource requirements for memory, CPU, and storage. This makes it possible to support many more containers on the same infrastructure.

- Optimized utilization. By enabling a microservices architecture, containers make it easier to deploy and scale application components granularly, avoiding the need to scale up an entire application when a single component is unable to manage a workload.
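The idea of scaling components granularly can be sketched in a few lines. The service names, request rates, and per-replica capacities below are hypothetical, and the sizing rule (divide load by capacity and round up) is a simplifying assumption rather than how any particular platform decides.

```python
import math


def replicas_needed(load_rps, capacity_rps_per_replica):
    """Smallest number of replicas that covers the load (never fewer than one)."""
    return max(1, math.ceil(load_rps / capacity_rps_per_replica))


def scale_plan(services):
    """Compute a replica count for each service independently."""
    return {
        name: replicas_needed(s["load_rps"], s["capacity_rps"])
        for name, s in services.items()
    }


# Hypothetical microservices: only "checkout" is under heavy load.
services = {
    "checkout": {"load_rps": 900, "capacity_rps": 100},
    "catalog": {"load_rps": 150, "capacity_rps": 100},
    "search": {"load_rps": 80, "capacity_rps": 100},
}

plan = scale_plan(services)
print(plan)
```

In this sketch only the busy component receives many replicas, while the lightly loaded components stay small, which is the contrast with scaling an entire application as one unit.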

What is container orchestration?

Container orchestration is the task of managing multiple container deployments across an organization. A container orchestrator is a platform that automates the lifecycle of container management, including tasks such as provisioning and deployment, resource allocation, maintaining uptime, scaling up and down, service discovery, networking, storage, and security.

Orchestration provides visibility and control over where containers are deployed and how workloads are allocated across several containers. Orchestration avoids the need to manage containers manually and allows IT teams to apply policies selectively or collectively to a group of containers.
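As a rough illustration of what deciding "where containers are deployed" involves, the following toy scheduler places containers on nodes with a first-fit rule. The `Node`, `ContainerSpec`, and `schedule` names are hypothetical, and real orchestrators use far more elaborate filtering and scoring; this is only a sketch of the resource-allocation idea.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_free: float          # CPU cores still available
    mem_free: int            # memory in MiB still available
    containers: list = field(default_factory=list)


@dataclass
class ContainerSpec:
    name: str
    cpu: float               # CPU cores requested
    mem: int                 # memory in MiB requested


def schedule(specs, nodes):
    """First-fit placement: put each container on the first node with room.

    Returns the names of containers that could not be placed anywhere.
    """
    unplaced = []
    for spec in specs:
        for node in nodes:
            if node.cpu_free >= spec.cpu and node.mem_free >= spec.mem:
                node.cpu_free -= spec.cpu
                node.mem_free -= spec.mem
                node.containers.append(spec.name)
                break
        else:
            # No node had enough free CPU and memory for this container.
            unplaced.append(spec.name)
    return unplaced


nodes = [Node("node-a", cpu_free=2.0, mem_free=2048), Node("node-b", cpu_free=1.0, mem_free=1024)]
specs = [
    ContainerSpec("web", 1.0, 512),
    ContainerSpec("api", 1.0, 1024),
    ContainerSpec("db", 1.0, 1024),
    ContainerSpec("cache", 1.0, 256),
]

unplaced = schedule(specs, nodes)
for node in nodes:
    print(node.name, node.containers)
print("unplaced:", unplaced)
```

Even this small example shows why manual placement does not scale: once the nodes are full, something has to track remaining capacity and report the workloads that no longer fit.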

Kubernetes is the best-known container orchestration platform and can be deployed on any infrastructure, including public clouds, on-premises data centers, and edge networks. Red Hat® OpenShift® is an enterprise-ready Kubernetes platform that provides full-stack, automated operations on any infrastructure along with self-service environments for building containers.

What are security threats to containers?

While containers allow IT teams to deploy software anywhere and start applications quickly, they also create another area of security concern. Developers and IT teams can take several steps to prevent containers from being exploited by attackers, including:

- Embracing security best practices and adding validations at the application/code level

- Running containers on a securely configured orchestration platform such as Kubernetes

- Adding network restrictions at the cluster level to filter out unauthorized traffic

- Choosing a secure and reliable container platform at the cloud level

- Scanning container images for malware

- Enforcing strong controls in container registries

- Using security tools to address vulnerabilities that may become active during container runtime
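One of the controls above, restricting which registries images may come from, can be sketched as a simple allowlist check. The registry name `registry.example.com` and the helper names are hypothetical. The parsing follows the common Docker convention for image references, and in practice a rule like this would usually be enforced by the platform rather than by application code.

```python
def registry_of(image_ref):
    """Return the registry host of an image reference.

    Common Docker convention: the first path component is treated as a
    registry only if it contains '.' or ':' or equals 'localhost';
    otherwise the image is assumed to come from Docker Hub (docker.io).
    """
    first, sep, _rest = image_ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


# Hypothetical allowlist: only the organization's own registry is trusted.
ALLOWED_REGISTRIES = {"registry.example.com"}


def is_allowed(image_ref, allowed=ALLOWED_REGISTRIES):
    """True if the image comes from a registry on the allowlist."""
    return registry_of(image_ref) in allowed


for ref in ["registry.example.com/team/app:1.0", "nginx:1.25", "localhost:5000/app"]:
    print(ref, "->", registry_of(ref), "allowed" if is_allowed(ref) else "rejected")
```

A check like this only establishes where an image came from. It complements, and does not replace, scanning the image contents.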

FAQs

When were containers introduced?

Container technology first appeared several decades ago, but it was popularized by Docker in 2013 to meet the demands of the modern computing era.

What is Docker?

Docker is an open-source platform for building, deploying, and managing containers on a common operating system. The Docker platform packages, provisions, and runs containers, using resource isolation in the OS kernel to run multiple containers on the same operating system.

What is the Open Container Initiative?

The Open Container Initiative (OCI) is an open governance structure under the Linux Foundation that is dedicated to creating open industry standards for container formats and runtimes.
