Accelerating Zero Trust Outcomes with Generative AI, Part 2: Guardicore AI

One of the most recognizable applications of generative AI in the market today is the chatbot powered by artificial intelligence (AI), such as OpenAI’s ChatGPT. This next generation of chatbots, built on generative AI’s learning capabilities, is making a huge impression on the public.

One could argue that the debut of ChatGPT in November 2022 brought generative AI fully into the public consciousness, and for good reason. The potential of these chatbots sparks the imagination, for better and for worse, and the technology continues to evolve as investments in AI reach record highs.

This blog series highlights our use of generative AI on our platform for Zero Trust. In the first blog post, Accelerating Zero Trust Outcomes with Generative AI, Part 1: AI Labeling, we introduced AI Labeling. For this second installment, though, we’ll focus on the future of achieving Zero Trust outcomes, when microsegmentation will no longer be a primarily manual effort.

Introducing Guardicore AI

A trained generative chatbot can be quite useful, especially for speeding up manual tasks, querying relevant information, and making informed recommendations. With that mindset, we decided that one of the best ways to leverage generative AI in our industry-leading segmentation solution was to build our own chatbot and train it specifically to assist security practitioners with their Zero Trust segmentation initiatives.

We are excited to unveil our new Guardicore AI chatbot. Simply put, Guardicore AI is a generative AI-powered chatbot that will serve as a built-in segmentation and Zero Trust expert, as well as an in-house cybersecurity advisor. It is driven by our customers’ desire to query their networks in natural language, speed up manual tasks, and receive advice based on best practices. Our chatbot will provide a platform in which the necessary components of Zero Trust require less manual effort to operationalize by:

Accelerating operations and providing support

Acting as a security consultant

Accelerating operations and providing support

The first version of Guardicore AI, including the following capabilities, will start rolling out to existing customers in Q2 2024.

Network traffic analytics

The first thing you’ll be able to do with Guardicore AI is glean insights about what’s going on in your network at any given moment. This information is already provided by the Reveal map, but Guardicore AI will enable you to ask specific questions about certain aspects of your network traffic, in natural language, and receive the exact information you need.

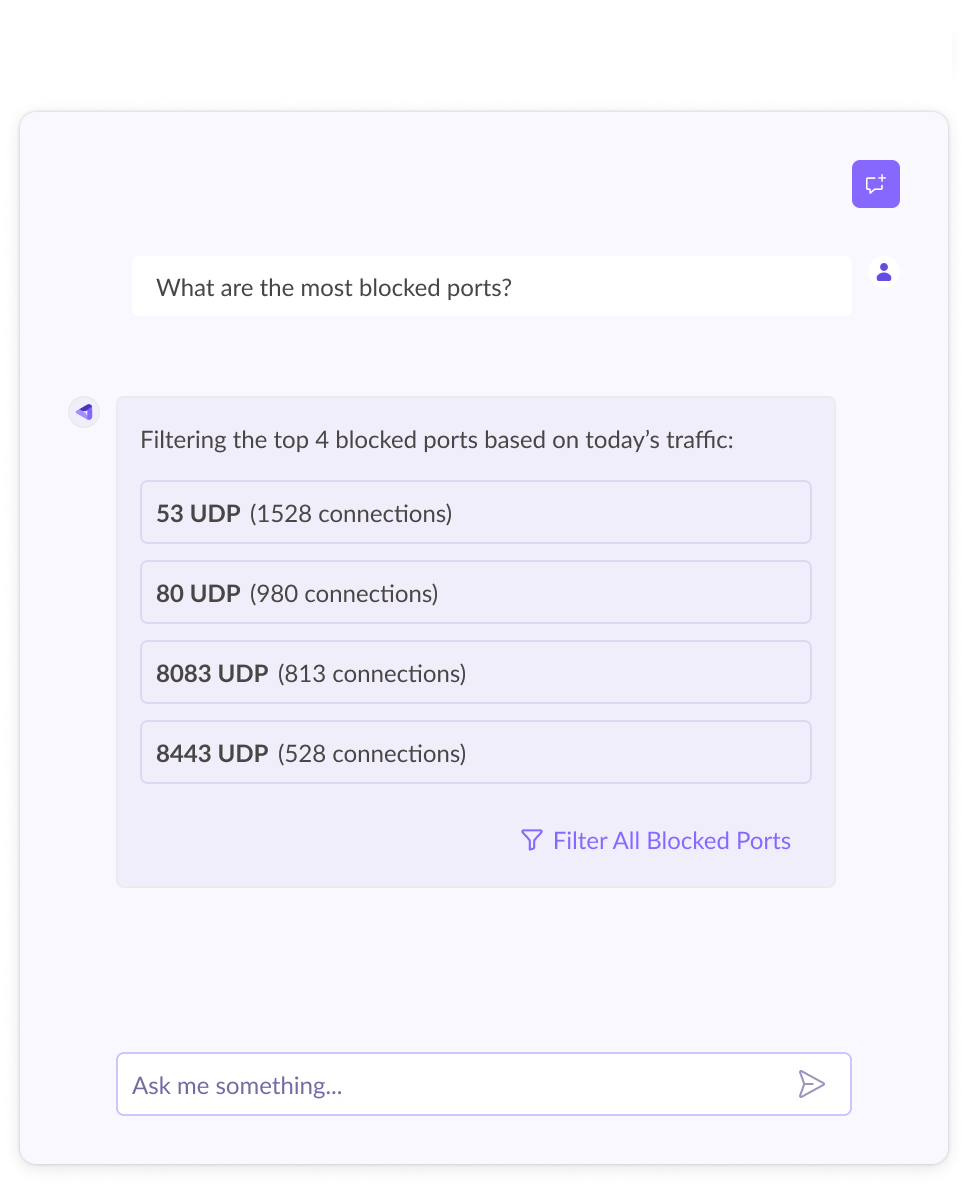

For example, you can ask the chatbot a simple question like “What are the most blocked ports?” and quickly receive a comprehensive answer (Figure 1).

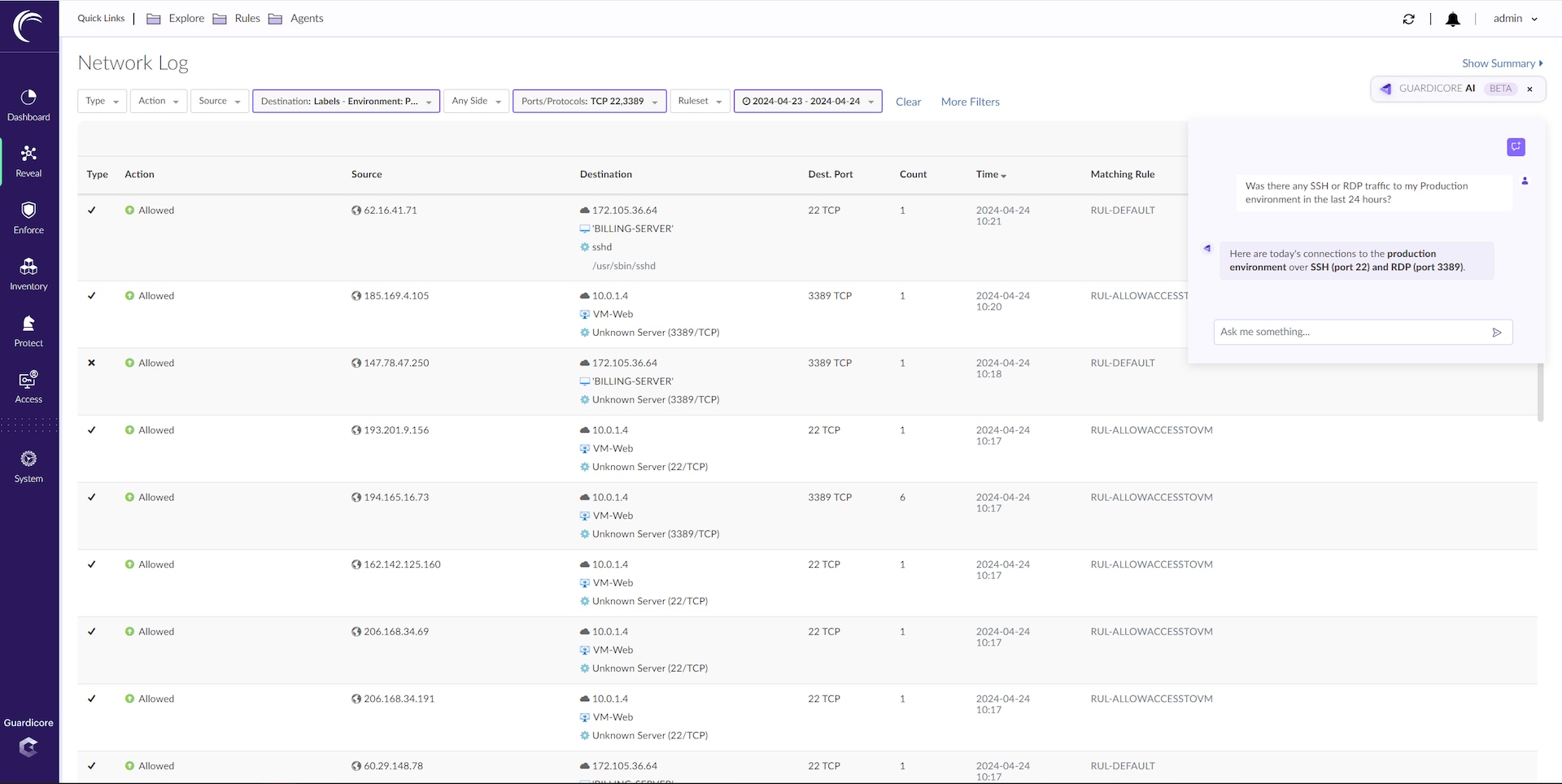
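Behind a question like this sits a simple aggregation. As a rough illustration only, the Python sketch below counts blocked connections per destination port. It does not show how Guardicore AI is implemented, and the record fields (`dst_port`, `verdict`) are hypothetical rather than the product's actual log schema.

```python
from collections import Counter

# Hypothetical connection-log records. Field names are illustrative only
# and are not taken from the actual product log schema.
connection_log = [
    {"src": "10.0.1.5", "dst": "10.0.2.9", "dst_port": 445, "verdict": "blocked"},
    {"src": "10.0.1.6", "dst": "10.0.2.9", "dst_port": 445, "verdict": "blocked"},
    {"src": "10.0.1.7", "dst": "10.0.2.9", "dst_port": 445, "verdict": "blocked"},
    {"src": "10.0.3.2", "dst": "10.0.2.4", "dst_port": 3389, "verdict": "blocked"},
    {"src": "10.0.3.3", "dst": "10.0.2.4", "dst_port": 3389, "verdict": "blocked"},
    {"src": "10.0.4.1", "dst": "10.0.2.8", "dst_port": 22, "verdict": "blocked"},
    {"src": "10.0.1.5", "dst": "10.0.5.1", "dst_port": 443, "verdict": "allowed"},
    {"src": "10.0.1.6", "dst": "10.0.5.1", "dst_port": 443, "verdict": "allowed"},
]


def most_blocked_ports(records, top_n=3):
    """Return the top_n (port, count) pairs among blocked connections."""
    counts = Counter(
        r["dst_port"] for r in records if r["verdict"] == "blocked"
    )
    return counts.most_common(top_n)


top_ports = most_blocked_ports(connection_log)
print(top_ports)  # [(445, 3), (3389, 2), (22, 1)]
```

The value of a natural language interface is that nobody has to write or maintain this kind of query logic by hand.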

Network log and map filtering

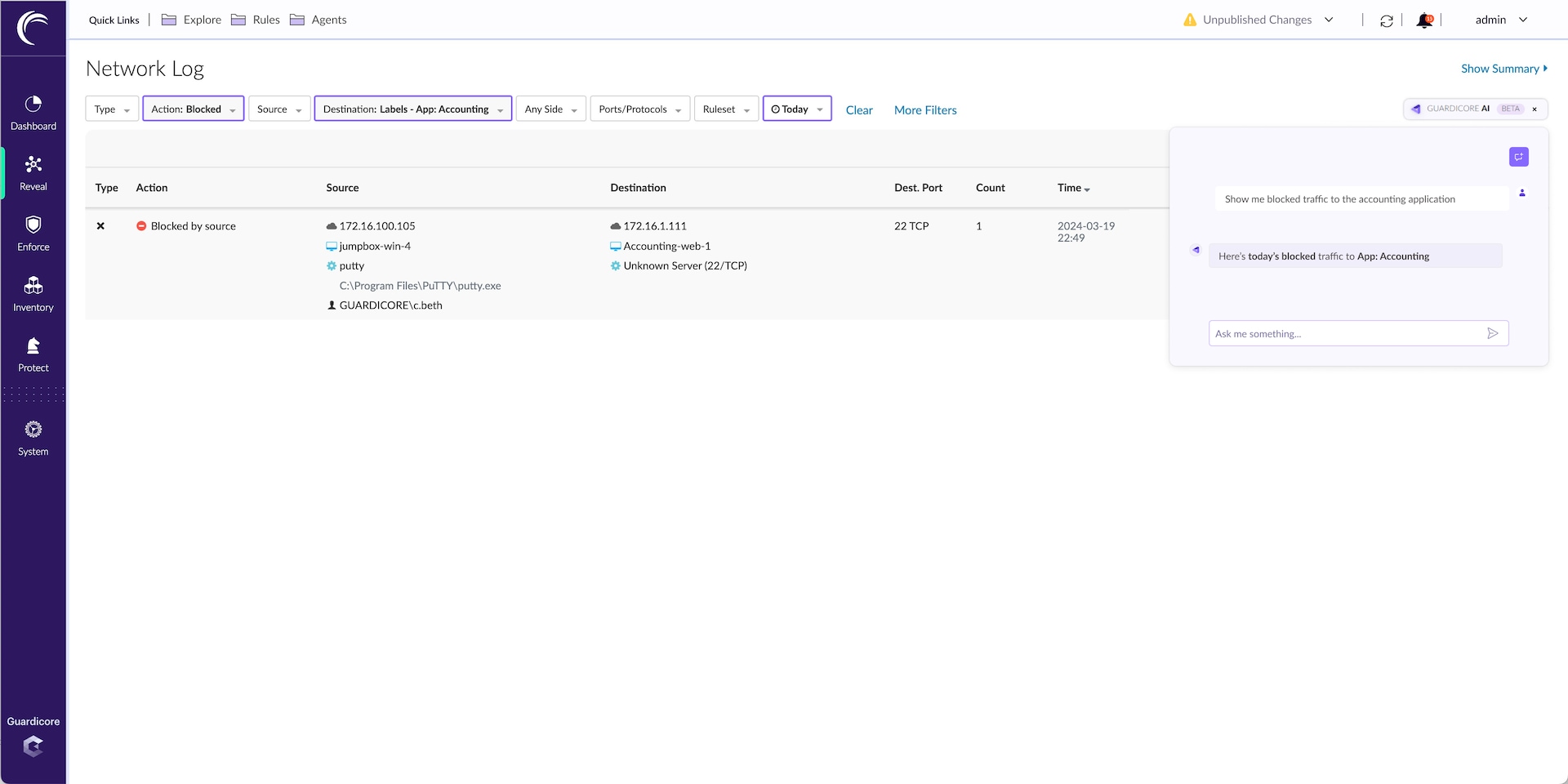

You’ll also be able to query the network logs for specific information, and then use that information to create filters for the Reveal map (Figure 2). Those filters will allow you to visualize the information, which will aid in understanding and knowledge sharing.

Acting as a security consultant

Our vision for Guardicore AI goes well beyond analytics and querying. We want this chatbot to be a guide and advisor to those who are trying to make real progress on their Zero Trust journeys, helping them accelerate segmentation initiatives and decrease time to policy.

The ability to deploy segmentation faster and more easily will remove some of the major obstacles to full Zero Trust implementation in modern IT environments, significantly reduce the overall attack surface, and help prevent ransomware and other malicious threats from succeeding.

Some examples of features to come in Guardicore AI include:

Detailed analyses of your network and solution deployment data (traffic data, assets, labels, etc.)

Guidance on best practices for operationalizing Akamai Guardicore Segmentation

Suggestions on which areas or applications to segment next

Suggestions on how to mitigate the latest CVEs using Akamai Guardicore Segmentation

Assistance in solution troubleshooting

Generation of SQL queries from natural language for Insight

Convert natural language into SQL queries for Insight

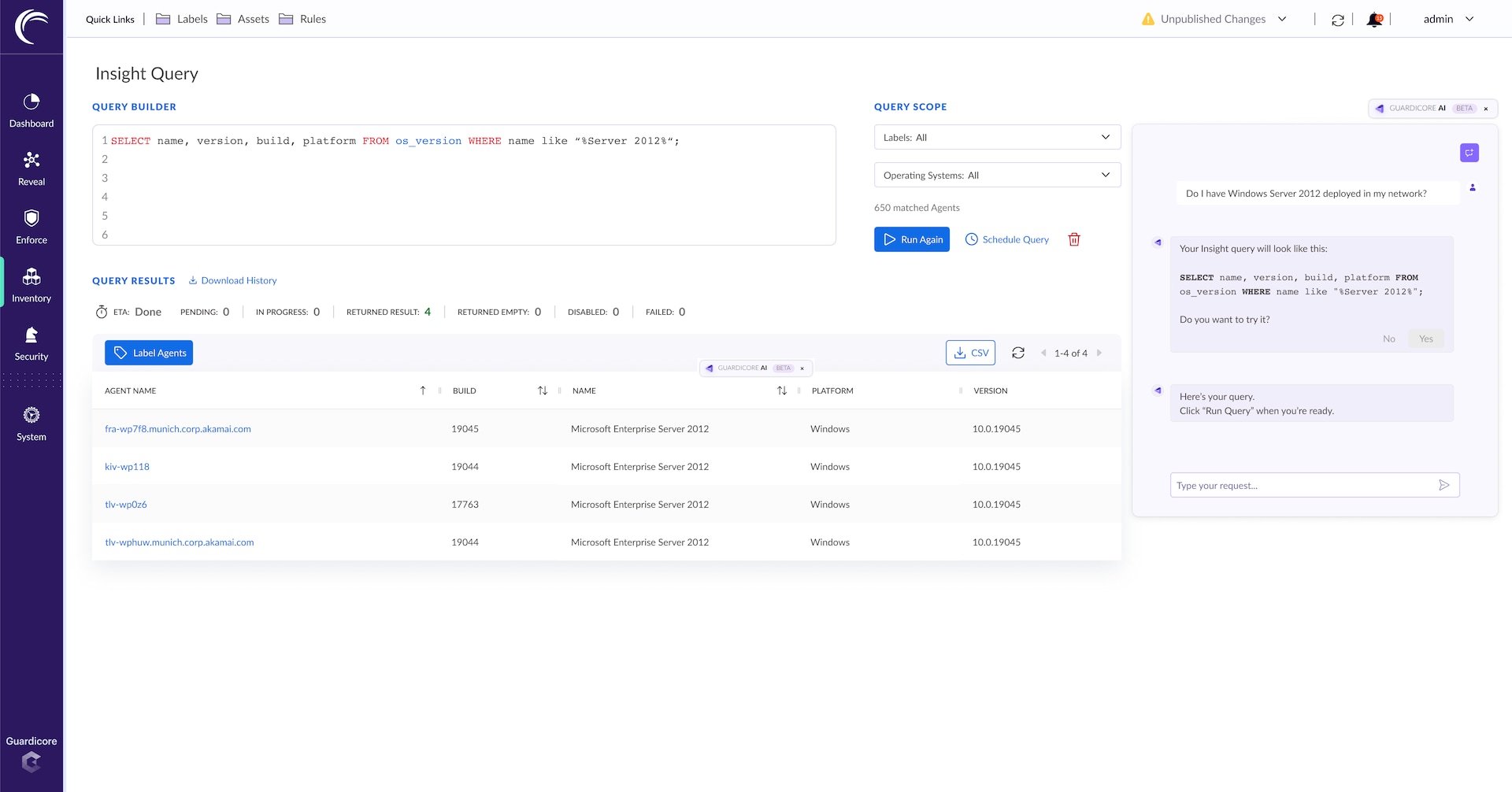

Customers who are using our Insight feature (our osquery-based engine for collecting real-time context from assets communicating in the network) will soon be able to create queries in natural language.

This will add significant value for our customers, because one of the main obstacles to the widespread use of Insight is that queries must be written in SQL. That requires somebody on your team to be comfortable writing SQL, and while many teams have at least one person who can do this, it shouldn’t be a prerequisite for getting maximum value out of this feature.

Figure 3 is an example of how Guardicore AI will be able to convert natural language into a SQL command, which can then be used in Insight to generate the relevant information.
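For readers who have not written this kind of query before, here is a minimal sketch of what the SQL side of such a translation could look like. The question and query are hypothetical and are not taken from Figure 3. The `processes` and `listening_ports` tables are modeled on the open source osquery schema, and we assume here, for illustration only, that a query of this shape is representative. To keep the example runnable anywhere, Python's built-in `sqlite3` module stands in for the query engine, with a few made-up rows.

```python
import sqlite3

# Hypothetical natural-language question:
#   "Which processes are listening on port 22?"
# One SQL query that could answer it, using osquery-style table names.
QUERY = """
SELECT p.name, p.path, lp.port
FROM listening_ports AS lp
JOIN processes AS p ON p.pid = lp.pid
WHERE lp.port = 22
ORDER BY p.name;
"""

# In-memory stand-in for the real data source, populated with made-up rows.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE processes (pid INTEGER, name TEXT, path TEXT);
    CREATE TABLE listening_ports (pid INTEGER, port INTEGER, address TEXT);
    INSERT INTO processes VALUES (101, 'sshd', '/usr/sbin/sshd');
    INSERT INTO processes VALUES (202, 'nginx', '/usr/sbin/nginx');
    INSERT INTO listening_ports VALUES (101, 22, '0.0.0.0');
    INSERT INTO listening_ports VALUES (202, 443, '0.0.0.0');
    """
)

rows = conn.execute(QUERY).fetchall()
print(rows)  # [('sshd', '/usr/sbin/sshd', 22)]
conn.close()
```

Even a query this small needs a join and knowledge of the table names, which is exactly the barrier that natural language querying is meant to remove.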

Use cases for Guardicore AI

So what kind of value do our customers stand to gain from a generative AI–powered feature like Guardicore AI? Three use case examples include:

Assisting with compliance

Responding to threats

Assessing vulnerability

Assisting with compliance

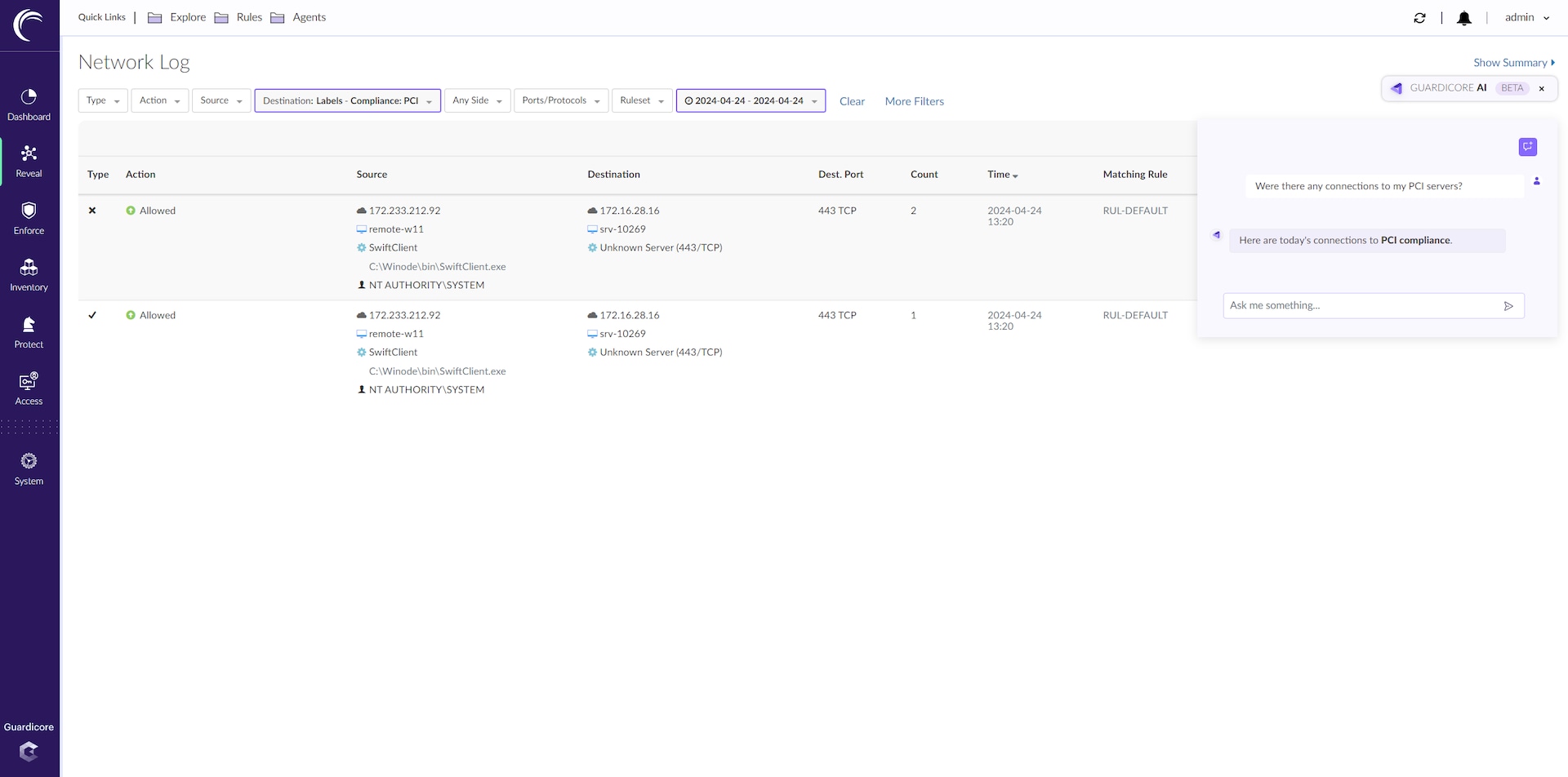

Many compliance mandates, such as PCI DSS, require any systems that store, process, or transmit sensitive data (such as payment card data) to be isolated from the rest of the IT environment. This requirement is logical and provides clear security benefits, but it can be hard to implement. Scoping out every relevant system, and then attesting compliance, is especially challenging in organizations with large, sophisticated networks. Collecting and correlating relevant information is a time-consuming step, and security professionals need to be able to access relevant data with ease.

Guardicore AI can help. You’ll be able to use natural language prompts to quickly pull up relevant, critical information about connections to PCI-labeled servers across your entire network (Figure 4). This data can also be viewed historically, which means you can immediately attest compliance for a specific period. This is a huge time-saver for any team that has to attest compliance.
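To illustrate the kind of check involved, the sketch below filters historical flow records for connections that reach PCI-labeled systems from outside the PCI scope during a given attestation period. It is a simplified illustration under stated assumptions: the record fields (`time`, `src_label`, `dst_label`, `dst_port`) and the sample data are hypothetical, and real scoping rules are more nuanced than a single label comparison.

```python
from datetime import datetime

# Hypothetical historical flow records. Labels and fields are illustrative.
flows = [
    {"time": datetime(2024, 3, 2, 10, 0), "src_label": "PCI", "dst_label": "PCI", "dst_port": 5432},
    {"time": datetime(2024, 3, 9, 14, 30), "src_label": "Dev", "dst_label": "PCI", "dst_port": 22},
    {"time": datetime(2024, 4, 1, 8, 15), "src_label": "Dev", "dst_label": "PCI", "dst_port": 22},
    {"time": datetime(2024, 3, 15, 9, 0), "src_label": "Dev", "dst_label": "Web", "dst_port": 443},
]


def out_of_scope_connections(records, start, end, scope_label="PCI"):
    """Return connections into scope_label from outside it, within [start, end)."""
    return [
        r
        for r in records
        if start <= r["time"] < end
        and r["dst_label"] == scope_label
        and r["src_label"] != scope_label
    ]


# Attestation period: March 2024 (end bound is exclusive).
violations = out_of_scope_connections(
    flows, start=datetime(2024, 3, 1), end=datetime(2024, 4, 1)
)
for v in violations:
    print(v["time"], v["src_label"], "->", v["dst_label"], "port", v["dst_port"])
```

An empty result for the period supports the attestation, while any rows returned point to connections that need review.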

Responding to threats

When an organization suspects that it is under attack, time becomes the most valuable resource. It becomes a race against the clock to find the threat and begin analysis and remediation. Typically, this requires using multiple point products, and rich data and technical expertise must be promptly available. Security professionals need a better way to investigate and respond to incidents quickly, and from a single platform.

Guardicore AI can help here, as well. You can use natural language prompts to investigate suspicious traffic, or to surface any process communications that malicious actors may have abused, for example over commonly targeted protocols such as Secure Shell (SSH) or Remote Desktop Protocol (RDP) (Figure 5).

Assessing vulnerability

Attackers are constantly looking for security gaps in IT systems, and there will always be new vulnerabilities that security teams need to manage, such as Log4j and XZ Utils. As a result, effective vulnerability management is essential to proactively secure increasingly complex IT environments. There are existing solutions available to address this, but almost none of them are Zero Trust–oriented, nor do they leverage microsegmentation to secure potentially vulnerable systems.

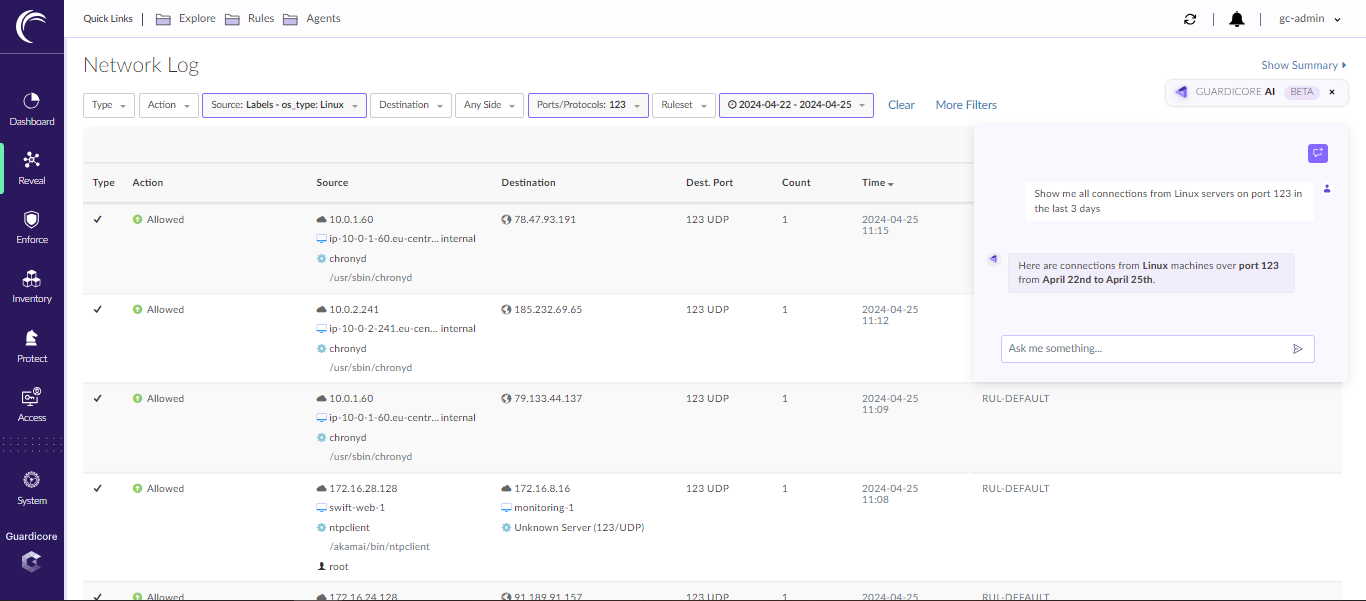

As with general threat response, time is the most important factor when responding to a new vulnerability, and Guardicore AI is here to help. For example, if a newly announced vulnerability is known to affect Linux machines, Guardicore AI can help your teams assess their exposure. With just one command in natural language, the chatbot can surface all connections made to all Linux machines in your network (Figure 6).

Policy can then be quickly applied to these machines, acting as a virtual patch, and protecting your systems until a permanent patch or fix is distributed. This is just one more example of the security value that can come from leveraging generative AI to facilitate operations and enable Zero Trust.
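The two steps above, surfacing connections to Linux machines and then drafting policy for them, can be sketched roughly as follows. Everything here is hypothetical: the asset inventory, the connection tuples, and the rule dictionaries are invented for illustration and do not represent the Akamai Guardicore Segmentation policy format or API.

```python
# Hypothetical asset inventory (asset name -> operating system).
assets = {"web-01": "linux", "db-01": "linux", "hr-pc-7": "windows"}

# Hypothetical observed connections as (source, destination, port).
connections = [
    ("hr-pc-7", "web-01", 443),
    ("web-01", "db-01", 5432),
    ("db-01", "hr-pc-7", 3389),
    ("hr-pc-7", "db-01", 22),
]


def connections_to_os(conns, inventory, os_name):
    """Keep only connections whose destination runs the given OS."""
    return [c for c in conns if inventory.get(c[1]) == os_name]


def draft_block_rules(conns, port):
    """Draft one illustrative block rule per destination seen on the port."""
    targets = sorted({dst for _, dst, p in conns if p == port})
    return [{"action": "block", "destination": dst, "port": port} for dst in targets]


linux_conns = connections_to_os(connections, assets, "linux")
# Assume, for this example, that the vulnerable service listens on port 22.
rules = draft_block_rules(linux_conns, port=22)
print(rules)  # [{'action': 'block', 'destination': 'db-01', 'port': 22}]
```

In practice a team would review drafted rules before enforcing them, since blocking a port outright can interrupt legitimate administration traffic.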

Conclusion

One of the difficulties of implementing Zero Trust in a large enterprise environment is the amount of work that’s required to achieve that protected state. It involves many manual tasks and relies on constant monitoring and administration across different infrastructures.

We believe that the future of achieving Zero Trust outcomes is one in which most of the manual work is assisted by a generative AI feature or application, which will remove one of the biggest barriers to adopting Zero Trust. Our goal is to provide a platform in which segmentation and other necessary components of Zero Trust require less manual effort to operationalize.

Learn more

Learn more about our AI-powered Zero Trust platform.