Lightning-Fast Requests with Early Data

Introduction

We are proud to announce that TLS 1.3 Early Data (also known as 0-RTT) is now available for both HTTP/2 over TCP and HTTP/3 over QUIC.

This new performance feature helps to reduce the time to first byte (TTFB) metric when loading your web pages via Akamai, in turn improving Google Web Vitals such as First Contentful Paint and Largest Contentful Paint (LCP), which helps optimize SEO and search rankings.

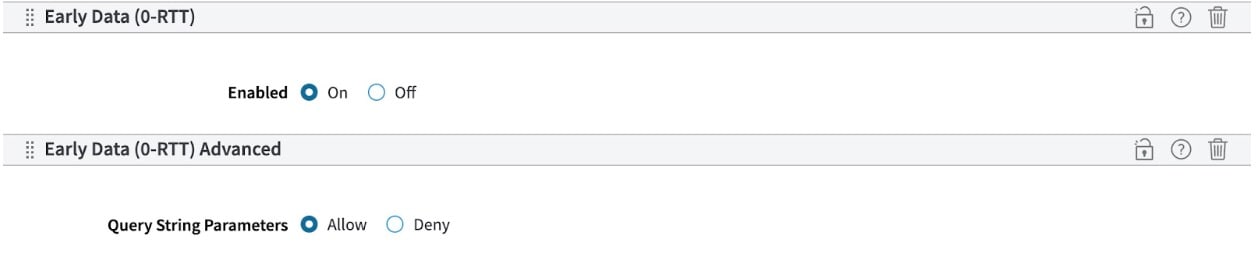

You can easily enable Early Data today by adding the new Early Data (0-RTT) behavior to your property configuration (via Property Manager or our API), which automatically enables the feature for both TCP and QUIC.

For security reasons, the default behavior only allows GET requests without URL query string parameters. However, you can add the optional Early Data (0-RTT) Advanced behavior to explicitly allow query string parameters for specific URL paths, endpoints, or client types.

Why do you need Early Data?

When it comes to web performance, network latency (not bandwidth) remains a key constraining factor. It is crucial to deliver a web page’s HTML content to the browser as soon as possible (TTFB) so it can begin to discover and request the other resources it needs (JavaScript, CSS, images, etc.). As such, TTFB is a key predictor for higher-level metrics like LCP, which Google uses to influence search rankings.

Akamai has traditionally offered many different options to minimize latency, including having a huge number of edge servers close to end users, providing highly flexible caching at the edge, and compressing resources with advanced algorithms to make them as small as possible.

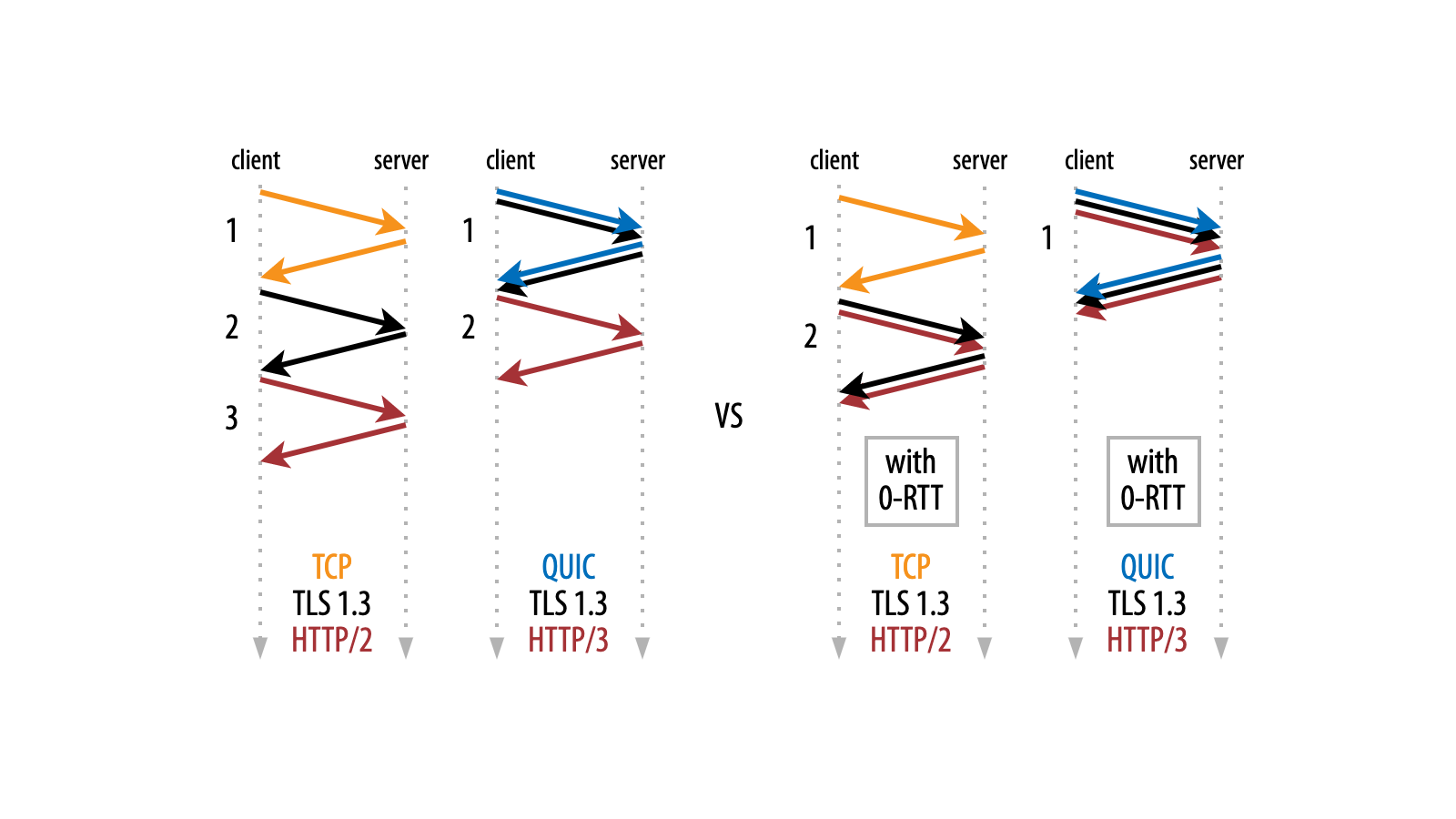

However, these optimizations mainly come into play after the end user has established an encrypted connection to the nearest edge server. This connection setup by itself can also take a long time; for example, three network round trips for the older TCP + TLS 1.2 or two round trips for the newer TCP + TLS 1.3.

This is one of the main reasons the QUIC protocol, which underpins HTTP/3, was invented. The QUIC protocol can combine both the transport-level and encryption-level handshakes into a single round trip, minimizing the delay.

Now, with the new Early Data feature, we can shave one additional round trip off both the TCP + TLS 1.3 and QUIC handshakes, lowering TTFB even further (Figure 1). In the QUIC + HTTP/3 case, this removes the handshake overhead entirely, leaving zero round trips of connection setup (which is why the feature is often also called 0-RTT).

While the Akamai platform’s massively distributed nature keeps latency as low as possible, there are still cases where saving even one round trip matters. For example, for one of our CDN configurations, 34% of connections still experience a round-trip time (RTT) of more than 50 ms (12% even experience 100+ ms). In these instances, enabling this feature can deliver a free TTFB improvement, and potentially an LCP boost, for a lot of users.
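To make the round-trip arithmetic concrete, here is a minimal sketch that multiplies the setup round trips described above by the RTT values from the example. The function and table names are our own for illustration, and the model ignores everything except handshake round trips (no server processing time, no packet loss).

```python
# Round trips of connection setup before the first HTTP request can be sent,
# taken from the handshake descriptions in the text above.
SETUP_ROUND_TRIPS = {
    "TCP + TLS 1.2": 3,
    "TCP + TLS 1.3": 2,
    "TCP + TLS 1.3 + Early Data": 1,
    "QUIC": 1,
    "QUIC + Early Data": 0,
}


def setup_delay_ms(stack: str, rtt_ms: float) -> float:
    """Time spent on connection setup before the request leaves the client."""
    return SETUP_ROUND_TRIPS[stack] * rtt_ms


if __name__ == "__main__":
    # Print the setup delay for the two RTT values mentioned in the text.
    for rtt_ms in (50, 100):
        for stack in SETUP_ROUND_TRIPS:
            delay = setup_delay_ms(stack, rtt_ms)
            print(f"RTT {rtt_ms:>3} ms | {stack:<27} | {delay:>5.0f} ms")
```

For a user on a 100 ms connection, this simple model puts the setup delay at 200 ms for TCP + TLS 1.3 and 100 ms once Early Data is enabled.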

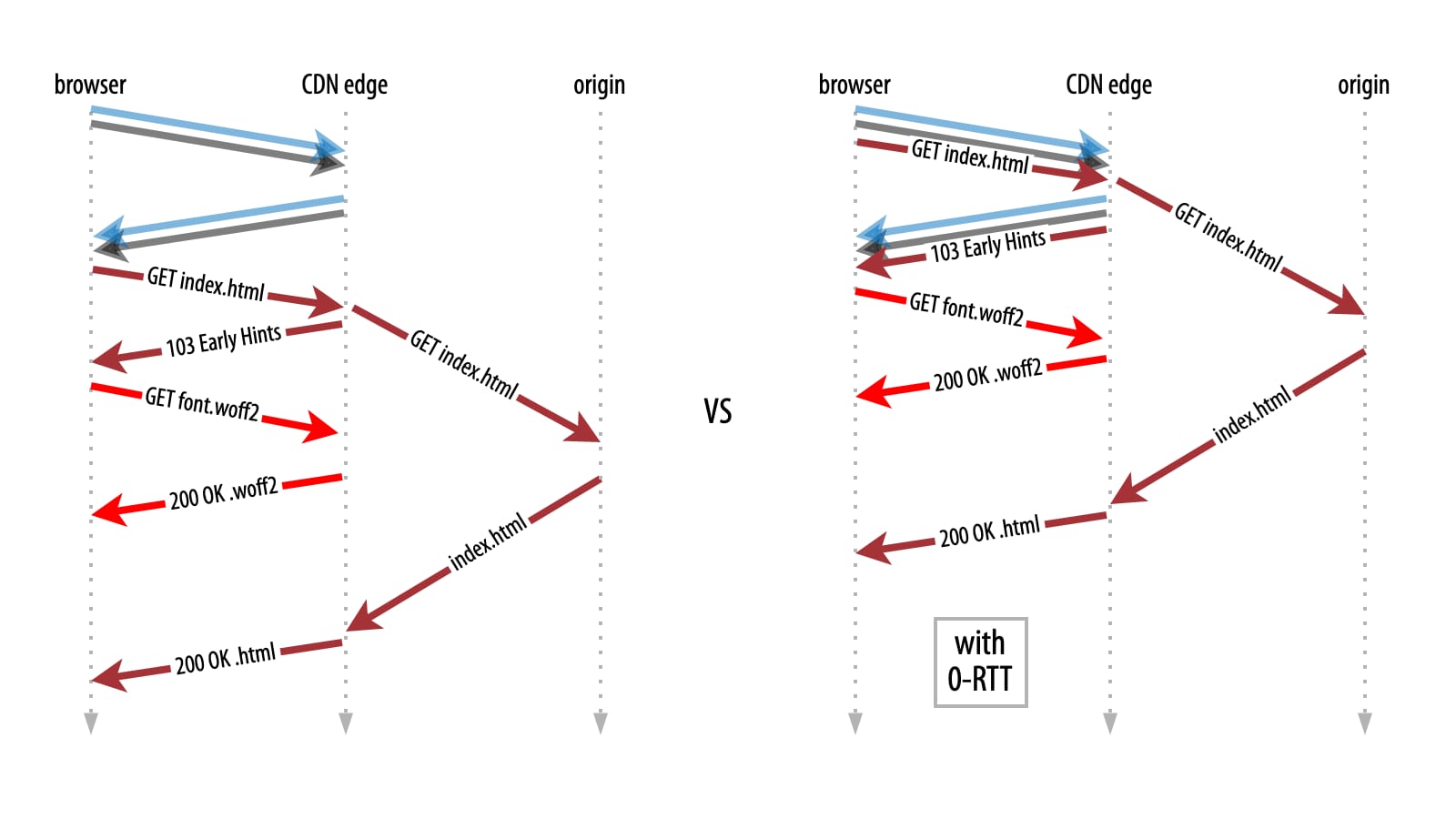

Early Data complements Early Hints

Finally, Early Data is also highly complementary to Early Hints, another cutting-edge performance feature that Akamai released earlier this year. With Early Hints, the Akamai edge can signal to the browser that it can start preloading the resources it will need for the page load, even while still waiting for the page’s HTML to be downloaded from the origin.

Normally, these Early Hints are sent in the first round trip after the handshake, but they work just as well in response to an Early Data request. This gets them to the browser one full RTT earlier, allowing more time for the hinted resources to be downloaded from the Akamai edge into the browser’s cache by the time the HTML arrives.

The best part: This happens automatically when you enable both the Early Hints and Early Data behaviors in your property’s config.

How does Early Data work?

You might wonder: If this faster 0-RTT handshake is possible, why don’t we just do it all the time? Why do we even need the slower handshake variants? We need them because TLS 1.3 Early Data is a prime example of a performance vs. security trade-off.

To understand this, imagine the browser is connecting to a new server it has never seen before and tries to load its home page (e.g., www.example.com/index.html). Conceptually, the browser could send the HTTP GET for the page in Early Data for optimal performance, but crucially, it wouldn’t be able to encrypt this request at all. This is because the encryption keys are negotiated during the TLS handshake, which happens concurrently with the Early Data request. As an industry, we’ve been moving away from unencrypted HTTP for more than a decade, so even a single unencrypted request was deemed unacceptable by the creators of TLS.

Now you might wonder: How then can encrypted Early Data ever work, if we only get the encryption keys after the TLS handshake? Won’t that always be too late?

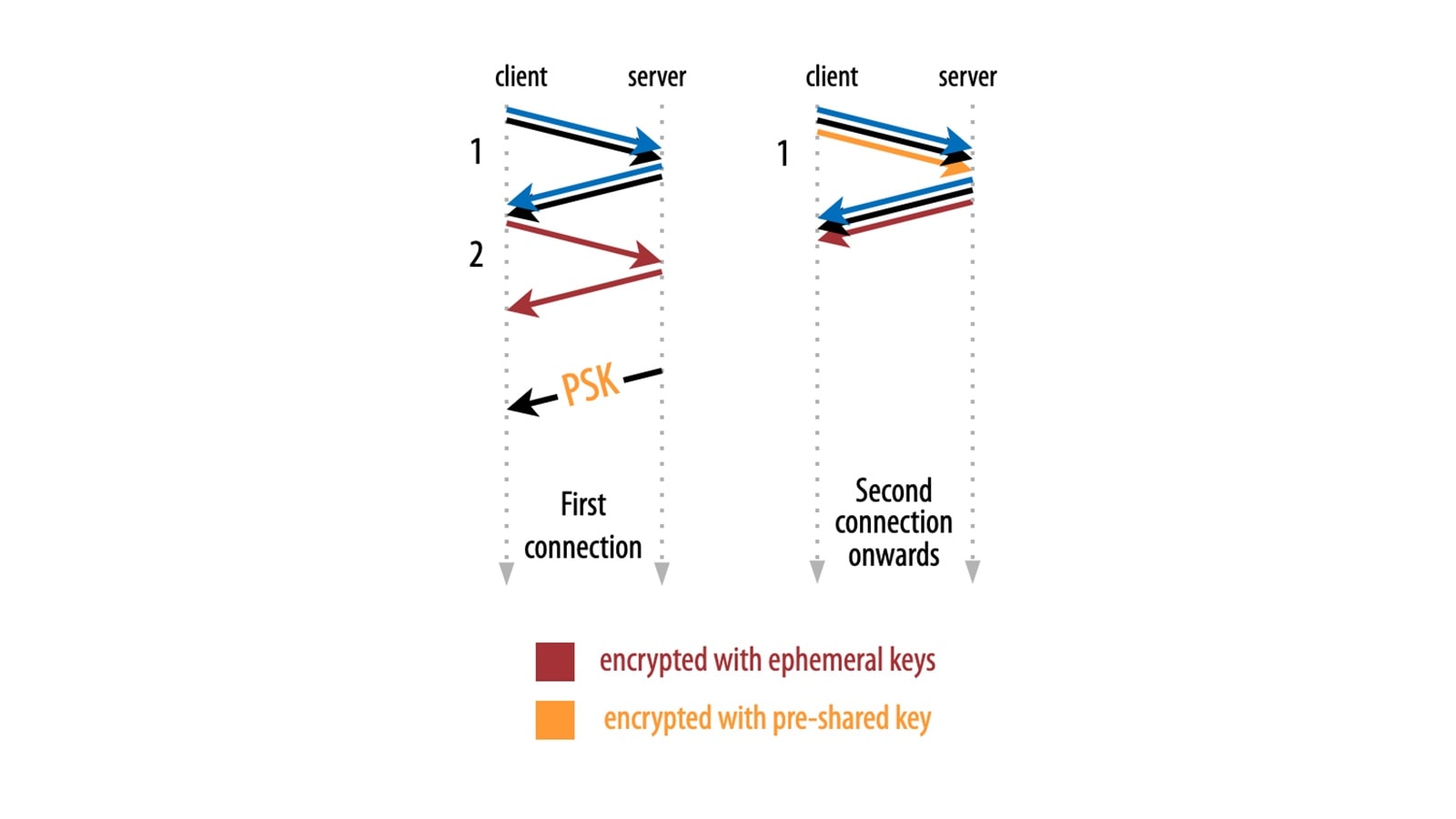

The solution is as simple as it is brilliant: We can re-use encryption keys from a previous connection to encrypt the Early Data request (Figure 3).

As such, the first time a client connects to a new server deployment, the client won’t be able to use Early Data. Instead, it uses the full/slower handshake to establish the encrypted session. Once this is done, however, the client and server can safely negotiate additional encryption metadata to be used in future connections.

Pre-shared keys

This metadata includes a so-called pre-shared key (PSK), which the client stores and can then use to encrypt an Early Data request at the start of the next connection to the same domain. Crucially, only the initial 0-RTT request is encrypted with the “old” PSK.

All of the other data on the new connection (including the response to the 0-RTT request) is encrypted using newly negotiated “ephemeral” keys in the new TLS 1.3 handshake. This is important for Perfect Forward Secrecy, ensuring that data stays safe even if your private keys ever get compromised.
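The client-side logic described above can be sketched as a toy model. This is not how any real TLS stack is structured: `TicketCache`, `StoredTicket`, and `plan_connection` are hypothetical names, the PSK is an opaque placeholder, and no actual cryptography happens. It only illustrates when a client can and cannot attempt 0-RTT.

```python
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class StoredTicket:
    psk: bytes         # hypothetical stand-in for the pre-shared key material
    expires_at: float  # the stored metadata only has a limited lifetime


class TicketCache:
    """Toy client-side store of resumption metadata, keyed by domain."""

    def __init__(self) -> None:
        self._tickets: Dict[str, StoredTicket] = {}

    def store(self, domain: str, psk: bytes, lifetime_s: float,
              now: Optional[float] = None) -> None:
        """Remember the PSK negotiated after a completed handshake."""
        now = time.time() if now is None else now
        self._tickets[domain] = StoredTicket(psk, now + lifetime_s)

    def can_send_early_data(self, domain: str,
                            now: Optional[float] = None) -> bool:
        """True only if an unexpired PSK from a previous connection exists."""
        now = time.time() if now is None else now
        ticket = self._tickets.get(domain)
        return ticket is not None and now < ticket.expires_at


def plan_connection(cache: TicketCache, domain: str,
                    now: Optional[float] = None) -> str:
    """Describe which handshake variant the client would use."""
    if cache.can_send_early_data(domain, now):
        return "0-RTT: first request uses the stored PSK, the rest uses new ephemeral keys"
    return "full handshake: no usable PSK for this domain yet"
```

In this model, the first call to `plan_connection` for a domain always yields the full handshake. Only after `store` has been called, and only until the lifetime runs out, does the 0-RTT path become available.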

Session tickets

As you might expect, there is a lot more technical complexity to the underlying mechanisms. For example, TLS uses a concept called Session Tickets so that only the client has to store the pre-shared metadata, removing distributed memory requirements at the server.

This, in turn, requires a new private Session Ticket Encryption Key (STEK) at the server deployment to encrypt the session tickets, which means the tickets can only have a limited lifetime, and so on.

Describing this process in full is beyond the scope of this blog post, but interested readers can find more details on our TechDocs page.

Luckily, all this complexity is mostly hidden behind Akamai’s battle-tested implementation and handled automatically when you add the Early Data (0-RTT) behavior to your property.

Full control over replay attacks

As we mentioned above, Early Data is one big performance vs. security trade-off, and that goes beyond how to encrypt the 0-RTT requests. This imposes some limitations and nuances on how the feature can be used in practice. A good example of why additional constraints are needed is the so-called replay attack.

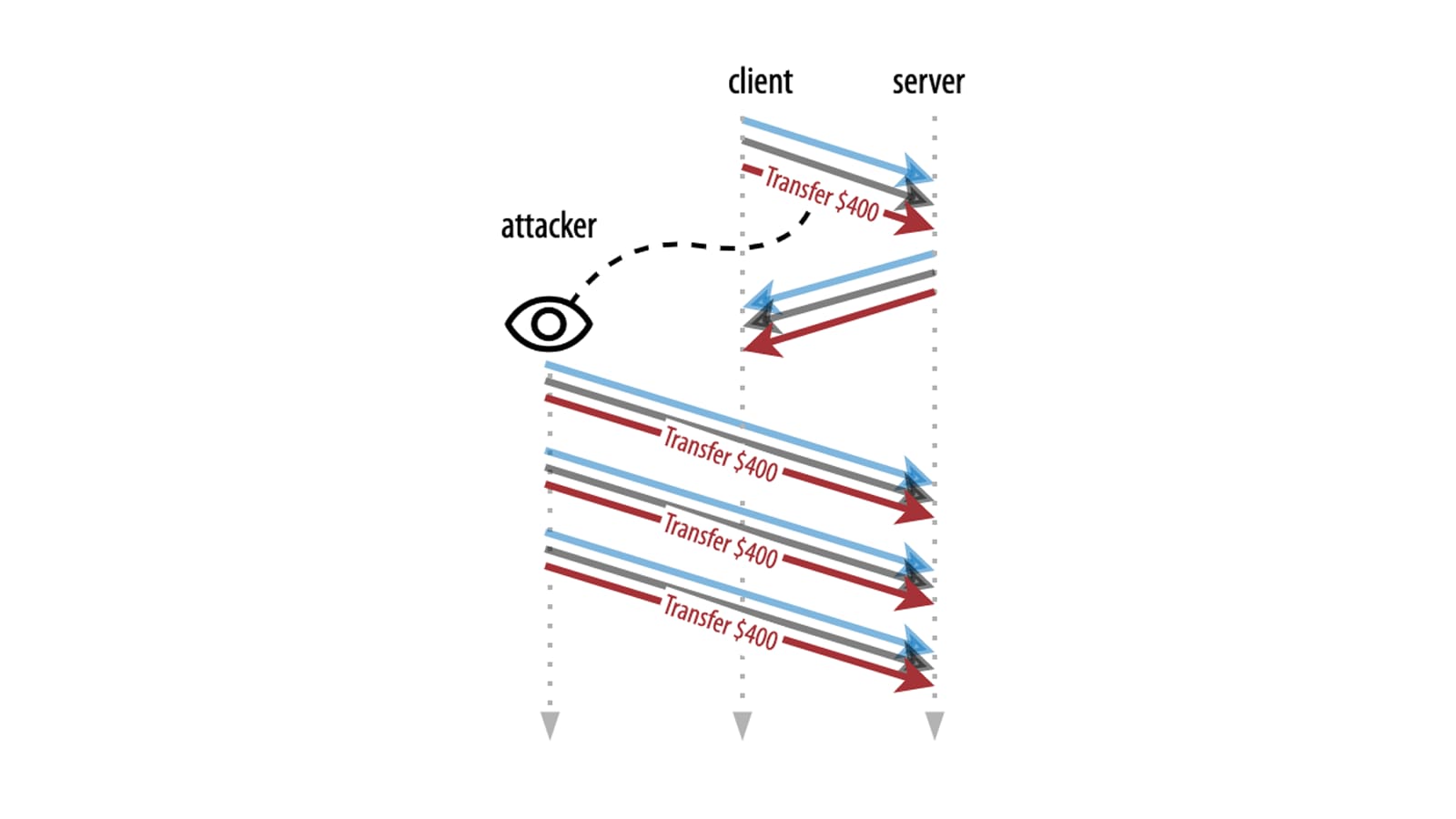

As discussed, Early Data is fully encrypted with the PSK: Attackers can neither read nor change anything inside the 0-RTT packets. However, what they can do is make copies of the 0-RTT packets and send those copies to the CDN servers as well (Figure 4).

Ideally, the edge servers would only allow each unique session ticket to be used once, but that’s difficult and costly in a highly distributed environment (and would undo much of the benefit). As such, the copied 0-RTT packets are processed as normal, which means attackers can cause Early Data requests to unintentionally be executed multiple times.

For a simple GET to www.example.com/index.html, this probably doesn’t matter much, but imagine Early Data carrying a request that changes some permanent state (e.g., causing a write to a database). The typical (though quite unrealistic) example here is someone sending a payment order/funds transfer request in 0-RTT. If an attacker replayed that request, it could cause the payment to happen multiple times instead of just once.

However, it doesn’t have to be that impactful to be an abuse vector; even incrementing a visitor counter or replaying an outdated location update could have real consequences in highly targeted attacks.

Replay attack prevention

Conceptually, origin back ends and APIs themselves should already have replay protection built in, as duplicated requests can occur even outside of Early Data in normal settings. For example, some browsers will automatically retry requests if they think they might have timed out at the server.

However, many (dare I say most?) deployments don’t actually deal with duplicated requests at all, which can lead to a potential security blind spot. Indeed, it’s mostly some critical APIs (like payment processing) that provide logic to prevent replays, but even then mistakes sometimes still happen (and people get two pizzas delivered instead of one!).
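One common way for a back end to tolerate duplicated requests is to require a unique key per operation and to ignore any key it has already seen. The sketch below assumes that technique (often called an idempotency key); it is not something this post prescribes, and `PaymentService` and its fields are hypothetical. A production version would need shared, persistent storage rather than an in-memory set.

```python
from typing import Set


class PaymentService:
    """Toy origin handler that ignores operations it has already processed."""

    def __init__(self) -> None:
        self.total_charged = 0
        self._seen_keys: Set[str] = set()

    def charge(self, idempotency_key: str, amount: int) -> str:
        # A replayed request carries the same key as the original,
        # so it is detected here and has no further effect.
        if idempotency_key in self._seen_keys:
            return "duplicate ignored"
        self._seen_keys.add(idempotency_key)
        self.total_charged += amount
        return "charged"
```

With this in place, a replayed copy of the same order is recognized and the customer is charged once, no matter how many times the request arrives.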

Replay attack mitigation

This leaves CDNs in a weird situation: We can’t just forward all the 0-RTT requests upstream to the origin because the back end might be vulnerable to replay attacks. We also can’t just prevent the replay attack at the edge, because that’s too costly/difficult. As such, we have no choice but to try and mitigate the attack as much as possible.

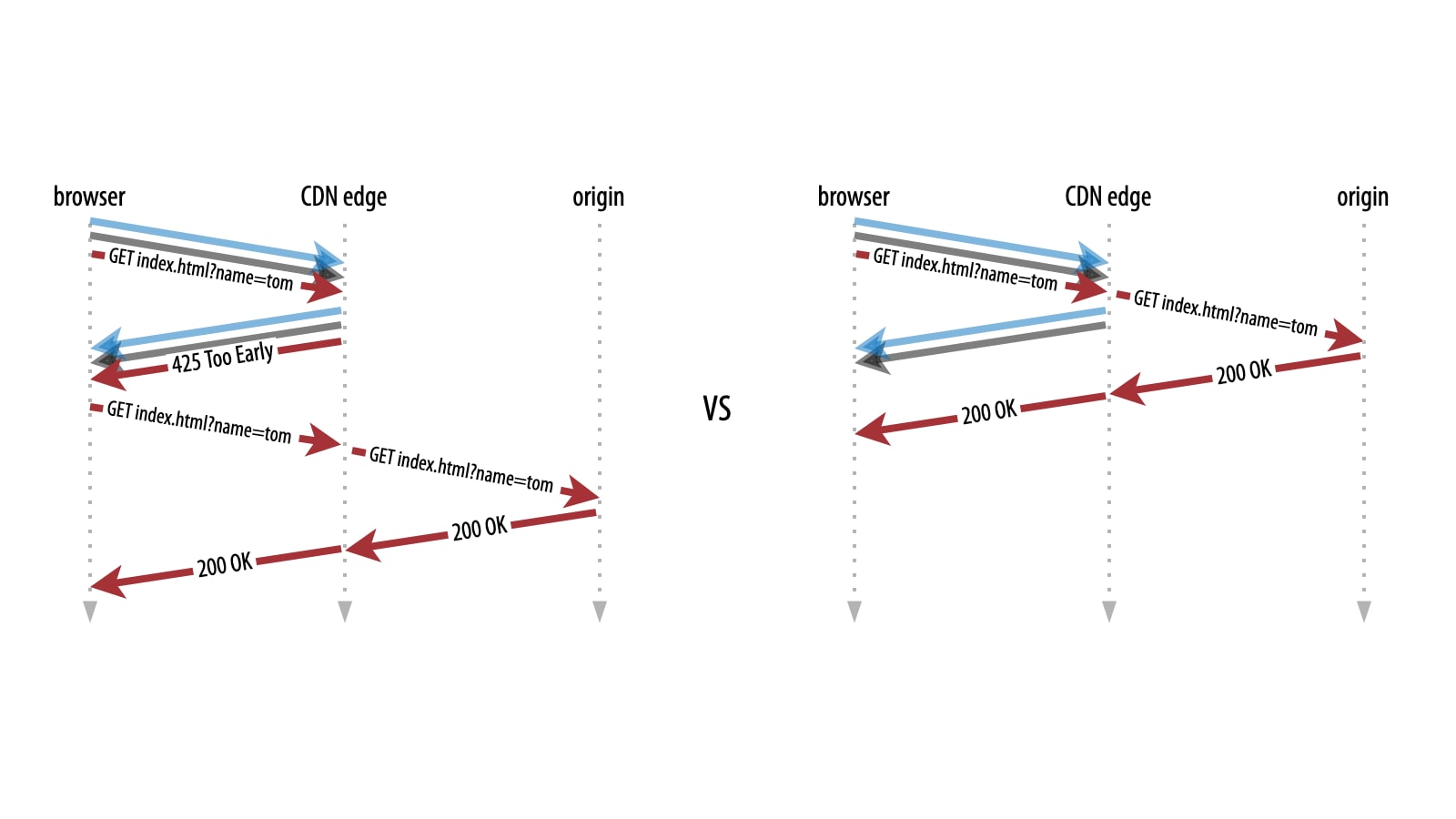

One common approach is to limit 0-RTT requests to only those operations that are most unlikely to change permanent state. Typically, this means only allowing HTTP GET requests without URL query parameters, as those should be safe in most environments (so www.example.com/index.html is possible, but www.example.com/index.html?name=tom or a POST will not be allowed in Early Data).

This is also how Akamai’s default Early Data behavior works: the server will send back a 425 Too Early HTTP response for noncompliant requests so the client can retry them after the full handshake is done (Figure 5, left).
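The default rule described above can be sketched in a few lines. This is an illustration of the policy only, not Akamai’s implementation; the function names are our own.

```python
from typing import Optional
from urllib.parse import urlsplit

TOO_EARLY = 425  # HTTP status code "Too Early"


def allowed_in_early_data(method: str, url: str) -> bool:
    """Default rule from the text: only GET requests without a query string."""
    return method.upper() == "GET" and urlsplit(url).query == ""


def early_data_status(method: str, url: str) -> Optional[int]:
    """Return 425 for a noncompliant 0-RTT request, or None to process it."""
    return None if allowed_in_early_data(method, url) else TOO_EARLY
```

A client that receives the 425 response simply retries the same request once the full handshake has completed, so the request still succeeds, just without the 0-RTT speedup.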

However, in many production setups, these restrictions are simply too harsh, preventing a large number of use cases for 0-RTT (e.g., API calls). They are also unfair to those customers that do properly protect their origins and endpoints against replay attacks.

This is why we decided to introduce a separate Early Data (0-RTT) Advanced behavior that allows you to manually enable GET requests with query string parameters (and will soon also allow other HTTP methods such as POST and PUT) for select paths and endpoints where you know for sure the back end is safe from replay shenanigans (Figure 5, right). This way, you have full control over the performance-security trade-off and can mitigate the risks in a fine-grained way.
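Conceptually, the Advanced behavior extends the default rule with an allowlist. The sketch below shows one way to think about it; the path prefixes and the function name are hypothetical, and the real behavior is configured in your property rather than written as code.

```python
from typing import Iterable
from urllib.parse import urlsplit

# Hypothetical allowlist: path prefixes whose back ends are known to be replay-safe.
REPLAY_SAFE_PREFIXES = ("/api/search", "/api/catalog/")


def allowed_with_advanced_rules(
    method: str,
    url: str,
    safe_prefixes: Iterable[str] = REPLAY_SAFE_PREFIXES,
) -> bool:
    """GET without a query string is always allowed in 0-RTT;
    GET with a query string only on explicitly allowlisted paths."""
    if method.upper() != "GET":
        return False  # other methods are not yet allowed in Early Data
    parts = urlsplit(url)
    if parts.query == "":
        return True
    return parts.path.startswith(tuple(safe_prefixes))
```

Keeping the allowlist explicit and narrow means that a new endpoint stays on the safe default until someone has confirmed that its back end handles duplicated requests correctly.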

Conclusion

Early Data is a powerful new performance feature for both HTTP/2 over TCP and HTTP/3 over QUIC, which allows you to save a full round trip on most connections to the Akamai edge. It also works nicely together with other options such as Early Hints to get data to your users’ browsers as soon as possible.

However, its raw power also makes it complex, requiring you to make a conscious decision on how to balance the impressive performance gains vs. the potential security risks. Luckily, Akamai allows you unprecedented control over your 0-RTT configuration, ensuring only hardened back ends will receive Early Data requests, and empowering your developers to build both lightning-fast and fully secure applications on your tech stack.

Stay tuned for further updates and improvements to our Early Data (0-RTT) Advanced behavior, which will add support for other HTTP methods besides GET in 0-RTT requests.