The Web Scraping Problem, Part 3: Protecting Against Botnets

Protecting against web scraping

Defending against scrapers requires a sound bot management strategy. Botnets that are designed for scraping come in various levels of sophistication, all based on the botnet’s ability to:

Spread the load to a wide number of IP addresses. The most sophisticated botnets leverage several hundreds of thousands of IP addresses from residential and mobile internet service providers (ISPs).

Randomize their signatures to avoid being uniquely identified. This makes it more challenging for defenders to define a signature to identify and block the whole activity. The most sophisticated botnets will take great care to reproduce a legitimate browser signature, making it impossible for the defender to block the activity without significant risk of false positives.

Blend into the legitimate traffic pattern. The most sophisticated botnets will only be active during typical daytime traffic, making them less noticeable to support staff who are monitoring the traffic.

The spectrum of botnet sophistication

As we’ve seen, data extraction service providers have an incentive to support business intelligence services, since those services can’t fulfill their mission without the right data. In this case, one can expect the most sophisticated botnets, with broad load distribution through residential and mobile ISPs, well-defined fingerprints, and traffic that blends into the legitimate traffic pattern.

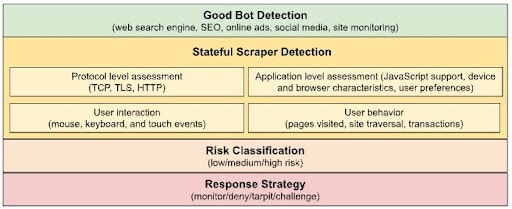

Most websites will see a wide spectrum of botnet sophistication. To defend consistently against all kinds of botnets, a bot management product needs several detection methods that together identify both the simplest and the most sophisticated botnets. To achieve this goal, the product must provide:

Good bots identification

Strong scraper detection

Risk classification

Response strategies

Good bots identification

Although bots that support SEO, web search engines, and online advertising are scrapers by definition, they are vital to the site's operations. A bot management solution must be able to recognize these good bots with high accuracy to ensure content is served promptly, while also recognizing botnets that are attempting to impersonate them.
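One widely used way to tell a genuine crawler from an impersonator is forward-confirmed reverse DNS: look up the hostname for the client IP, check that it belongs to a domain the crawler operator controls, then resolve that hostname and confirm it maps back to the same IP. The sketch below illustrates that general technique and is not a description of any particular product. The suffix list and the function names are assumptions chosen for the example.

```python
import socket
from typing import Callable, Sequence

# Illustrative allow-list (an assumption for this sketch). Each crawler
# operator publishes its own verification guidance; consult it before relying
# on any suffix.
TRUSTED_SUFFIXES = (".googlebot.com", ".search.msn.com")


def reverse_lookup(ip: str) -> str:
    """Return the PTR hostname for an IP address."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> Sequence[str]:
    """Return the IPv4 addresses a hostname resolves to (IPv4 only)."""
    return socket.gethostbyname_ex(hostname)[2]


def is_verified_good_bot(
    ip: str,
    suffixes: Sequence[str] = TRUSTED_SUFFIXES,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], Sequence[str]] = forward_lookup,
) -> bool:
    """Forward-confirmed reverse DNS check for a client claiming to be a good bot."""
    try:
        hostname = reverse(ip).lower().rstrip(".")
        # Step 1: the PTR record must sit under a trusted crawler domain.
        if not hostname.endswith(tuple(suffixes)):
            return False
        # Step 2: the hostname must resolve back to the original IP, which
        # defeats attackers who control their own PTR records.
        return ip in forward(hostname)
    except OSError:
        # DNS failures (socket.herror, socket.gaierror) mean "not verified".
        return False
```

The resolvers are passed in as parameters so the check can be exercised without network access. In practice the result would be cached, since DNS lookups on every request would slow down the good bots the check is meant to serve promptly.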

Strong scraper detection

Strong scraper detection consists of protocol-level assessment, application-level assessment, user interaction, and user behavior.

Protocol-level assessment (evaluate how the client establishes the connection with the server at different layers of the OSI model: TCP, TLS, and HTTP): This verifies that the negotiated parameters align with those expected from the most common web browsers and mobile applications.

Application-level assessment (determine whether the client can run some business logic written in JavaScript): When the client runs JavaScript, it will collect the device and browser characteristics and user preferences. These data points will be compared and cross-checked against the protocol-level data to verify consistency.

User interaction (ensure that a human interacts with the client through standard peripherals like a touch screen, keyboard, and mouse): A lack of interaction or abnormal interaction is typically associated with bot traffic.

User behavior (monitor the user journey through the website): Botnets typically go after specific content, resulting in significantly different behavior than what’s observed in legitimate traffic.
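The four assessments above can be sketched as a single function that inspects one session and returns the anomalies it finds. This is a deliberately simplified illustration: every field name, anomaly label, and threshold below is hypothetical, and a real product would collect far richer signals.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClientSignals:
    """Hypothetical per-session signals, one group per assessment."""
    user_agent_family: str       # browser family claimed in the User-Agent header
    tls_fingerprint_family: str  # browser family inferred from the TLS handshake
    ran_javascript: bool         # whether the client executed the JavaScript probe
    js_reported_family: str      # browser family reported by the JavaScript probe
    input_events: int            # touch, keyboard, and mouse events observed
    pages_visited: int           # pages requested in the session
    target_pages_visited: int    # of those, pages holding the sought-after content


def find_anomalies(s: ClientSignals) -> List[str]:
    anomalies: List[str] = []
    # Protocol level: the handshake should match the browser the client claims to be.
    if s.tls_fingerprint_family != s.user_agent_family:
        anomalies.append("protocol_mismatch")
    # Application level: the client should run JavaScript, and what the probe
    # reports should be consistent with the protocol-level claim.
    if not s.ran_javascript:
        anomalies.append("no_javascript")
    elif s.js_reported_family != s.user_agent_family:
        anomalies.append("application_mismatch")
    # User interaction: events are collected by the probe, so their absence is
    # only meaningful when JavaScript actually ran.
    if s.ran_javascript and s.input_events == 0:
        anomalies.append("no_interaction")
    # User behavior: long sessions spent almost entirely on targeted content
    # (the 20-page and 90% thresholds are arbitrary placeholders).
    if s.pages_visited >= 20 and s.target_pages_visited / s.pages_visited > 0.9:
        anomalies.append("targeted_crawl")
    return anomalies
```

For example, a session that claims to be Chrome but presents a scripting-library TLS handshake, never runs JavaScript, and requests 200 pages of which 198 are product pages would be flagged with `protocol_mismatch`, `no_javascript`, and `targeted_crawl`.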

Risk classification

Risk classification provides a deterministic and actionable classification (low, medium, or high risk) of the traffic based on the anomalies found during the evaluation. Traffic classified as high risk must have a low false positive rate.
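As a minimal sketch of what "deterministic" can mean here, the classifier below maps a set of anomaly labels to a fixed score and then to one of the three risk levels, so the same anomalies always produce the same classification. The anomaly names, weights, and thresholds are assumptions made up for the example; in practice they would be tuned so that the high-risk bucket keeps a low false positive rate.

```python
from typing import Iterable

# Hypothetical weights: stronger evidence of automation gets a larger weight.
ANOMALY_WEIGHTS = {
    "protocol_mismatch": 3,
    "application_mismatch": 3,
    "no_javascript": 2,
    "targeted_crawl": 2,
    "no_interaction": 1,
}

# Hypothetical thresholds separating the three risk levels.
HIGH_RISK_SCORE = 5
MEDIUM_RISK_SCORE = 2


def classify_risk(anomalies: Iterable[str]) -> str:
    """Return 'low', 'medium', or 'high' for a set of anomaly labels."""
    # Deduplicate so a repeated label is not counted twice; unknown labels score 0.
    score = sum(ANOMALY_WEIGHTS.get(name, 0) for name in set(anomalies))
    if score >= HIGH_RISK_SCORE:
        return "high"
    if score >= MEDIUM_RISK_SCORE:
        return "medium"
    return "low"
```

Requiring more than one strong anomaly before a request reaches the high-risk level is one simple way to keep false positives down: a single weak signal, such as a user who has not yet touched the page, stays in the low bucket.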

Response strategies

A set of response strategies is necessary, ranging from simple monitor and deny actions to more advanced techniques, such as a tarpit, which is purposely unresponsive to incoming connections, or various types of challenge actions. CAPTCHA challenges are generally more user-friendly for dealing with possible false positives.
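One way to picture a response strategy is as a policy table from risk level to action, plus an implementation for each action. The sketch below uses a hypothetical policy and models the tarpit as a response that is dripped out very slowly, which is one common way to keep a bot's connection tied up; the names and default delays are assumptions for illustration only.

```python
import time
from enum import Enum
from typing import Callable


class Action(Enum):
    MONITOR = "monitor"      # serve the request and record it
    CHALLENGE = "challenge"  # for example, a CAPTCHA
    TARPIT = "tarpit"        # respond as slowly as possible
    DENY = "deny"            # refuse the request outright


# Hypothetical policy; a site operator would choose their own mapping.
POLICY = {
    "low": Action.MONITOR,
    "medium": Action.CHALLENGE,  # gives a misclassified human a way through
    "high": Action.TARPIT,
}


def choose_action(risk: str) -> Action:
    """Look up the action for a risk level, monitoring anything unrecognized."""
    return POLICY.get(risk, Action.MONITOR)


def tarpit(
    send_chunk: Callable[[bytes], None],
    body: bytes,
    delay_seconds: float = 5.0,
    chunk_size: int = 1,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Send the body a few bytes at a time, pausing before each chunk."""
    for start in range(0, len(body), chunk_size):
        sleep(delay_seconds)
        send_chunk(body[start:start + chunk_size])
```

Routing medium-risk traffic to a challenge rather than a deny reflects the point above: if the classification was a false positive, a human can still get through.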

The following figure shows the high-level architecture of an advanced bot management system that can detect the most sophisticated scrapers.

High-level bot management product architecture


Akamai: Leading the way in scraper detection

Akamai has been developing a brand-new solution. Akamai Content Protector is the next generation of scraper detection, designed to detect most scraping activity and to withstand continuous changes in attack strategies. The technology has been tested by several major online brands, including some that have experienced persistent scraping activity for years. Our new solution has mitigated the effect of scrapers and, in some cases, eliminated the unwanted activity altogether.

Find out more

Learn more about Akamai Content Protector and how it mitigates scrapers.