Improve User Experience with Parallel Execution of HTTP/2 Multiplexed Requests

The HTTP/2 protocol emerged as a beacon of progress compared with its predecessor HTTP/1 — featuring significant performance advantages, such as multiplexing. Although HTTP/3 has taken center stage as the latest iteration, HTTP/2 remains a major player in the web world, with a global adoption rate exceeding 35% among all websites.

What are HTTP/2 multiplexed requests?

Multiplexing refers to the protocol’s ability to carry multiple HTTP requests and responses concurrently over a single TCP connection. It remains one of the most significant HTTP/2 features and a substantial improvement over HTTP/1.1, in which a connection handles only one request at a time, so browsers open several TCP connections in parallel to fetch resources concurrently.
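
As a rough illustration of the idea, the Python sketch below cuts three response bodies into frames tagged with a stream identifier, interleaves them onto one shared sequence (standing in for the single TCP connection), and reassembles them on the receiving side. The function names, the sample bodies, and the round-robin ordering are assumptions made for this example; real HTTP/2 implementations also apply prioritization and flow control.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id, payload, max_frame_size):
    """Cut one response body into (stream_id, chunk) frames."""
    return [
        (stream_id, payload[i:i + max_frame_size])
        for i in range(0, len(payload), max_frame_size)
    ]


def multiplex(responses, max_frame_size):
    """Interleave frames from all streams round-robin onto one connection."""
    per_stream = [
        split_into_frames(stream_id, body, max_frame_size)
        for stream_id, body in responses.items()
    ]
    wire = []
    for round_of_frames in zip_longest(*per_stream):
        wire.extend(frame for frame in round_of_frames if frame is not None)
    return wire


def demultiplex(wire):
    """Reassemble each stream's body from the shared sequence of frames."""
    bodies = defaultdict(bytes)
    for stream_id, chunk in wire:
        bodies[stream_id] += chunk
    return dict(bodies)


# Client-initiated HTTP/2 streams use odd identifiers.
responses = {1: b"<html>...</html>", 3: b"body{margin:0}", 5: b"console.log(1)"}
wire = multiplex(responses, max_frame_size=8)
assert demultiplex(wire) == responses
```

Because every frame carries its stream identifier, the receiver can rebuild each response no matter how the frames were interleaved.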

Implementation on Akamai’s platform

Our customers expect superior web delivery performance when enabling HTTP/2. On Akamai’s platform, we observe that roughly 71% of API requests and 58% of site delivery traffic use HTTP/2 — and 25% of these requests experienced decreased performance before our recent update.

To improve HTTP/2 performance, we changed how requests are distributed at the CPU level: requests multiplexed on one connection are now spread across multiple CPU cores instead of being serialized on a single core. Since rolling out the improvement, we’ve observed that resolving multiplexed HTTP/2 requests in parallel has decreased the turnaround time (TAT), improving performance and the user experience.
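
To see why spreading requests across cores shortens turnaround time, consider the simplified model below. It is not a description of Akamai’s implementation: the function name, the scheduling rule (each request goes to whichever core frees up first), and the 5 ms service times are all illustrative assumptions, not measurements.

```python
import heapq


def turnaround_times(service_times_ms, cores):
    """Completion time of each request when requests that arrive together
    are dispatched, in order, to whichever core becomes free first."""
    free_at = [0.0] * cores  # time at which each core is next idle
    heapq.heapify(free_at)
    finished = []
    for service in service_times_ms:
        start = heapq.heappop(free_at)  # earliest available core
        end = start + service
        finished.append(end)
        heapq.heappush(free_at, end)
    return finished


# Four multiplexed requests, each needing 5 ms of CPU (hypothetical values).
requests_ms = [5.0, 5.0, 5.0, 5.0]
serial = turnaround_times(requests_ms, cores=1)    # [5.0, 10.0, 15.0, 20.0]
parallel = turnaround_times(requests_ms, cores=4)  # [5.0, 5.0, 5.0, 5.0]
```

In the single-core case each request waits for all earlier ones, so the last request finishes after 20 ms; with four cores no request waits behind another.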

Performance benefits of multiplexed HTTP/2 requests

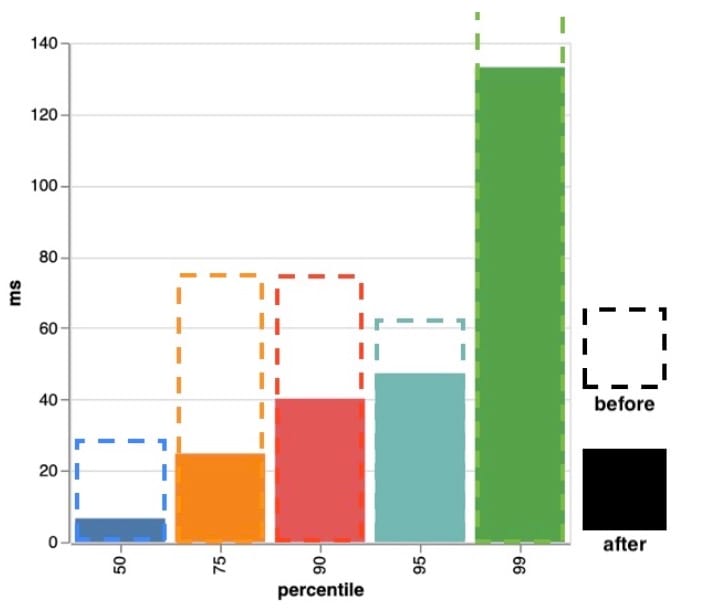

Completed in January 2023, the worldwide rollout marked a significant milestone, effectively eliminating the delays associated with responding to multiplexed HTTP/2 requests. These updates produced substantial performance enhancements, notably reducing the TAT for cacheable GET requests by approximately 28% at the 95th and 99th percentiles (Figure 1).

Lessons learned

The expectation was that processing HTTP/2 requests in parallel would yield substantial performance enhancements — and, as anticipated, it resulted in faster object delivery.

However, we observed that the impact on certain Core Web Vitals (CWV) varied depending on web page characteristics. In some cases, these metrics improved; in others, they worsened.

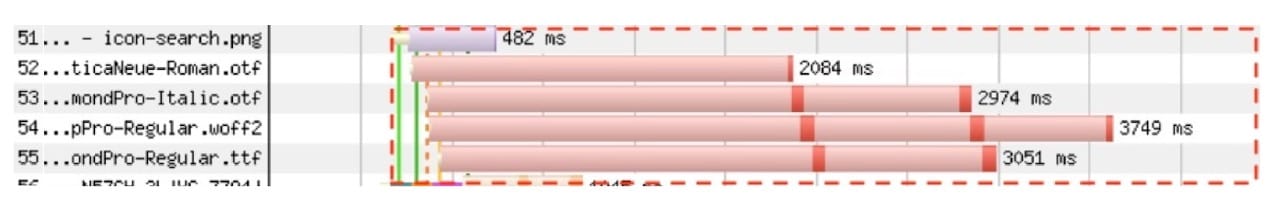

The team also discovered another hidden contributing factor: the HTTP/2 writer frame size was restricted to 4 KB, so any response larger than that limit was sent in 4 KB chunks.

As illustrated in Figure 2, assets 52 to 55 are high priority. Even when they are fully available, they are sent in chunks, interleaved with data from other responses. With this segmented delivery, the browser must wait for the final chunk of each asset before it can fully process the page, which has a noticeable impact on the overall user experience and CWV.

Fig. 2: Example of the chunking effect of small HTTP/2 writer frame size
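
The chunking effect can be approximated with a small model. The sketch below assumes a simple round-robin writer that sends one frame per stream per turn, and reports how many bytes have gone out on the connection by the time each asset’s final byte is written. The asset names and sizes are hypothetical, and the 16 KB comparison value is chosen only for illustration; it is not the setting Akamai adopted.

```python
def last_byte_offsets(sizes, frame_size):
    """Round-robin the streams, one frame per turn, and return the number of
    bytes written to the connection when each stream's final byte goes out."""
    remaining = dict(sizes)
    done_at = {}
    written = 0
    while remaining:
        for name in list(remaining):  # copy: entries are removed while looping
            sent = min(frame_size, remaining[name])
            written += sent
            remaining[name] -= sent
            if remaining[name] == 0:
                done_at[name] = written
                del remaining[name]
    return done_at


KB = 1024
# Hypothetical page: one small render-critical asset and two larger images.
assets = {"critical.css": 16 * KB, "image-a": 64 * KB, "image-b": 64 * KB}

small = last_byte_offsets(assets, frame_size=4 * KB)
large = last_byte_offsets(assets, frame_size=16 * KB)

print(small["critical.css"] // KB)  # 40: complete only after 40 KB on the wire
print(large["critical.css"] // KB)  # 16: complete after its own 16 KB
```

In this model the total number of bytes sent is identical in both runs; only the point at which the critical asset becomes usable changes.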

Results in action

To address these challenges, we:

Made it easier for requests to be sent and received quickly by increasing the default HTTP/2 writer frame size

Set a new value of the HTTP/2 batch size to improve how web content is delivered

Enhanced control over how different types of web data are managed by adding a parameter specific to HTTP/2 requests; it’s now independently configurable for HTTP/1 and HTTP/2

Streamlined the way we tell web browsers that a part of a webpage is done loading by activating the “last byte flag” on the last data frame, which means faster load times

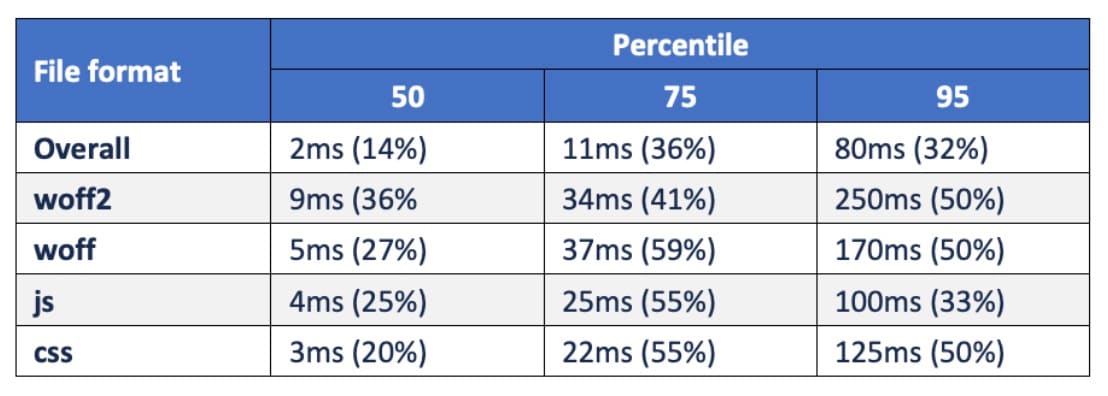

Fine-tuned the way web content is loaded, resulting in faster downloads for important file types, like HTML, CSS, fonts, and JavaScript, and making the web experience better and more efficient (Figure 3)

Fig. 3: Improvement of the download time across different file formats
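
For readers curious about the “last byte flag” item above, the sketch below assumes it corresponds to the END_STREAM flag that the HTTP/2 specification defines for DATA frames. It encodes a response body as DATA frames using the standard 9-byte frame header (24-bit length, 8-bit type, 8-bit flags, 31-bit stream identifier) and sets END_STREAM on the final frame. The function name and parameters are hypothetical; this is not Akamai’s code.

```python
import struct

DATA = 0x0        # frame type for DATA
END_STREAM = 0x1  # flag marking the final frame of a stream


def data_frames(stream_id, body, max_frame_size):
    """Encode body as DATA frames, flagging END_STREAM on the last one."""
    chunks = [
        body[i:i + max_frame_size]
        for i in range(0, len(body), max_frame_size)
    ] or [b""]  # an empty body still needs one frame to close the stream
    frames = []
    for index, chunk in enumerate(chunks):
        flags = END_STREAM if index == len(chunks) - 1 else 0
        header = (
            len(chunk).to_bytes(3, "big")  # 24-bit payload length
            + struct.pack(">BBI", DATA, flags, stream_id & 0x7FFFFFFF)
        )
        frames.append(header + chunk)
    return frames


frames = data_frames(stream_id=1, body=b"x" * 10, max_frame_size=4)
assert [frame[4] for frame in frames] == [0, 0, END_STREAM]
```

Setting the flag on the frame that carries the last bytes lets the receiver treat the response as complete as soon as that frame arrives, rather than waiting for a separate, empty closing frame.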

Summary

We remain dedicated to providing our customers with the best performance possible and to continually improving our solutions. Implementing parallel execution of HTTP/2 multiplexed requests marks a major milestone in fulfilling that commitment.

Learn more

If you found this information useful, you can learn more about HTTP/2 tips and best practices by checking out this documentation or by contacting our support team for further assistance.