How the Edge Improves Microservices

Microservice architecture has transformed the way we develop and operate our applications. Microservices aren't a technology or a programming language. Instead, they create a structure for designing and building applications based on the idea that the individual functions of a website should operate independently. From this simple concept comes a multitude of benefits including:

Faster feature velocity through parallel development efforts

Reduced compute spend from optimized resource utilization for each service

More flexible and responsive scaling on a per-service basis

To help you maximize the productivity gained from microservices, Akamai provides opportunities to further optimize performance and management for each service. Let's dissect Akamai's own web portal, the Akamai Control Center (ACC), as an example. Examine this site with a web development tool and you'll see that it is a collection of content and microservices.

The content -- Cascading Style Sheets (CSS), JavaScript, images, fonts -- is the stuff Akamai has been optimizing, compressing, and accelerating for decades. How about the microservices? Go back to the dev tool view of the ACC home page and you'll see periodic requests to a URL ("/v1/messages/count?status=new") -- that's a service. Specifically, it's the service that updates the number of outstanding notifications you see when logging in to the portal. So, when the web page wants to update the user interface (UI) to reflect the current number of messages, it calls this message service. Because this is a microservice, the whole page doesn't need to reload, which reduces resource usage and improves performance -- both advantages of a microservice architecture.

Most often, a service is called by a script on the page, as in this scenario, but not always. It's also important to remember the relationship between JavaScript files and services: the former make requests to the latter. As a result, we apply caching and minification to JavaScript files because they are static content. We apply routing, traffic management, and dynamic optimization to microservices because they are generally used to deliver dynamic portions of the application -- like the counter in our portal example.

The inability to cache dynamic requests has created a tendency to underutilize the edge when attempting to solve problems of availability, performance, statefulness, and data sovereignty in microservice architecture. In this blog, we'll share some best practices for optimizing microservice performance and management at the edge.

Availability

Beyond the performance cost of being unable to store content one hop away from users, the lack of caching means every client request must go to the microservice to be fulfilled. An origin therefore has to be up and accessible to handle each request, because we can't rely on the "Serve Stale" cache setting to fulfill the request and prevent an error from being displayed. Common practice is to run multiple instances of each service and then load balance across them for availability. The load balancing setup can affect overall service availability and can also increase compute expenses through underutilized resources.

To improve microservice availability:

Move region/data center selection to the edge. Implementing global load balancing in a cloud data center or region is inefficient and introduces failure points. The Akamai Intelligent Edge can be used to route based on user location, client type, and other factors, eliminating the need for redundant load balancing services in each availability zone.

Monitor from the outside to determine health. Internal microservice monitoring is important for operations. For load balancing purposes, however, check whether the application is reachable externally before routing traffic to it. That prevents "false available" events caused by an upstream failure that internal monitoring won't detect.

Implement request-level failover. Some requests will inevitably fail. Availability of the overall service is largely determined by how those failures are handled. Request-level failover at the edge via site failover reduces the visibility of errors to end users. Prioritize failures of dynamic calls -- such as logins or searches -- which have a greater impact on user experience than a missing font or broken image link.
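The second and third practices above can be sketched together. This is a minimal illustration, not Akamai's site failover feature: the origin names, the `send` callable, and the `healthy` set (standing in for the result of external health probes) are all assumptions made for the example.

```python
from typing import Callable, Sequence, Set, Tuple

# (HTTP status, body) returned by a hypothetical transport function.
Response = Tuple[int, str]


def fetch_with_failover(
    path: str,
    origins: Sequence[str],
    send: Callable[[str, str], Response],
    healthy: Set[str],
) -> Tuple[str, int, str]:
    """Try origins in order and return the first response that is not a 5xx.

    `healthy` is assumed to come from probes run outside the data center,
    so an origin that only looks fine internally is skipped up front.
    """
    errors = []
    for origin in origins:
        if origin not in healthy:
            continue  # externally unreachable: do not route to it
        try:
            status, body = send(origin, path)
        except ConnectionError as exc:
            errors.append(f"{origin}: {exc}")
            continue  # fail over to the next origin for this request
        if status >= 500:
            errors.append(f"{origin}: HTTP {status}")
            continue
        return origin, status, body
    raise RuntimeError("all origins failed: " + "; ".join(errors))


def fake_send(origin: str, path: str) -> Response:
    """Stand-in transport: one origin refuses, one errors, one succeeds."""
    if origin == "origin-a":
        raise ConnectionError("connection refused")
    if origin == "origin-b":
        return 503, ""
    return 200, '{"count": 3}'


result = fetch_with_failover(
    "/v1/messages/count?status=new",
    ["origin-a", "origin-b", "origin-c"],
    fake_send,
    healthy={"origin-a", "origin-b", "origin-c"},
)
print(result)
```

In this sketch the user never sees the refused connection or the 503, because the same request is retried against the next origin before a response is returned.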

Performance

If we go back to our ACC example, there's a universal search bar at the top. The bar autocompletes when a user types -- a standard feature in 2020. This autocompletion is also implemented as a service ("/search-api/api/v2/query") as seen with a dev tool. High availability ensures this service works, but performance is more of a concern here. The autocomplete service is an interactive part of the UI. Response time is particularly important -- if it takes five seconds to update after each letter, users will become frustrated.

Luckily, there are tools at the edge to call upon here. Akamai SureRoute is designed to find the fastest way to deliver noncacheable content from your origin server to improve response times, especially for more distant users. Transmission Control Protocol (TCP) optimizations help shuttle the requests and responses between the client and microservice more quickly, and are particularly beneficial when applied to larger request or response bodies. Finally, Transport Layer Security (TLS) connection reuse between the edge and the service reduces the large overhead associated with setting up a secure connection, leaving more compute for the application itself.

Keep in mind that performance actually starts before sending the request. To take the greatest advantage of the performance improvement multiple instances can give users, consider using a performance-based global load balancing scheme. This approach will automatically select the optimal data center for each client location and, when combined with other optimizations, can measurably improve user experience.
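As a rough illustration of performance-based selection, the sketch below picks the healthy data center with the lowest measured latency for a client's region. The region names, data center names, and latency figures are invented for the example; a real global load balancer would gather these measurements continuously.

```python
from typing import Dict, Set

# Hypothetical latency measurements in milliseconds, keyed by client region.
LATENCY_MS: Dict[str, Dict[str, float]] = {
    "eu": {"dc-frankfurt": 18.0, "dc-virginia": 95.0, "dc-singapore": 170.0},
    "us": {"dc-frankfurt": 92.0, "dc-virginia": 12.0, "dc-singapore": 210.0},
}


def pick_data_center(
    client_region: str,
    latency_ms: Dict[str, Dict[str, float]],
    healthy: Set[str],
) -> str:
    """Return the healthy data center with the lowest latency for the region."""
    candidates = {
        dc: ms for dc, ms in latency_ms[client_region].items() if dc in healthy
    }
    if not candidates:
        raise LookupError(f"no healthy data center for region {client_region!r}")
    return min(candidates, key=candidates.get)


all_dcs = {"dc-frankfurt", "dc-virginia", "dc-singapore"}
print(pick_data_center("eu", LATENCY_MS, all_dcs))
print(pick_data_center("eu", LATENCY_MS, all_dcs - {"dc-frankfurt"}))
```

Filtering by health before comparing latency means the fastest data center is only chosen when it can actually serve the request.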

Improvement of mean response times for a 3 MB API response using only edge dynamic acceleration, no caching measured using WebPageTest.org

Statefulness

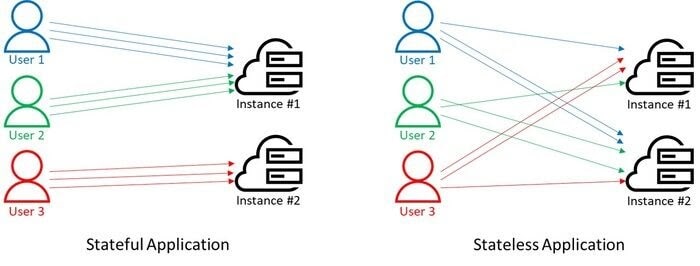

Some microservices are built in a way that allows any instance of that service to handle any request, from any client, at any time. Stateless services are the easiest to load balance because any available instance can process requests, keeping performance in mind, of course.

In contrast, stateful microservices require every request from a session to be routed to the same instance. For example, consider a chatbot. By deploying multiple instances, the best performing, available instance can service the initial request to begin a chat session. However, once a conversation is started with that instance, it must remain there because of the context of the session -- it's stateful.

The challenge is to distribute new requests evenly for better utilization of compute power, while keeping each existing session on the same instance throughout the conversation so the service functions consistently. Attempting to implement this type of routing inside a region or data center is inefficient because some requests inevitably arrive at the wrong place and must be backhauled or redirected to the correct instance. Moving the stateful routing logic for dynamic requests to the edge removes that extra compute, user delay, and complexity, because each request is sent to the correct instance in the first place.
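The two goals described here, spreading new sessions evenly and pinning existing ones, can be sketched in a few lines. This is an in-memory illustration under simplifying assumptions: the class name, instance names, and session IDs are hypothetical, and "load" is just the count of active sessions.

```python
from typing import Dict, Iterable


class StickyRouter:
    """Assign new sessions to the least-loaded instance; pin existing ones."""

    def __init__(self, instances: Iterable[str]) -> None:
        self.active: Dict[str, int] = {name: 0 for name in instances}
        self.sessions: Dict[str, str] = {}

    def route(self, session_id: str) -> str:
        # Existing session: always return the instance that holds its state.
        if session_id in self.sessions:
            return self.sessions[session_id]
        # New session: choose the instance with the fewest active sessions.
        instance = min(self.active, key=self.active.get)
        self.sessions[session_id] = instance
        self.active[instance] += 1
        return instance

    def end(self, session_id: str) -> None:
        # Release the slot when the conversation finishes.
        instance = self.sessions.pop(session_id, None)
        if instance is not None:
            self.active[instance] -= 1


router = StickyRouter(["chat-a", "chat-b"])
first = router.route("session-1")   # new session
second = router.route("session-2")  # new session, goes to the other instance
again = router.route("session-1")   # same session, same instance
print(first, second, again)
```

Because the decision is made before the request is forwarded, no instance ever receives a request it has to hand off to a peer.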

Data Sovereignty

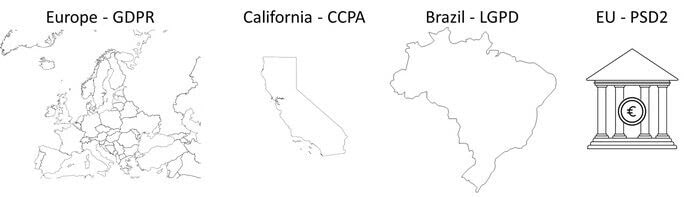

Many applications process sensitive data subject to regulation on where that information is managed and stored. As a result, the microservices that handle this information require specific geography deployments to meet privacy requirements.

Some organizations simply deploy their services in the most stringent regions, hoping to avoid deciding between compliant and noncompliant locations -- an approach that's not always possible and is rarely the most efficient for the rest of the user base. Another approach is to set up a separate instance and hostname for the region requiring special handling, making routing easier by directing users to different URLs. This approach negatively impacts user experience and places far too much of the onus for data privacy compliance on the user.

Instead of multiple hostnames, user characteristics such as location and session information can be used at the edge to determine the compliant instance. Routing this way lets the administrators of each service select cloud providers and locations more efficiently, helping with overall compute costs and operational complexity.
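A minimal sketch of this idea follows, assuming the edge can supply the user's country code. The residency table is entirely made up for illustration and does not describe any actual regulation; the region names are hypothetical too.

```python
from typing import Dict, Sequence, Set

# Hypothetical rules: countries whose data must stay in specific regions.
# Countries not listed here are treated as unrestricted in this sketch.
RESIDENCY_RULES: Dict[str, Set[str]] = {
    "DE": {"eu-central"},
    "FR": {"eu-central", "eu-west"},
}


def compliant_region(
    country: str,
    preferred_order: Sequence[str],
    rules: Dict[str, Set[str]],
) -> str:
    """Return the first preferred region that the user's country allows."""
    allowed = rules.get(country)  # None means no restriction applies
    for region in preferred_order:
        if allowed is None or region in allowed:
            return region
    raise LookupError(f"no compliant region available for {country!r}")


# One hostname for everyone; only the chosen backend region differs.
order = ["us-east", "eu-west", "eu-central"]
print(compliant_region("US", order, RESIDENCY_RULES))
print(compliant_region("FR", order, RESIDENCY_RULES))
print(compliant_region("DE", order, RESIDENCY_RULES))
```

Users in every country reach the same URL, and the compliance decision is made on their behalf rather than left to them.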

Case study

Now, let's look at how an Akamai customer, a global SaaS provider, streamlined management of a complex web application by improving service availability with edge load balancing. The application consisted of typical static content -- JavaScript, CSS, and images -- but there were also more than three dozen microservices. For performance, availability, and regulatory requirements, each service was distributed globally across up to eight different zones.

The routing was complicated by the heterogeneity of the services -- some were stateful, some required specific geographic compute locations, and others had client latency demands. As a result, each team implemented its own layer of logic using proxies and load balancers, creating an unnecessarily complex architecture.

In an effort to consolidate these multiple layers, we began with a single, but very critical, service. Stripping away the layers of routing logic, we cataloged the endpoints for that service and defined them all directly in the Akamai edge configuration. This particular service required a very specific routing order among six possible global compute instances. Once a session with a particular instance began, however, that session needed to be maintained.

The solution included a combination of Domain Name System (DNS) and Layer 7 load balancing implemented at the edge. DNS load balancing via Akamai's Global Traffic Management was deployed to select the optimal region for each client session. Since DNS doesn't address the service's statefulness requirement, the edge automatically inserted a client cookie indicating which instance the session used. The cookie is used at the edge for any subsequent in-session routing to provide statefulness without any application changes.
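The cookie step can be illustrated roughly as follows. This is not the customer's configuration or an Akamai API: the cookie name, instance names, and the `dns_choice` argument (standing in for the instance that DNS load balancing selected) are assumptions for the sketch.

```python
from http.cookies import SimpleCookie
from typing import Optional, Tuple

COOKIE_NAME = "edge_instance"  # hypothetical cookie name
INSTANCES = {f"instance-{n}" for n in range(1, 7)}  # six hypothetical instances


def route_request(
    cookie_header: Optional[str], dns_choice: str
) -> Tuple[str, Optional[str]]:
    """Return (target instance, Set-Cookie value to add or None).

    A valid affinity cookie wins, which keeps the session on its instance.
    Otherwise the DNS-selected instance is used and a cookie is issued so
    later requests in the session follow it.
    """
    cookie = SimpleCookie()
    cookie.load(cookie_header or "")
    morsel = cookie.get(COOKIE_NAME)
    if morsel is not None and morsel.value in INSTANCES:
        return morsel.value, None
    set_cookie = f"{COOKIE_NAME}={dns_choice}; Path=/; HttpOnly; Secure"
    return dns_choice, set_cookie


# First request of a session: no cookie yet, so one is issued.
print(route_request(None, "instance-2"))
# Later request: the cookie overrides whatever DNS would pick now.
print(route_request("edge_instance=instance-5; theme=dark", "instance-2"))
```

Validating the cookie value against the known instance set means a stale or tampered cookie falls back to the DNS choice instead of routing to a nonexistent target.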

With these optimizations, the customer:

Achieved 99.999% availability for the first time

Eliminated multiple redundant instances of load balancers

Removed dependency on inter-availability zone connectivity

Manage dynamic services at the edge

Typical microservices deployment -- centralized load balancing and extraneous solution elements

Improved deployment model -- reduced solution elements, simplified management

Microservices are used to implement the dynamic portions of a web application, such as the login, alert counter, search functions, and interactive features. These services are fundamentally different from the static objects Akamai caches, but that doesn't mean you can't use the edge to optimize them.

Client requests for these services always require an available origin and often have many layers of rules to determine the best destination. These rules, along with the added complexity that results from breaking up an application into many pieces, are what make effective load balancing so difficult with a centralized approach. By extending the same edge that already provides caching, UI optimization, and security to implement a unified routing layer, you can reduce that complexity and work across environments.