How a CDN Can Make Your APIs More Powerful

Co-written by: Atoosa Rezai, Senior Technical Account Manager at Akamai Technologies.

Companies of all sizes and in every industry use APIs for a staggering range of activities. Just a few examples: APIs make it easy to include weather forecasts in apps, recommend driving routes where you are least likely to have an accident, and allow banks to comply with open banking regulations.

But there are difficulties. Like general web traffic, APIs use the HTTP and HTTPS protocols to transfer information between servers and clients. This means that the delivery, reliability, and scalability challenges websites face are also challenges for APIs.

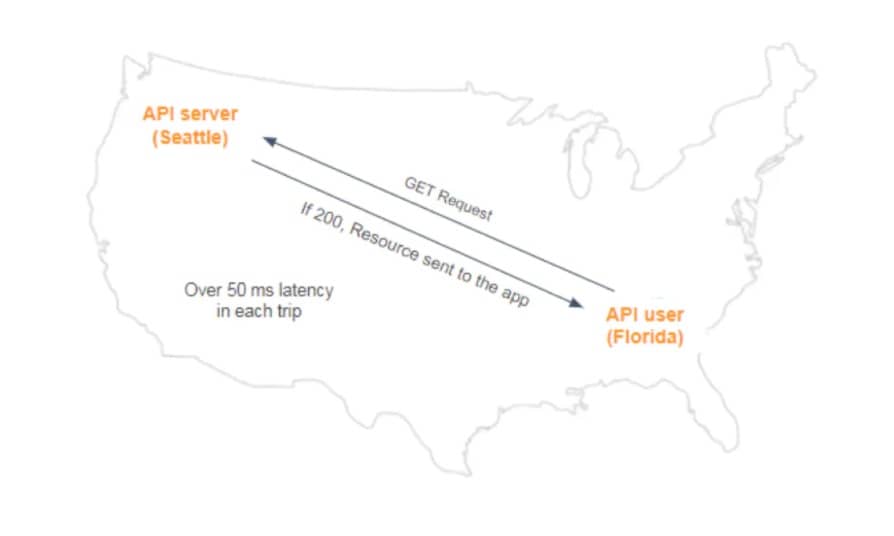

Let’s look at a typical API call to understand how each of these challenges can have a detrimental impact on your APIs. It starts with an API consumer making a GET request to an API endpoint which connects to an API server that, in turn, requests a resource from a database server (see diagram below). That’s when the challenges begin.

API challenge #1: latency

The scenario outlined above works fine if your consumers are located close to your servers. But what if they're not? There is often a great distance between your API consumers and your backend, and that distance can significantly increase the latency of serving requests.

The above image demonstrates latency experienced by an end user in Florida using a mobile app whose origin is at a data center in Seattle. Within the continental U.S. alone, each leg of the request/response cycle can take upwards of 50 milliseconds. The latency will be substantially higher for users who access the app from other parts of the world.

Increased latency makes your websites and apps slow to load, which can cost you revenue and brand value. Across sites and apps, 53% of visits are abandoned if a mobile site takes more than three seconds to load, and 49% of users expect a mobile app to respond within two seconds.

API challenge #2: reliability

Another major issue with keeping your backend infrastructure in a single data center or region is reliability. If that data center goes down, it can take down your APIs and everything that depends on them. If your cloud provider's region goes down, you may have no control over where that traffic fails over.

To solve the reliability problem, API developers often replicate their backend infrastructure across multiple regions or data centers, eliminating the single point of failure. However, this approach is expensive and requires meticulous engineering and operational practices to ensure that the right resources are available in every location.
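The core idea of failing over between replicated backends can be sketched in a few lines of Python. This is a minimal illustration, not how Akamai or any particular load balancer implements failover: the `fetch(origin, path)` callable, the `AllOriginsFailed` exception, and the replica hostnames are all hypothetical. The function simply tries each replicated origin in order and moves on when one is unreachable.

```python
from typing import Callable, Sequence


class AllOriginsFailed(Exception):
    """Raised when every replica in the list has failed."""


def fetch_with_failover(path: str, origins: Sequence[str],
                        fetch: Callable[[str, str], bytes]) -> tuple[str, bytes]:
    """Try each replicated origin in order; return (origin, body) from the first that succeeds."""
    errors: list[str] = []
    for origin in origins:
        try:
            return origin, fetch(origin, path)
        except (ConnectionError, TimeoutError) as exc:
            # Record the failure and move on to the next region.
            errors.append(f"{origin}: {exc}")
    raise AllOriginsFailed("; ".join(errors))


if __name__ == "__main__":
    def fake_fetch(origin: str, path: str) -> bytes:
        # Simulate the primary region being down (hypothetical hostnames).
        if origin == "origin-us-west.example":
            raise ConnectionError("region unavailable")
        return b'{"status": "ok"}'

    print(fetch_with_failover("/v1/status",
                              ["origin-us-west.example", "origin-us-east.example"],
                              fake_fetch))
    # ('origin-us-east.example', b'{"status": "ok"}')
```

Ordering the list by preference (for example, nearest region first) keeps the common case fast, while the loop guarantees a request only fails when every replica has failed.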

API challenge #3: traffic spikes

APIs routinely encounter large spikes in consumption, such as with holiday traffic for retailers, during open enrollment for health care companies, and at year-end for travel and hospitality companies. You can analyze past data to forecast the amount of traffic during the next peak period and then provision additional infrastructure accordingly. But what if the actual traffic is far greater than what you forecasted? If you’re not prepared for this surge, you run the risk of overwhelming your data centers and making your API unavailable to consumers.

If you use cloud infrastructure, you can notify your provider in advance of an expected traffic spike so that servers are available and warmed up. Another common approach to preparing for traffic spikes is known as elastic scaling, but in practice elastic scaling is not instantaneous, is not available for every API, and servers can take 20 minutes or more to spin up. In short, because peak traffic is unpredictable, capacity planning for it is difficult.

How a CDN can help

The answer to all three of these API challenges (latency, reliability, and traffic spikes) is a geographically distributed network that can deliver content as close to the end user as possible, known as a content delivery network (CDN).

Akamai’s CDN — the Akamai Intelligent Edge Platform™ — has over 240,000 edge servers deployed in 2,400 globally distributed data centers in over 130 countries.

The global deployment of the platform lets you serve consumers from an edge server close to them, thus reducing latency.

The platform’s 100% service-level agreement (SLA) for availability ensures that your API service is always on and maximizes your reliability.

The platform gives you the scale needed to handle spikes in API traffic, planned or unplanned, without having to scale your own servers.

The diagram below illustrates API responses being served by Akamai’s edge servers instead of the origin server:

Getting started

If you’re ready to power up your APIs with a CDN, we’re ready for you. It’s easy to get your API traffic onto the Akamai platform.

The first thing to do is CNAME your API to Akamai. CNAME and A are two common Domain Name System (DNS) record types. An A record points a hostname directly to a specific IP address, whereas a CNAME record points the hostname to another hostname instead of an IP address.

For example, if you want to point techno.com to the server 192.0.2.1, then the A record will look like this:

techno.com A 192.0.2.1

In this case, all the requests made to techno.com will be directed to the server with IP address 192.0.2.1.

If you run multiple services, such as an API, a blog, or FTP, under your techno.com domain, a CNAME record will make your life easier. Instead of creating an A record for each service, you can CNAME API.techno.com, blog.techno.com, and ftp.techno.com to techno.com, and create a single A record pointing techno.com to 192.0.2.1. That way, if your IP address changes, you only need to update the A record for techno.com.

Here's what those CNAME and A records look like together:

| NAME | TYPE | VALUE |
| --- | --- | --- |
| API.techno.com | CNAME | techno.com |
| techno.com | A | 192.0.2.1 |
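To make the CNAME chain concrete, here is a toy resolver in Python that follows CNAME records until it reaches an A record. The in-memory `ZONE` dictionary and the `resolve` helper are hypothetical illustrations, not a real DNS client; real resolvers also handle TTLs, caching, and many more record types.

```python
# Toy in-memory zone mirroring the records above (illustrative only).
ZONE: dict[str, tuple[str, str]] = {
    "api.techno.com": ("CNAME", "techno.com"),
    "blog.techno.com": ("CNAME", "techno.com"),
    "ftp.techno.com": ("CNAME", "techno.com"),
    "techno.com": ("A", "192.0.2.1"),
}


def resolve(name: str, zone: dict[str, tuple[str, str]], max_hops: int = 8) -> str:
    """Follow CNAME records until an A record is reached; return its IP address."""
    name = name.lower()  # DNS names are case-insensitive
    for _ in range(max_hops):
        if name not in zone:
            raise LookupError(f"no record for {name}")
        rtype, value = zone[name]
        if rtype == "A":
            return value
        if rtype == "CNAME":
            name = value.lower()  # restart the lookup at the canonical name
            continue
        raise ValueError(f"unsupported record type {rtype}")
    raise LookupError("CNAME chain too long (possible loop)")


if __name__ == "__main__":
    print(resolve("API.techno.com", ZONE))  # 192.0.2.1
    # Moving the server means editing only the A record for techno.com...
    moved = {**ZONE, "techno.com": ("A", "192.0.2.2")}
    # ...and every CNAME'd service follows automatically.
    print(resolve("blog.techno.com", moved))  # 192.0.2.2
```

The `max_hops` limit guards against CNAME loops, which a real resolver must also detect.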

When you CNAME your API endpoint to Akamai, we use DNS to determine where the calling client is located, then follow a CNAME chain to route the traffic accordingly. This allows us to direct API requests to the edge server that's closest to your consumer.
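As a rough illustration of "the edge server that's closest to your consumer", the Python sketch below picks the edge location with the smallest great-circle (haversine) distance to the client. The `EDGES` coordinates and the `nearest_edge` helper are invented for the example, and pure distance is a deliberate simplification: a production mapping system would likely weigh more than geography, such as network conditions and server load.

```python
import math

# Hypothetical edge locations (name -> latitude, longitude); not a real CDN map.
EDGES: dict[str, tuple[float, float]] = {
    "seattle": (47.61, -122.33),
    "chicago": (41.88, -87.63),
    "miami": (25.76, -80.19),
    "frankfurt": (50.11, 8.68),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometres."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_edge(client: tuple[float, float], edges: dict[str, tuple[float, float]]) -> str:
    """Return the name of the edge with the smallest great-circle distance to the client."""
    return min(edges, key=lambda name: haversine_km(client, edges[name]))


if __name__ == "__main__":
    orlando = (28.54, -81.38)
    print(nearest_edge(orlando, EDGES))  # miami
```

In the article's example, a consumer in Florida would be served from a nearby edge rather than from the Seattle origin.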

Once we've identified the optimal edge server for your traffic, the request is served from that server's cache, avoiding a long (read: higher-latency) trip to your origin. If the response isn't in cache, we find the fastest route back to origin to get it, which is often not a straight line. Akamai SureRoute routes around internet congestion points to return the response as quickly as possible.
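The serve-from-cache-or-go-to-origin flow is the classic cache-aside pattern. The sketch below is a generic, single-process version with a time-to-live (TTL); the `EdgeCache` class and the `fetch_from_origin` callable are hypothetical, and it does not model Akamai's caching or SureRoute's path selection.

```python
import time
from typing import Callable


class EdgeCache:
    """Minimal TTL cache: serve from memory while fresh, otherwise fetch from origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested deterministically
        self._store: dict[str, tuple[float, bytes]] = {}  # path -> (expires_at, body)

    def get(self, path: str) -> tuple[bytes, bool]:
        """Return (body, cache_hit)."""
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            return entry[1], True  # fresh hit: no trip to origin
        body = self._fetch(path)  # miss or expired entry: go back to origin
        self._store[path] = (now + self._ttl, body)
        return body, False


if __name__ == "__main__":
    origin_calls: list[str] = []

    def origin(path: str) -> bytes:
        origin_calls.append(path)
        return b'{"forecast": "sunny"}'

    cache = EdgeCache(origin, ttl_seconds=60)
    print(cache.get("/v1/weather")[1])  # False (miss, fetched from origin)
    print(cache.get("/v1/weather")[1])  # True (served from cache)
    print(len(origin_calls))            # 1
```

The TTL is the trade-off knob: a longer TTL saves more origin trips, while a shorter one keeps data fresher.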

Caching improves API performance

A CDN can make your APIs faster, more reliable, and more scalable by caching data at the edge. So when you design your API, think about separating data that can be reused (and therefore cached) from data that cannot. Caching static content as well as API response codes at the edge will significantly cut the response time of your APIs.
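One way to act on the reusable-versus-not split is to decide a `Cache-Control` header per endpoint. The sketch assumes a hypothetical `Endpoint` description of your API and hypothetical paths; the directives used (`public`, `max-age`, `no-store`) are standard HTTP, but whether a given CDN honors origin `Cache-Control` headers depends on how it is configured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    path: str
    per_user: bool        # response depends on who is calling (e.g. account data)
    max_age_seconds: int  # how long a shared copy stays valid; 0 means never reuse


def cache_control(ep: Endpoint) -> str:
    """Choose a Cache-Control header value for an endpoint's responses."""
    if ep.per_user or ep.max_age_seconds <= 0:
        # no-store tells every cache, including a CDN, not to keep a copy.
        return "no-store"
    # public + max-age lets shared caches reuse the response until it expires.
    return f"public, max-age={ep.max_age_seconds}"


if __name__ == "__main__":
    for ep in (
        Endpoint("/v1/forecast/seattle", per_user=False, max_age_seconds=300),
        Endpoint("/v1/accounts/me/balance", per_user=True, max_age_seconds=0),
    ):
        print(ep.path, "->", cache_control(ep))
    # /v1/forecast/seattle -> public, max-age=300
    # /v1/accounts/me/balance -> no-store
```

Making the decision explicit per endpoint keeps per-user data out of shared caches while letting broadly reusable responses, like a weather forecast, be served from the edge.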