HTTP/2 Request Smuggling

HTTP Request Smuggling (also known as an HTTP Desync Attack) has seen a resurgence in security research recently, thanks in large part to the outstanding work of security researcher James Kettle. His 2019 Black Hat presentation on HTTP Desync attacks exposed vulnerabilities in different implementations of the HTTP standards, particularly within proxy servers and Content Delivery Networks (CDNs). These differences in how proxy servers interpret the construction of web requests have led to new request smuggling vulnerabilities.

One researcher inspired by Kettle's effort is Emil Lerner, who gave a security presentation on HTTP/2 request smuggling attacks in May 2021. He also released a testing tool called http2smugl to allow organizations to assess their exposure to these attack vectors. At the same time, Kettle has been researching similar attacks. On August 5, 2021 at Black Hat 2021 in Las Vegas, Kettle presented a talk entitled, "HTTP/2: The Sequel is Always Worse". In this blog post, we will review past HTTP Request Smuggling research and then take a closer look at the new HTTP/2 attack vector.

Request smuggling basics

Request smuggling is not a new attack; it has been known since 2005. Request smuggling attacks can be leveraged in many ways by attackers, including accessing sensitive data, polluting cached content and even mass cross-site scripting.

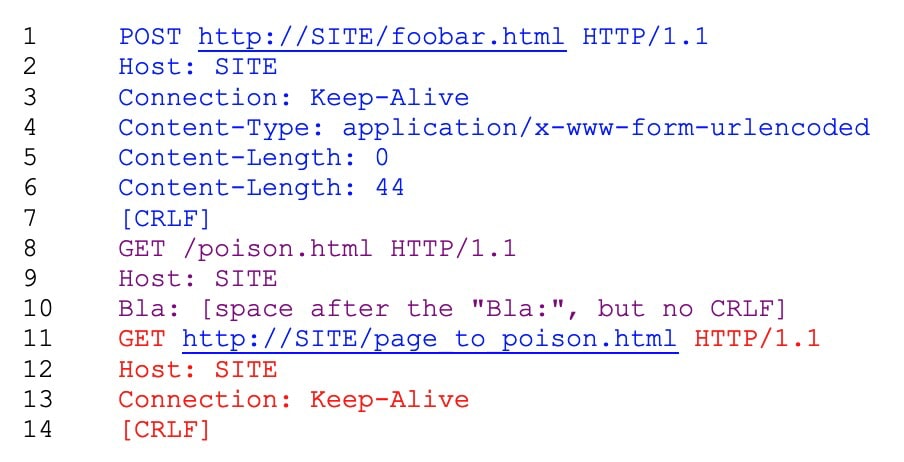

The original concept focused on misinterpretations of HTTP headers when an HTTP request had more than one Content-Length header, as shown on lines 5 and 6 of the example request.

Having duplicate, conflicting Content-Length headers could cause different proxy servers to interpret the request differently, depending upon how the HTTP standards were implemented. Specifically, the issue is whether this is a single request with the data from lines 8-13 as the request body content or whether it is actually two separate requests.
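The ambiguity can be sketched with a toy parser that frames requests by Content-Length and can be told to trust either the first or the last such header. This is an illustration only: the function name `split_requests`, the `pick_last` switch, and the `example.test` host are assumptions for this sketch and do not model any particular proxy.

```python
def split_requests(stream: bytes, pick_last: bool) -> list[bytes]:
    """Split a byte stream into requests using Content-Length framing.

    pick_last selects which header wins when several are present.
    """
    requests = []
    while stream:
        head, sep, rest = stream.partition(b"\r\n\r\n")
        if not sep:
            break  # incomplete header block, stop parsing
        lengths = [
            int(line.split(b":", 1)[1].strip())
            for line in head.split(b"\r\n")[1:]  # skip the request line
            if line.lower().startswith(b"content-length:")
        ]
        if not lengths:
            body_len = 0
        else:
            body_len = lengths[-1] if pick_last else lengths[0]
        requests.append(head + sep + rest[:body_len])
        stream = rest[body_len:]
    return requests


# A request carrying two conflicting Content-Length headers.
smuggled = b"GET /admin HTTP/1.1\r\nHost: example.test\r\n\r\n"
raw = (
    b"POST / HTTP/1.1\r\n"
    b"Host: example.test\r\n"
    b"Content-Length: 0\r\n"
    b"Content-Length: " + str(len(smuggled)).encode() + b"\r\n"
    b"\r\n" + smuggled
)

# One parser sees a single POST with a body; the other sees two requests.
print(len(split_requests(raw, pick_last=True)))
print(len(split_requests(raw, pick_last=False)))
```

When two servers in the same request path make different choices here, the second one treats the bytes the first considered body data as the start of a new request.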

Akamai's response

Akamai responded to the original HTTP Request Smuggling issue from 2005 by updating our CDN Edge server platform to identify and block many request anomalies related to HTTP Request Smuggling such as:

Duplicate Content-Length headers

Spaces in the Content-Length header name value

Extending headers over multiple lines by preceding each extra line with at least one space or horizontal tab (obs-folding)

An HTTP request found in POST body

Empty Continuation header data
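As a rough illustration of what checks of this kind can look like, the sketch below flags four of the anomalies listed above in a raw HTTP/1.1 request. It is not Akamai's implementation; the function name `find_anomalies`, the finding strings, and the regular expression are assumptions made for the example.

```python
import re

# A line that looks like an HTTP/1.x request line, e.g. "GET /x HTTP/1.1".
REQUEST_LINE = re.compile(rb"^[A-Z]+ \S+ HTTP/\d\.\d\r?$", re.MULTILINE)


def find_anomalies(raw: bytes) -> list[str]:
    """Return a list of smuggling-related anomalies found in one request."""
    head, _, body = raw.partition(b"\r\n\r\n")
    lines = head.split(b"\r\n")[1:]  # skip the request line
    findings = []

    # Header names, ignoring continuation lines that start with SP or HTAB.
    names = [l.split(b":", 1)[0] for l in lines if l[:1] not in (b" ", b"\t")]
    content_lengths = [n for n in names if n.strip().lower() == b"content-length"]

    if len(content_lengths) > 1:
        findings.append("duplicate Content-Length")
    if any(n != n.strip() for n in content_lengths):
        findings.append("whitespace in Content-Length name")
    if any(l[:1] in (b" ", b"\t") for l in lines):
        findings.append("obs-fold continuation line")
    if REQUEST_LINE.search(body):
        findings.append("request line inside body")
    return findings


suspicious = (
    b"POST / HTTP/1.1\r\n"
    b"Host: example.test\r\n"
    b"Content-Length: 5\r\n"
    b"Content-Length : 40\r\n"
    b"X-A: 1\r\n"
    b"\tfolded\r\n"
    b"\r\n"
    b"GET /x HTTP/1.1\r\n\r\n"
)
print(find_anomalies(suspicious))
```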

2019 Research: HTTP Desync via Transfer-Encoding Headers

Kettle's research resurrected the HTTP request smuggling concept by reviewing the HTTP RFCs and identifying another area where proxy servers may have implementation differences. RFC 2616 states the following regarding requests that have both Content-Length and Transfer-Encoding headers:

As stated in the RFC, the Transfer-Encoding header must take precedence over a Content-Length header when processing the request. Semantic differences in implementations of proxy servers caused this attack to manifest into HTTP Desync scenarios.
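The precedence rule can be sketched as a small framing decision. The first function below follows the RFC behavior described above; the second is a hypothetical non-compliant parser that looks at Content-Length first. Both function names and the return strings are assumptions for illustration.

```python
def framing_rfc(headers):
    """Transfer-Encoding: chunked takes precedence over Content-Length."""
    fields = {name.lower(): value.strip().lower() for name, value in headers}
    codings = fields.get("transfer-encoding", "").split(",")
    if codings[-1].strip() == "chunked":
        return "chunked"
    if "content-length" in fields:
        return "content-length"
    return "no-body"


def framing_length_first(headers):
    """Hypothetical non-compliant parser: Content-Length is checked first."""
    fields = {name.lower(): value for name, value in headers}
    if "content-length" in fields:
        return "content-length"
    return framing_rfc(headers)


request_headers = [
    ("Host", "example.test"),
    ("Content-Length", "6"),
    ("Transfer-Encoding", "chunked"),
]

# The two parsers disagree on where the request body ends.
print(framing_rfc(request_headers), framing_length_first(request_headers))
```

If a front-end proxy behaves like one function and the back-end server like the other, they will disagree on the boundary between this request and the next one on the shared connection.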

In order to understand why the order of interpretation matters, it is important to know how many CDNs handle traffic going forward to customers' websites. Typically there is a persistent, shared connection between the CDN proxy and the customers' web sites. The CDN servers must maintain a mapping of request/response data from the front-end clients with the data being returned. In an HTTP request smuggling/desync attack, this mapping gets corrupted as extra response content is returned and not properly mapped to a front end client request.

HTTP Desync image by PortSwigger.net

Akamai's response

In order to protect against this type of attack at the platform level, Akamai updated our compliance with the RFC 2616 specification to ensure the Transfer-Encoding header had precedence. Additionally, our engineering team added broader header-parsing logic to comply with RFC 7230. This logic detects if:

Header does not end with "\r\n"

Header does not contain a colon ":"

Header does not contain a name
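The three conditions above can be expressed as a minimal per-line check. This is a sketch rather than Akamai's parser; `header_line_problems` and its messages are hypothetical names chosen for the example.

```python
def header_line_problems(line: bytes) -> list[str]:
    """Check one raw header line against the three conditions above."""
    problems = []
    if not line.endswith(b"\r\n"):
        problems.append("missing CRLF terminator")
    content = line.rstrip(b"\r\n")
    name, colon, _value = content.partition(b":")
    if not colon:
        problems.append("missing colon")
    elif not name.strip():
        problems.append("missing name")
    return problems


for sample in (b"Host: a\r\n", b"Host: a\n", b"Host a\r\n", b": value\r\n"):
    print(sample, header_line_problems(sample))
```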

Transfer-Encoding header obfuscation

After CDNs implemented updates, the security research community pivoted to attempts to obfuscate the Transfer-Encoding: chunked request header. If a researcher could trick the front-end proxy into not seeing a Transfer-Encoding header while another proxy upstream does interpret one, then an HTTP Request Smuggling attack would still be possible. Here are some examples of different obfuscation attempts:

Transfer-Encoding: xchunked

Transfer-Encoding : chunked

Transfer-Encoding: chunked Transfer-Encoding: x

Transfer-Encoding:[tab]chunked

GET / HTTP/1.1 Transfer-Encoding: chunked

X: X[\n]Transfer-Encoding: chunked

Transfer-Encoding : chunked
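To see why such variants matter, consider two toy header matchers, one strict and one lenient. Both are hypothetical and written only for this illustration; real servers differ in many other ways. Any variant on which the two disagree is a candidate for a desync between a front end and a back end.

```python
def sees_chunked_strict(line: str) -> bool:
    """Exact header name, no whitespace before the colon, exact value."""
    name, sep, value = line.partition(":")
    return (
        sep == ":"
        and name.lower() == "transfer-encoding"
        and value.strip(" \t").lower() == "chunked"
    )


def sees_chunked_lenient(line: str) -> bool:
    """Tolerates padding around the name and matches 'chunked' loosely."""
    name, sep, value = line.partition(":")
    return (
        sep == ":"
        and name.strip().lower() == "transfer-encoding"
        and "chunked" in value.lower()
    )


variants = [
    "Transfer-Encoding: xchunked",
    "Transfer-Encoding : chunked",
    "Transfer-Encoding:\tchunked",
]

# Variants that only one of the two matchers treats as chunked encoding.
mismatches = [
    v for v in variants if sees_chunked_strict(v) != sees_chunked_lenient(v)
]
print(mismatches)
```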

Kettle created a Burp extension called "HTTP Request Smuggler" that tries a wide variety of these obfuscation techniques.

Akamai's response

Many of these request header obfuscation attempts result in our CDN Edge server stripping out the header due to it being malformed. For the headers that the CDN Edge server does process and keep, the next layer of defense is the Akamai Web Application Firewall (WAF). We added many rules to identify request smuggling attempts and protect customers from forwarding these requests to Origin.

Akamai's HTTP/2 support

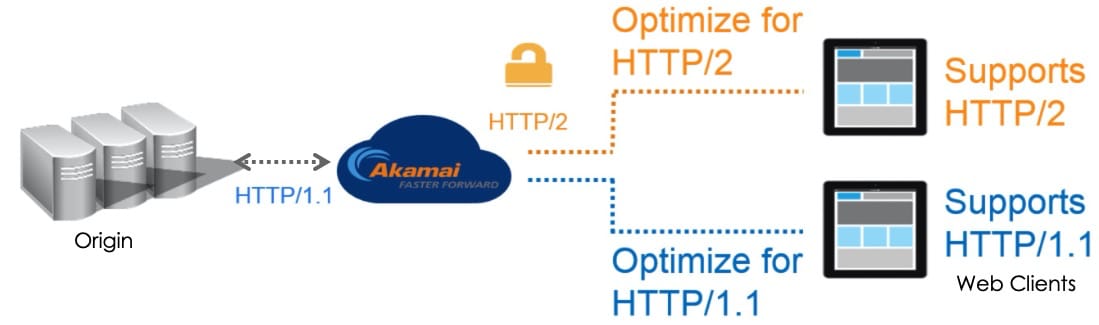

Akamai has supported the HTTP/2 protocol on our platform since 2015. Here is an architectural graphic showing some important concepts.

Akamai supports both HTTP/1.1 and HTTP/2 at the edge in order to interact with a wide variety of web clients. When Akamai forwards requests to Origin, however, we utilize a persistent, shared HTTP/1.1 connection. This means that any HTTP/2 requests received at the edge must be terminated and translated into HTTP/1.1 for processing by WAF and then forwarded to the origin.

HTTP/2 request smuggling

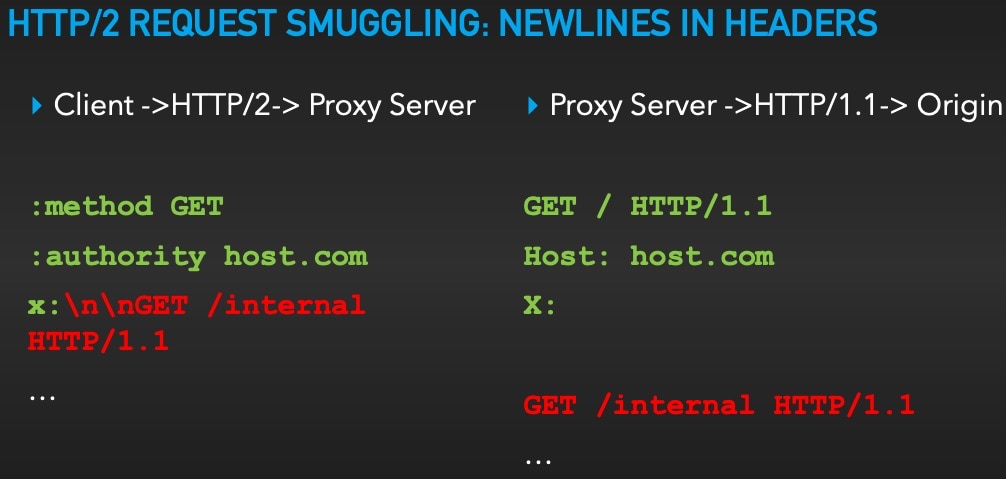

Now that we have discussed previous HTTP Request Smuggling research, we can move on to the current research presented by Kettle. The key component of the new research is the fact that HTTP/2 is a binary protocol. Request headers are not delimited by "\r\n" as they are in HTTP/1.1, so header names and values can contain newline characters. This means that there is potential for more processing mismatches during translation/downgrading of the HTTP/2 data to HTTP/1.1. To put it more plainly, improper translation of an HTTP/2 request may create a new HTTP/1.1 request header and cause a smuggling attack. This is similar to the header obfuscation tactics mentioned earlier in this blog post; this time, however, the front-end client sends the data in HTTP/2 format. Here is an example of this scenario:
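The downgrade problem can be sketched with a naive translator that writes HTTP/2 header fields straight into HTTP/1.1 text, next to a variant that rejects CR, LF, and NUL before translating. The function names and the sample headers are assumptions for illustration, and the check covers only this one class of malformed field.

```python
def downgrade_naive(method, path, headers):
    """Serialize HTTP/2 fields as HTTP/1.1 text without any validation."""
    lines = [f"{method} {path} HTTP/1.1"]
    lines += [f"{name}: {value}" for name, value in headers]
    return "\r\n".join(lines) + "\r\n\r\n"


FORBIDDEN = set("\r\n\0")


def downgrade_checked(method, path, headers):
    """Reject fields containing CR, LF, or NUL, then serialize."""
    for name, value in headers:
        if FORBIDDEN & set(name) or FORBIDDEN & set(value):
            raise ValueError(f"illegal character in header {name!r}")
    return downgrade_naive(method, path, headers)


# One HTTP/2 header whose value hides a second HTTP/1.1 header.
evil = [
    ("host", "example.test"),
    ("x", "a\r\ntransfer-encoding: chunked"),
]

# The naive output contains a transfer-encoding line the client never
# sent as a separate HTTP/2 header field.
print(downgrade_naive("GET", "/", evil))
```

Rejecting the request at the HTTP/2 layer, before any HTTP/1.1 text is produced, means the injected header never reaches the origin connection.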

Akamai's response

Akamai's threat research team received new Burp HTTP Request Smuggler proof-of-concept tooling from CERT/CC (via Kettle). The PoC tool sends three different HTTP/2 smuggling requests. We also obtained Lerner's http2smugl tool from GitHub. Using both tools, we tested our CDN Edge server platform to validate our processing of these HTTP/2 requests and to identify any gaps or issues. When run in "detect" mode, the http2smugl tool tries roughly 564 different combinations of smuggling techniques. Appendix A provides example output from the http2smugl tool. We ran this tool against our CDN Edge servers with our default logging settings modified to give debug-level insight into the HTTP/2 processing. Our testing determined that the servers blocked all requests during HTTP/2 processing, before the request was translated into HTTP/1.1. We also ran the http2smugl tool in single "request" mode to confirm one-to-one processing of different smuggling examples. Here is an example of the protocol error message that is returned when the tool is run:

$ ./http2smugl request https://internal-test-server.com/ "x:\n\nGET /internal HTTP/1.1"

Error: server dropped connection, error=error code PROTOCOL_ERROR

Our testing with both tools showed that all HTTP/2 requests with obfuscated smuggling header data were blocked by our CDN Edge servers.

Conclusion

HTTP Request Smuggling seems to be another case of "What was old is new again." As organizations adopt new protocols and technologies, new attack surfaces for older vulnerabilities may be discovered. It is therefore critical to ensure that an organization has an agile response to the discovery of new vulnerabilities and is able to test and identify any new issues.

Akamai appreciates the work of researchers like Kettle and the coordination with CERT/CC that allowed us to test for this vulnerability prior to the presentation. HTTP Request Smuggling enables an attacker to exploit a variety of vulnerabilities, and a coordinated response by the community is essential to safeguard the millions of systems that might be vulnerable.