Building an Effective Bot Management Strategy

Bot management isn’t just about detecting threats; it’s also about having a tailored response strategy. Too often, we see a strong detection strategy combined with a weak response strategy (or vice versa).

If you have a strong detection strategy but a weak response strategy, you may perceive bots that get through as false negatives — in reality, the detections worked but your response strategy allowed the bots through.

Conversely, if you have a weak detection strategy paired with a strong response strategy, you may feel a false sense of security because you’re taking action on the bots you’re detecting — in fact you’re letting through all the bots you didn’t detect.

In this post, we’ll provide guidance on how to effectively manage bots and prevent both false negatives (when bots are mistaken for humans) and false positives (when humans are mistaken for bots).

How the bot detection layer affects bot management strategies

An effective bot management product must have a strong detection layer, featuring several detection methods that look at web traffic from different angles. This helps you differentiate legitimate traffic from malicious traffic and detect potential threats like credential stuffing and inventory hoarding.

Over the years, we’ve learned how bot operators evolve their attack strategies to evade detection. The rate of evolution is generally proportional to the defense in place, and the persistence of the attack depends on the economic incentive.

Simple attack strategies and more advanced botnets

Let’s suppose the targeted website is protected only by a web application firewall (WAF) with basic bot detection methods that check the HTTP headers and the request rate. In this case, it’s easy enough for attackers to evade detection. With a botnet, they can load balance requests through multiple proxies to keep the request rate low for each “node” and craft requests with an HTTP header signature close enough to one seen in a typical browser like Chrome or Firefox. Automation tools like Sentry MBA and OpenBullet make this possible.
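To see why a per-node rate check is so easy to sidestep, consider the minimal sketch below. It assumes a naive detector that flags any source IP exceeding a fixed request count in one time window; the threshold, the `flagged_ips` helper, and the documentation-range IP addresses are all hypothetical and are not taken from any specific WAF.

```python
from collections import Counter

# Hypothetical threshold: maximum requests allowed per source IP in one window.
MAX_REQUESTS_PER_IP = 10


def flagged_ips(request_ips, limit=MAX_REQUESTS_PER_IP):
    """Return the set of source IPs whose request count exceeds the limit."""
    counts = Counter(request_ips)
    return {ip for ip, n in counts.items() if n > limit}


# 1,000 requests from a single address: trivially detected.
single_source = ["203.0.113.7"] * 1000

# The same 1,000 requests spread across 200 proxies: 5 requests per IP,
# so every "node" stays under the per-IP limit.
proxies = [f"198.51.100.{i}" for i in range(200)]
distributed = [proxies[i % len(proxies)] for i in range(1000)]

print(flagged_ips(single_source))  # the single noisy address is flagged
print(flagged_ips(distributed))    # nothing is flagged
```

The attack volume is identical in both cases. Only the distribution changes, which is why rate-based checks alone are not a sufficient detection layer.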

But with a bot management product in place, such a simple attack strategy won’t work. Attackers must resort to more advanced strategies, such as randomizing the device and browser characteristics collected client-side through JavaScript in an attempt to defeat fingerprinting detection methods.

They’re also forced to load balance the traffic through more advanced proxy services (like mobile and residential IP addresses) and mimic user behavior such as mouse movement and keystrokes.

The more advanced botnets run on headless browsers in an attempt to further blur the lines and make it harder for the bot management system to differentiate between bots and humans. This makes a lot of malicious traffic fall into what we call the gray area — a classification for requests that we can identify as neither clearly human nor clearly bot.

The fine line between false positives and true positives

Sophisticated attackers understand how bot management products work, and they try to adjust their attack traffic so it falls into the gray area as often as possible. The defender’s job is to tune the bot management policy so that the gray area is as small as possible.

However, the digital ecosystem is constantly changing, with new devices and software released on a regular basis — and bot operator attack strategies are ever-evolving, too. That makes minimizing the gray area an ongoing battle.

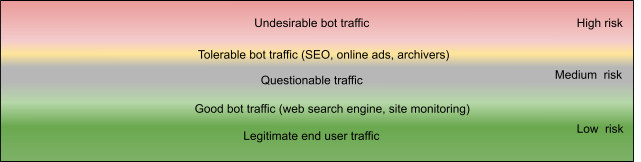

The relative proportion of human, undesired bot, desired bot, and gray area traffic may vary depending on two factors: (1) how well-tuned the bot management solution is, and (2) how sophisticated the attacker is. In Figure 1:

- The red band represents high-risk undesirable bot traffic, such as scraper bots.

- The orange band represents tolerable bot traffic, like SEO bots, web archivers, and online advertising bots. (Note: Akamai Bot Manager classifies them as “known bots” within our library of 17 Akamai Bot Categories because they typically self-identify in the HTTP headers, but the notion of what constitutes “tolerable” or “good” largely depends on the context of your business.)

- The green band represents “premium,” low-risk, legitimate end-user traffic. This band also includes some good bot traffic necessary for optimal site operation, such as web availability and performance monitoring services, and search engines that make content visible and accessible to potential customers.

- The gray band represents the medium-risk questionable traffic that includes anomalies common to both legitimate and undesirable bot traffic.

In this example, the gray band is fairly broad, likely because an advanced persistent attack is in progress and the bot management policy may not be adequately tailored to the threat.

Fig. 1: A site with a large amount of medium-risk questionable traffic

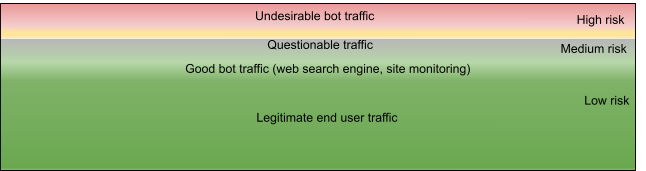

For a site that sees more moderate attack traffic or has a well-tuned policy, it’s usually easier to clearly distinguish the bot traffic (undesirable and tolerable) from the legitimate traffic — and the gray area between legitimate traffic and bot traffic is much narrower (Figure 2).

Fig. 2: A site with a smaller amount of medium-risk questionable traffic due to a well-tuned bot management policy

In both cases, the gray area remains and appropriate action is necessary to defend against illegitimate traffic without impacting legitimate users.

Building and applying a response strategy layer

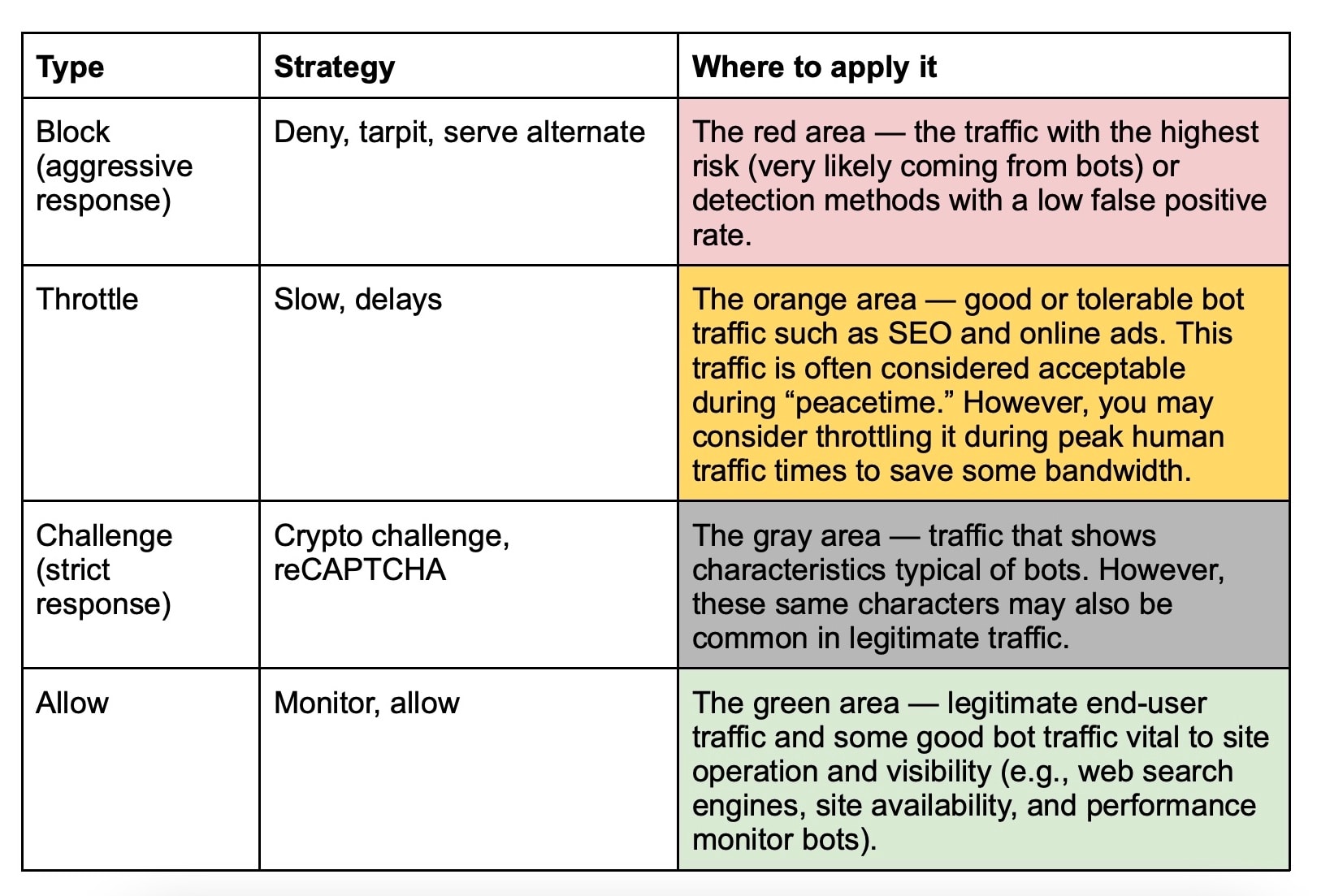

To handle the diversity of request activity and the varying level of risk it carries, companies need a strong response strategy that mitigates bot activity. Akamai has developed a broad variety of response strategies for various scenarios. Table 1 illustrates the different types of response strategies available and the category of traffic to which each should be applied.

Table 1: The different types of response strategies available for different categories of traffic

When you use Bot Manager to protect your most critical endpoints, such as account creation and login, Akamai recommends leveraging the Bot Score to classify traffic as low-, medium-, or high-risk. You can reference the Akamai bot categories to understand what we refer to in this article as “tolerable bot” traffic.
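The idea of mapping a score to a risk tier and then to a response can be sketched as follows. This is an illustration only: it assumes a score from 0 to 100 where higher means more bot-like, and the two thresholds, the `classify` and `respond` helpers, and the allow/deny actions are hypothetical. Only the pairing of medium-risk (gray area) traffic with a challenge comes from this article.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical thresholds; in practice these are tuned per endpoint.
CHALLENGE_THRESHOLD = 40
DENY_THRESHOLD = 80

# Assumed mapping from risk tier to response action.
ACTIONS = {Risk.LOW: "allow", Risk.MEDIUM: "challenge", Risk.HIGH: "deny"}


def classify(score: int) -> Risk:
    """Map an assumed 0-100 bot score to a risk tier."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= DENY_THRESHOLD:
        return Risk.HIGH
    if score >= CHALLENGE_THRESHOLD:
        return Risk.MEDIUM
    return Risk.LOW


def respond(score: int) -> str:
    """Return the response action for a request with the given score."""
    return ACTIONS[classify(score)]


for s in (10, 55, 95):
    print(s, classify(s).value, respond(s))
```

Keeping the thresholds separate from the action mapping makes it possible to tighten or relax the gray area without touching the response logic.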

Challenging the gray area requests

The challenge strategy is key for dealing with the gray area. At Akamai, we’ve developed a challenge framework that currently offers two strategies: the Google reCAPTCHA challenge and the Akamai crypto challenge.

The challenge framework integrates seamlessly and doesn’t require any back-end changes to your website’s code. You can fully manage the user experience and the front-end challenge page within the Akamai security configuration, and even brand and style the challenge window or overlay layout with a custom message.

To set up the reCAPTCHA challenge, you need to open an account with Google reCAPTCHA, configure the reCAPTCHA action in your Bot Manager policy, and apply it to the relevant protected endpoint. The crypto challenge developed by Akamai evaluates the client machine’s ability to execute complex cryptographic operations, which puts the burden of proof on bots, not humans. The challenge may take a few seconds to complete, and users see a countdown until the operation finishes.
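The details of the crypto challenge are not described here, so the sketch below shows a generic hash-based proof of work instead, a well-known technique with a similar cost profile. It is not Akamai’s implementation. The function names, the 16-byte nonce, the use of SHA-256, and the difficulty value are all assumptions chosen for illustration.

```python
import hashlib
import os


def issue_challenge() -> bytes:
    """Server side: generate a random nonce for one client."""
    return os.urandom(16)


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the start of a digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def verify(nonce: bytes, counter: int, difficulty: int) -> bool:
    """Server side: a single hash is enough to check a solution."""
    digest = hashlib.sha256(nonce + counter.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty


def solve(nonce: bytes, difficulty: int) -> int:
    """Client side: brute-force a counter until the digest is small enough."""
    counter = 0
    while not verify(nonce, counter, difficulty):
        counter += 1
    return counter


nonce = issue_challenge()
answer = solve(nonce, difficulty=12)  # about 2**12 hashes on average
print(verify(nonce, answer, difficulty=12))
```

The asymmetry is the point of the design: verifying costs one hash, while solving costs many. A single visitor barely notices the delay, but an operator sending requests at scale pays that cost on every request.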

A secondary detection layer

The challenge action is like a secondary detection layer, giving us an opportunity to further evaluate web traffic and either confirm that it’s suspicious or realize we’re potentially dealing with a legitimate user. Ultimately, each challenge strategy is designed to prevent bots from accessing protected resources while allowing any legitimate users who got caught initially in the gray area to proceed.

The challenge action also allows you to adopt a stricter detection strategy to proactively defend against the most advanced botnets. At the same time, it reduces the need for tuning to adjust for false positives or false negatives when using the block action.
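A toy comparison can make this trade-off concrete. The sketch below counts false positives and false negatives for a block-only policy at two thresholds and for a policy that challenges the gray area. Everything in it is hypothetical: the 0 to 100 score, the thresholds, the five sample requests, and the simplifying assumption that humans always solve the challenge while bots never do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    score: int              # hypothetical 0-100 bot score
    is_bot: bool            # ground truth, known only in this toy example
    solves_challenge: bool  # simplification: humans pass, bots fail


def block_only(req: Request, deny_at: int) -> bool:
    """Return True if the request is served under a block-only policy."""
    return req.score < deny_at


def with_challenge(req: Request, challenge_at: int, deny_at: int) -> bool:
    """Return True if the request is served when the gray area is challenged."""
    if req.score >= deny_at:
        return False
    if req.score >= challenge_at:
        return req.solves_challenge
    return True


def errors(requests, policy):
    """Return (false_positives, false_negatives) for a policy."""
    fp = sum(1 for r in requests if not r.is_bot and not policy(r))
    fn = sum(1 for r in requests if r.is_bot and policy(r))
    return fp, fn


traffic = [
    Request(10, False, True),   # clear human
    Request(55, False, True),   # human caught in the gray area
    Request(60, True, False),   # bot in the gray area
    Request(65, True, False),   # bot in the gray area
    Request(95, True, False),   # obvious bot
]

lenient = errors(traffic, lambda r: block_only(r, deny_at=90))
strict = errors(traffic, lambda r: block_only(r, deny_at=50))
challenged = errors(traffic, lambda r: with_challenge(r, 50, 90))

print("block only, lenient:", lenient)
print("block only, strict:", strict)
print("challenge gray area:", challenged)
```

With blocking alone, lowering the threshold trades false negatives for false positives. Challenging the gray area lets the stricter threshold apply without denying the human at score 55. Real traffic is messier than this, since some bots can solve challenges and some humans abandon them.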

Upgrade your bot manager policy with cutting-edge tools

If you haven’t already done so, make Bot Score and the crypto challenge part of your bot management strategy. Implementing Bot Score is simple, and it only takes a few minutes to configure the challenge strategy to protect your most critical endpoints using the Akamai Control Center. Use Akamai Bot Manager to defend against bot attacks with a sophisticated management policy.

Learn more

For more details, please contact your professional services representative.