The Web Scraping Problem, Part 2: Use Cases that Require Scraping

Good bots

Bot activity is considered “good” when its operator has positive intent and the activity does not harm site performance, availability, reputation, or revenue. Good bots support smooth website operations: monitoring services track site performance and availability, web search engines make content easy for potential customers to find, search engine optimization (SEO) bots help improve site positioning and ranking, and online advertising bots help attract customers to the site.

Today, good bot traffic represents 27.5% of all web scraping activity. Of course, this ratio may vary depending on the content's value and the company's market position.

Good bot traffic trend

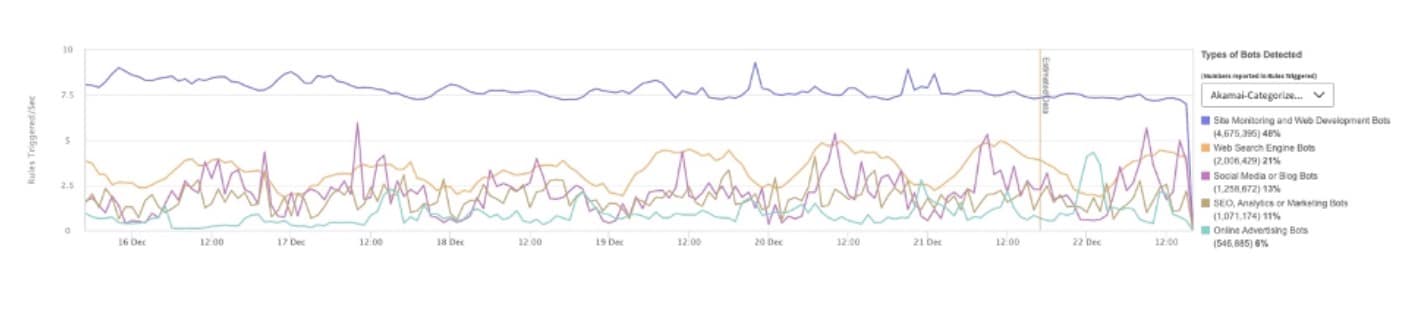

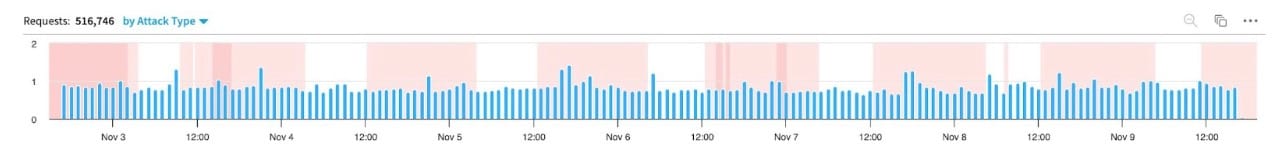

Figure 1 shows good bot traffic trends on an airline’s website. Site availability and performance are essential to airline operations and, more generally, to any business-critical website that generates millions in revenue.

Consequently, bot traffic from site monitoring services (in purple) is significant but usually only targets a handful of pages on the site. Next comes traffic from web search engines and social media sites in yellow and pink, respectively. The other bots identified in the figure are SEO (gold) and online advertising bots (aqua).
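A breakdown like the one in the figure can be approximated by grouping requests on their declared user agent. The following is a minimal sketch, not the method used to produce the figure: the `BOT_CATEGORIES` table and both function names are hypothetical, and the tokens are only a small illustrative sample of publicly documented crawler names. Because the User-Agent header is supplied by the client and can be spoofed, a real classification would need additional verification.

```python
from collections import Counter

# Hypothetical lookup table: bot category -> substrings that commonly
# appear in the User-Agent header of well-known bots (illustrative only).
BOT_CATEGORIES = {
    "site monitoring": ("pingdom", "uptimerobot"),
    "online advertising": ("adsbot-google", "mediapartners-google"),
    "web search engine": ("googlebot", "bingbot", "baiduspider", "duckduckbot"),
    "social media": ("facebookexternalhit", "twitterbot", "linkedinbot"),
    "seo": ("ahrefsbot", "semrushbot"),
}


def classify_user_agent(user_agent: str) -> str:
    """Return the first category whose token appears in the user agent."""
    ua = user_agent.lower()
    for category, tokens in BOT_CATEGORIES.items():
        if any(token in ua for token in tokens):
            return category
    return "unclassified"


def traffic_by_category(user_agents) -> Counter:
    """Count requests per bot category from an iterable of user agents."""
    return Counter(classify_user_agent(ua) for ua in user_agents)


if __name__ == "__main__":
    sample_log = [
        "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
        "facebookexternalhit/1.1",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    ]
    for category, hits in traffic_by_category(sample_log).items():
        print(category, hits)
```

Plotting these counts per time bucket would give a rough equivalent of the stacked trend in the figure.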

Web search engine preferences

Although scraping activity from web search engines is universally accepted and critical to the success of ecommerce sites, the preferences of website owners and users vary. Some website owners have specific preferences regarding the web search engines that they allow; most of them broadly accept Googlebot, Bingbot, and Baidu. Other regional web search engines, such as Yandex (Russia) and 360Spider (China), are not always readily accepted in North America and Western Europe.

Some users (and potential customers) are wary of web search engines operated by large corporations because of internet privacy concerns and may prefer alternatives like DuckDuckGo. Restricting the scraping activity of certain web search engines, however, may limit a website’s audience and opportunities to attract new customers.
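One common way for a site owner to state such preferences is a robots.txt file, which the article does not discuss but which well-behaved crawlers consult before fetching pages. The sketch below uses Python's standard `urllib.robotparser` to evaluate a hypothetical policy; the rules, the `may_crawl` helper, and the `shop.example` URLs are assumptions made for illustration. Note that robots.txt is advisory: it expresses a preference and does not stop a scraper that chooses to ignore it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: welcome two search engines, refuse a third,
# and keep every other crawler out of the checkout pages.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: Bingbot
Allow: /

User-agent: Yandex
Disallow: /

User-agent: *
Disallow: /checkout/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_crawl(bot_name: str, url: str) -> bool:
    """Return True if the policy above allows bot_name to fetch url."""
    return parser.can_fetch(bot_name, url)


if __name__ == "__main__":
    product_page = "https://shop.example/products/42"
    for bot in ("Googlebot", "Bingbot", "YandexBot", "DuckDuckBot"):
        print(bot, may_crawl(bot, product_page))
```

A policy like this illustrates the trade-off described above: each crawler that is refused is one fewer channel through which potential customers can find the site.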

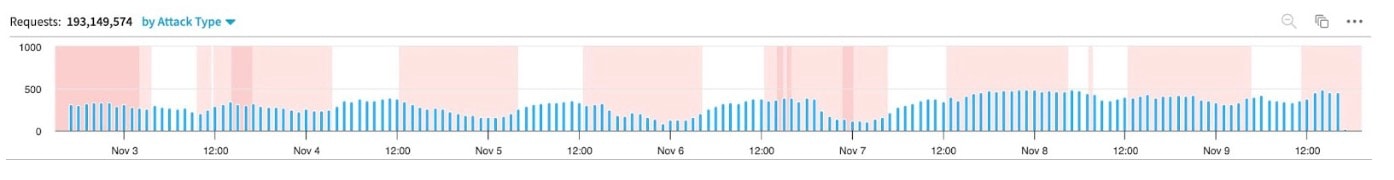

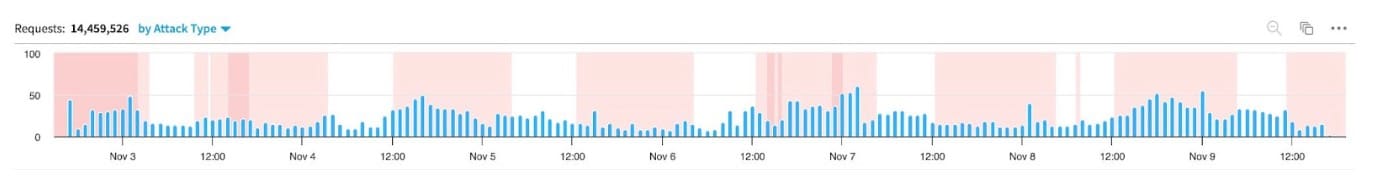

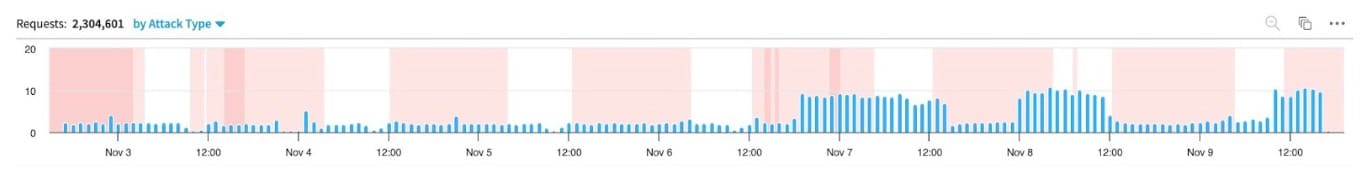

Figures 2 to 5 show activity from the most popular web search engines on the same popular ecommerce site. As you can see, the volume of activity and the botnet's behavior may vary depending on the search engine.

Inventory hoarding and scalping

Scalpers or inventory hoarders scrape websites in search of popular discounted items or items in low supply. For example, the global COVID-19 pandemic saw a shortage of GPUs. Scalpers searched the internet to find GPU inventory, automatically acquiring it when found. Since price is driven by supply and demand, scalpers can make a good profit by reselling the items on marketplaces like Amazon and eBay.

A similar phenomenon involves hype events — when a shoe company releases a limited-edition pair of sneakers, for example, or when a ticket company releases seats for a world-famous pop star’s concert. Scalpers will either design and build their own scraper, or use one of the many open source or subscription-based scraping services available on the market. The sophistication of the botnet will depend on the skill set of the developer or operator.

Figure 6 describes the scalping process and outlines how scraping helps scalpers achieve their goals.

Fig. 6: The scalper lifecycle

Step 1: The scalper develops a botnet that targets popular ecommerce sites; sites may be scraped as often as once a day.

Step 2: The collected data is analyzed based on certain criteria. The analysis provides a potential shopping list of discounted products that can be resold at a profit.

Step 3: The scalper reviews the list and buys the discounted products on the relevant site. This step may be automated or manual.

Step 4: The scalper puts the new product up for sale at a markup on a marketplace like eBay.

Step 5: Once the product is sold on eBay, the scalper pockets the difference.
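To make the analysis in Step 2 concrete, the sketch below filters already-collected listings down to items whose expected resale margin clears a threshold. It is only an illustration of the reasoning, which helps explain why discounted and low-supply items attract the most scraping. The `Listing` fields, the `fee_rate` and `min_profit` parameters, and their default values are all assumptions; the article does not specify which criteria scalpers actually use.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    name: str
    retail_price: float   # price observed on the scraped ecommerce site
    resale_price: float   # assumed going price on a resale marketplace
    in_stock: bool


def expected_profit(listing: Listing, fee_rate: float = 0.10) -> float:
    """Resale proceeds after an assumed marketplace fee, minus the purchase price."""
    return listing.resale_price * (1 - fee_rate) - listing.retail_price


def shopping_list(listings, min_profit: float = 50.0):
    """Return (name, profit) pairs for in-stock items, most profitable first."""
    profitable = [
        (item.name, round(expected_profit(item), 2))
        for item in listings
        if item.in_stock and expected_profit(item) >= min_profit
    ]
    return sorted(profitable, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    collected = [
        Listing("GPU", 500.0, 800.0, True),
        Listing("Sneakers", 200.0, 230.0, True),
        Listing("Console", 400.0, 700.0, False),
    ]
    print(shopping_list(collected))
```

The wider the gap between the retail price and the resale price, the higher an item ranks, so items in short supply rise to the top of the list.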

Business intelligence

Retail and travel companies like to monitor their competition to compare their product positioning and pricing strategy with those of their competitors. Before the internet, companies would have to physically visit the competing store to check their catalogs, product positioning, and pricing, and then return with the intel to adjust their own product strategy.

Today, when companies want to understand consumer sentiment and preferences, they turn to comments left on product review boards or social media platforms such as X (formerly Twitter), Facebook, and Instagram.

The internet and machine learning algorithms have allowed competitors to automate and accelerate this process, enabling a more competitive and dynamic market. Manually collecting and analyzing data is no longer feasible, making botnets the ideal tool for the job.

Do-it-yourself model

Companies can build their own business intelligence systems, but doing so requires a team of expert developers and data scientists, both to create a scraper that can defeat the bot management solutions protecting the targeted site and to develop the complex models that extract valuable intelligence.

The process can be complex and time-consuming, and the team may need to constantly update their software to adapt to whatever bot management solution is protecting the targeted site. Scrapers in this category are generally less sophisticated — easier to detect and ultimately defeat.

Commercial offerings

Alternatively, companies can turn to a data extraction service provider to supply them with business intelligence. Demand for online data is so significant that a whole industry is dedicated to harvesting it and extracting competitive intelligence for digital commerce sites. These well-funded companies use scrapers to obtain the data they need from the internet.

Because the developers and data scientists working for these companies tend to be more skilled, the botnets are generally more sophisticated, more challenging to detect, and more persistent.

A few companies that offer such services include:

ScrapeHero: Based in the United States, this provider offers services for price monitoring, web crawling, sales intelligence, and brand monitoring. Their customers include Fortune 500 companies and the top five global retailers.

Zyte: This provider, based in the United States and Ireland, offers scraping and data extraction services. You can take advantage of their bot detection technology or buy the data they already scrape regularly for a monthly fee.

Outsource BigData: This provider, located in the United States, Canada, India, and Australia, offers web scraping and data labeling services.

Bright Data: Based in Israel and the United States, Bright Data provides scraping and data extraction services. They also operate a vast network of proxy servers to facilitate scraping operations.

Data collection and intel extraction workflow

From a web security point of view, we like to portray the parties we defend against as the “bad guys” — in this case, the party initiating the scraping activity. (This includes those that don’t fit the typical “hacker” profile, like the data extraction service providers we listed in the previous section.)

Everyone wants data from other sites, but not everyone is willing to share. Third-party data extraction companies make that data accessible, and web security companies that offer bot management products prevent that activity as much as possible.

Figure 7 shows the business intelligence lifecycle when a data extraction company is used.

Fig. 7: The business intelligence lifecycle

Step 1: A company (such as an online retailer) works with the data mining company to define the scope of the analysis, the type of data, and the targeted competition.

Step 2: The data mining company sets its botnet to scrape the relevant websites. The scraper may also target social media to collect consumer comments and opinions on various products or on the company itself.

Step 3: The collected data is stored and analyzed through complex machine learning models. The data from online retailers is used for competitive analysis, while the data from social media offers insight into consumer sentiment.

Step 4: The resulting business intelligence is stored, ready for distribution to the company that originally scoped the work.

Step 5: Each company may use the intelligence to optimize its product positioning and/or pricing to attract more customers to its site and increase sales.

Step 6: Other ecommerce companies interested in the same data may purchase a copy of the intelligence.

Step 7: In that case, the data mining company distributes a copy of the report to the second customer.
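The competitive analysis part of this workflow can be illustrated with a much simpler stand-in than the machine learning models mentioned above. The sketch below compares a retailer's own prices with the median of the competitor prices collected for the same product. The function name, the input shapes, and the choice of the median as the market reference are assumptions made for illustration, not a description of any provider's actual pipeline.

```python
from statistics import median


def price_positioning(own_prices: dict, competitor_prices: dict) -> dict:
    """Compare each own price to the median competitor price for that product.

    own_prices maps product -> price; competitor_prices maps
    product -> list of prices collected from competing sites.
    """
    report = {}
    for product, own in own_prices.items():
        observed = competitor_prices.get(product)
        if not observed:
            continue  # no competitor data was collected for this product
        market = median(observed)
        gap_pct = round((own - market) / market * 100, 1)
        report[product] = {
            "own": own,
            "market_median": market,
            "gap_pct": gap_pct,  # positive means priced above the market
        }
    return report


if __name__ == "__main__":
    own = {"headphones": 120.0, "keyboard": 80.0, "mouse": 25.0}
    scraped = {"headphones": [100.0, 110.0, 90.0], "keyboard": [80.0, 100.0]}
    for product, row in price_positioning(own, scraped).items():
        print(product, row)
```

A report of this kind is what would let a retailer decide whether to adjust pricing, and it is only as fresh as the most recent scrape, which is one reason the collection runs repeatedly.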

Protect your data from botnets

As we’ve seen, much of today’s internet traffic is driven by scrapers and the third-party data extraction companies that make them possible. Akamai Content Protector, the next generation of scraper protection, detects and defends against most scraping activity online — even as attack strategies evolve in complex ways. It’s been tested with major online brands, including those with persistent scraping challenges.

Amid today’s most unpredictable threats, the right protection goes a long way. When it comes to botnets and beyond, Akamai makes all the difference — and enables a foundation for sustainable success, right from day one.