Sharing Is (Not) Caring: How Shared Credentials Open the Door to Breaches

Introduction

Whether via a banking login portal, open source software, or even the operating system itself, modern reliance on technology has made both tech professionals and the general public beholden to other people's code. The need for consistency and practicality has brought us things like code libraries, since writing every piece of code from scratch isn't scalable.

The more complex environments become, the more important it is to find places where you can automate and reuse tools already available to you.

However, when developers build their own code on top of these pre-existing libraries, they create “trust issues”: they must extend a level of trust that a security professional might consider risky. The deeper in the code a vulnerability hides, the harder it is to find, and that’s assuming you know to look for that digital needle in the haystack in the first place.

We encountered an example of these so-called trust issues in our own development process. A seemingly routine use of one of our external services ultimately led to the discovery of several severe attack opportunities. We disclosed our findings to the vendor, and the identified issues have since been addressed. In this blog post, we share what we found, detail how we found it, and discuss ways attackers could potentially use it to their advantage.

Let’s take a look

During a routine optimization test, one of our DevOps engineers created a new container for a third-party testing tool. He executed the very familiar command: apt-get update && apt-get install XXXX -y.

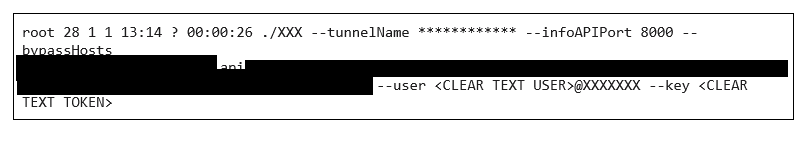

After entering the command, we wanted to see what was being executed on our endpoint while the creation process was running. To do so, we ran ps, the simple process-listing command, on our endpoint and came across a very interesting line: a process whose command line contained a username and key in clear text.

We were able to use the identified credentials to authenticate to a site that contained hundreds of customer builds.
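The credentials surfaced because ps displays every process's full command line. On Linux, ps reads this from /proc/<pid>/cmdline, which by default is readable by other local users, so any secret passed as an argument is effectively public on that host. The following is a minimal sketch of how a defender might scan running processes for credential-looking arguments. The flag names and the user:pass@host URL pattern are our own illustrative assumptions, not the actual format of the line we observed.

```python
import os
import re
from typing import Iterator, List, Tuple

# Heuristic patterns for credential-looking arguments. These are illustrative
# assumptions, not the actual format of the line we observed.
FLAG_PATTERN = re.compile(
    r"^--?(?:token|key|api[-_]?key|secret|password|passwd|auth(?:token)?)(?:=(.+))?$",
    re.IGNORECASE,
)
URL_CREDS_PATTERN = re.compile(r"://[^/\s:@]+:[^/\s@]+@")  # scheme://user:pass@host


def find_secret_args(argv: List[str]) -> List[str]:
    """Return the arguments in argv that appear to carry a secret."""
    hits = []
    for i, arg in enumerate(argv):
        m = FLAG_PATTERN.match(arg)
        if m:
            if m.group(1):  # --token=VALUE form
                hits.append(arg)
            elif i + 1 < len(argv):  # --token VALUE form
                hits.append(f"{arg} {argv[i + 1]}")
        elif URL_CREDS_PATTERN.search(arg):
            hits.append(arg)
    return hits


def scan_proc(proc_root: str = "/proc") -> Iterator[Tuple[int, List[str]]]:
    """Yield (pid, suspicious args) for each readable process (Linux only)."""
    if not os.path.isdir(proc_root):
        return
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as f:
                raw = f.read()
        except OSError:
            continue  # process exited or is not readable
        # cmdline stores arguments separated by NUL bytes.
        argv = [a.decode(errors="replace") for a in raw.split(b"\0") if a]
        hits = find_secret_args(argv)
        if hits:
            yield int(entry), hits


if __name__ == "__main__":
    for pid, hits in scan_proc():
        print(pid, hits)
```

Keeping the matching logic in a pure function (find_secret_args) makes it easy to test against sample argument lists without touching /proc.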

A clear-text key is alarming on its own, but the risk could have been contained by proper user control; for example, if each token were unique to a single user of the app. That was clearly not the case here: the provided username contained the string “default”, which is never a good sign.

We decided to dig a little deeper and tried to use the credentials to authenticate to the API, which allowed us to query potentially sensitive information, and a lot of it. It turned out that the “default” user was very default indeed: it was not dedicated to Akamai’s use, but was shared by the application’s entire client base.
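The “proper user control” that was missing here usually means binding each credential to a single customer and checking that binding on every request. Below is a minimal sketch of that idea using HMAC-signed, tenant-scoped tokens. The token layout, the tenant names, and the signing-key handling are hypothetical illustrations, not the vendor’s design or its eventual fix.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side signing key; a real service would load this from a
# secrets manager rather than generating it at import time.
SERVER_KEY = secrets.token_bytes(32)


def _mac(tenant_id: str, nonce: str) -> str:
    return hmac.new(SERVER_KEY, f"{tenant_id}.{nonce}".encode(), hashlib.sha256).hexdigest()


def issue_token(tenant_id: str) -> str:
    """Issue a token bound to one tenant, shaped '<tenant>.<nonce>.<mac>'.
    Assumes tenant IDs contain no '.' characters."""
    nonce = secrets.token_hex(8)
    return f"{tenant_id}.{nonce}.{_mac(tenant_id, nonce)}"


def authorize(token: str, requested_tenant: str) -> bool:
    """Allow access only if the token is authentic AND was issued to requested_tenant."""
    parts = token.split(".")
    if len(parts) != 3:
        return False
    tenant_id, nonce, mac = parts
    # Constant-time comparison of the presented and expected MACs.
    if not hmac.compare_digest(mac.encode(), _mac(tenant_id, nonce).encode()):
        return False
    return tenant_id == requested_tenant


acme_token = issue_token("acme")
print(authorize(acme_token, "acme"))    # True: the customer's own builds
print(authorize(acme_token, "globex"))  # False: another customer's builds
```

A shared “default” credential is the degenerate case in which every customer holds the same token, so a check like authorize can no longer tell one customer’s data from another’s.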

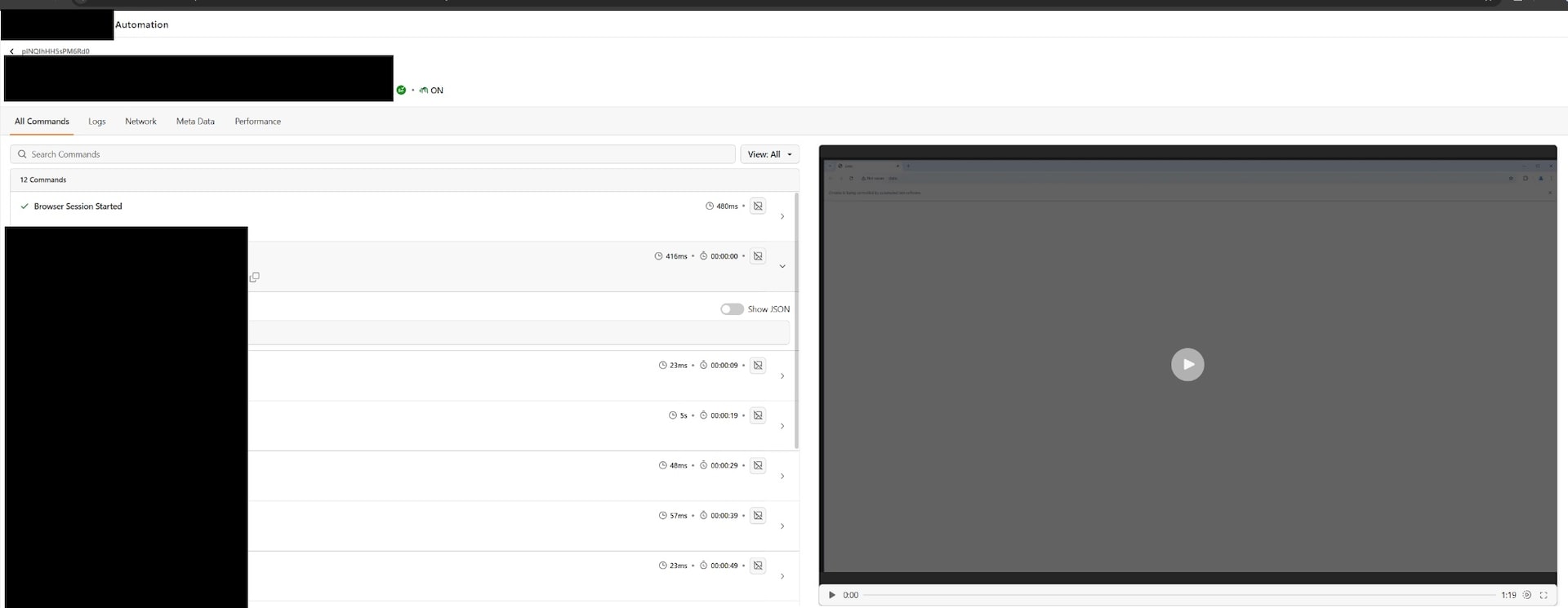

With this plaintext secret, any attacker could retrieve sensitive data, such as internal test run results, video recordings, screenshots, and internal script execution flows, for most of the application’s customers (Figure 1).

The sheer amount of exposed data, and the fact that the vulnerability sat in plain sight, made us want to look further into the vendor’s codebase and see what else we could find.

Sharing is not always caring

After identifying a shared secret that the application was severely misusing, we set out to find other such secrets. After a few well-placed searches (and a lot of prettifying) inside the application’s source code, we came across three additional secrets:

- A Coralogix private key

- A Google API key

- An ngrok token
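Searches like these can be partly automated. The following is a minimal sketch of a regex-based scan over (possibly minified) source text. The Google API key pattern reflects the well-known “AIza” prefix plus 35 URL-safe characters; the second rule is a generic heuristic we are assuming for illustration, and it does not model the specific Coralogix or ngrok token formats.

```python
import re
from typing import List, Tuple

# Google API keys have a well-known shape: "AIza" plus 35 URL-safe characters.
GOOGLE_API_KEY = re.compile(r"AIza[0-9A-Za-z\-_]{35}")

# Generic heuristic (an assumption, not a vendor-specific format): an identifier
# that sounds like a secret, assigned a quoted string of 8+ characters.
# Works on minified code such as {google_secret:"..."} as well as a = '...'.
ASSIGNED_SECRET = re.compile(
    r"""(?i)\b([\w$]*(?:secret|private_?key|token|api_?key)[\w$]*)\s*[:=]\s*["']([^"']{8,})["']"""
)


def scan_source(text: str) -> List[Tuple[str, str]]:
    """Return (label, value) pairs for strings that look like embedded secrets."""
    findings = [("google_api_key", m.group(0)) for m in GOOGLE_API_KEY.finditer(text)]
    findings += [(m.group(1), m.group(2)) for m in ASSIGNED_SECRET.finditer(text)]
    return findings
```

Heuristics like the second rule trade precision for recall, so every hit still needs a human to confirm what the value actually is, much as we had to piece together which service each secret belonged to.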

Let’s examine each of these secrets and their potential for abuse.

Coralogix: A (very public) private key

One of the secrets we identified in the application’s codebase was a private key. This piqued our interest, so we searched for any username that might be linked to it, and found one a few lines below the key, as part of a logging function. Other clues left behind in the code showed that the private key belongs to the Coralogix logging framework.

The credentials were hardcoded, which means they were also shared by different customers. This could have meant another data leak, but fortunately this user (and therefore any attacker holding the key) had low privileges and was only allowed to write log messages into the framework.

While not as strong as the read access described earlier, a write primitive can still open some interesting vectors against the vendor itself: an attacker can insert spoofed log messages into the vendor’s environment, or inject malicious log entries in an attempt to perform attacks such as cross-site scripting (XSS) or Structured Query Language (SQL) injection against the systems that later store or display those logs.
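On the receiving side, the standard defenses against these log-borne attacks are to treat every log message as untrusted data: neutralize line breaks to prevent forged entries, bind messages as query parameters instead of concatenating them into SQL, and HTML-escape them before display. The following is a generic sketch under those assumptions, using an in-memory SQLite table and a hypothetical HTML log viewer; it does not describe how Coralogix or the vendor actually process logs.

```python
import html
import sqlite3


def neutralize(message: str) -> str:
    """Escape CR/LF so a single write cannot forge additional log lines."""
    return message.replace("\r", "\\r").replace("\n", "\\n")


def store_log(conn: sqlite3.Connection, message: str) -> None:
    # Parameterized query: the message is bound as data, never spliced into the SQL text.
    conn.execute("INSERT INTO logs (message) VALUES (?)", (neutralize(message),))


def render_log_html(message: str) -> str:
    # Escape HTML metacharacters before a web-based log viewer displays the entry.
    return f"<li>{html.escape(message)}</li>"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE logs (id INTEGER PRIMARY KEY, message TEXT)")

# A hostile entry combining log forging, SQL, and script payloads.
payload = "ok\nERROR forged entry'); DROP TABLE logs;--<script>alert(1)</script>"
store_log(conn, payload)

stored = conn.execute("SELECT message FROM logs").fetchone()[0]
print(render_log_html(stored))
```

The payload ends up stored as a single, inert row and is rendered as escaped text rather than as markup.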

Google API key

OAuth is an authorization framework that Google uses across its services and APIs. Applications and sites can let users log in with their Google account through OAuth. To enable this option in code, developers need several parameters from their Google account: namely an API key, a client identifier (google_client), and a client secret (google_secret).

While browsing the code, we found a reference to the Google API. Usually, when we see a Google API key in code, that is all we see, but not this time. Here we found the Google API key, the google_client unique identifier, and (most bafflingly!) the google_secret value. With all three, attackers can request an authentication link from Google as the vendor. That link can then be used in a phishing campaign to trick victims into granting attackers access to their Google Workspace accounts.
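To show why the client identifier and secret matter, here is a minimal sketch of the standard OAuth 2.0 authorization-code flow against Google’s documented endpoints. The authorization URL carries the client ID, so Google’s consent screen names the application registered to that ID (here, the vendor’s); the client secret is what lets its holder exchange the returned code for tokens. The API key is not needed for this particular flow. All client values and the redirect URI below are hypothetical placeholders, and Google only redirects to URIs registered for the client.

```python
from typing import List
from urllib.parse import parse_qs, urlencode, urlparse

AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"
TOKEN_ENDPOINT = "https://oauth2.googleapis.com/token"

# Hypothetical placeholder values; real ones come from a Google Cloud OAuth client.
CLIENT_ID = "1234567890-example.apps.googleusercontent.com"
CLIENT_SECRET = "example-client-secret"
REDIRECT_URI = "https://app.example.com/oauth/callback"


def build_auth_url(scopes: List[str], state: str) -> str:
    """Build the 'Sign in with Google' link; only the client ID is embedded."""
    params = {
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "response_type": "code",
        "scope": " ".join(scopes),  # space-separated list of requested scopes
        "access_type": "offline",   # also request a refresh token
        "state": state,
    }
    return f"{AUTH_ENDPOINT}?{urlencode(params)}"


def token_request_body(code: str) -> str:
    """Form body POSTed to TOKEN_ENDPOINT; note that it requires the client secret."""
    return urlencode({
        "code": code,
        "client_id": CLIENT_ID,
        "client_secret": CLIENT_SECRET,
        "redirect_uri": REDIRECT_URI,
        "grant_type": "authorization_code",
    })


if __name__ == "__main__":
    url = build_auth_url(["openid", "email"], state="example-state")
    print(url)
    # Inspect the query the way Google would receive it.
    print(parse_qs(urlparse(url).query)["client_id"])
```

Because the client secret is meant to stay on the server side of this exchange, finding it in code shipped to customers is what made this discovery so surprising.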

Potential phishing exploitation

Attackers can create a phishing email containing a link to a site that is identical to the vendor’s site with one crucial change: the “Log in with Google” link is the one the attacker generated using the vendor’s Google API details (Figure 2).

By logging in, the victim grants the attacker the permissions requested by that link, which can extend to their entire Google Workspace account. With access to something as sensitive as a Google identity, an attacker’s possibilities are vast: successful exploitation could allow an attacker to manipulate almost any aspect of the victim’s Google Workspace account, from compromising email to downloading files. This could be very useful as part of a social engineering attack on an organization.
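How much a victim hands over depends on the scopes encoded in the link’s scope parameter, which anyone can decode before clicking. Below is a minimal sketch of how a defender might flag broad Google scopes in a suspicious “Sign in with Google” URL. The scope URLs listed are real Google OAuth scopes, but the selection is an illustrative assumption, not a complete risk list.

```python
from typing import Dict
from urllib.parse import parse_qs, urlparse

# A few broad Google OAuth scopes (illustrative selection, not an exhaustive list).
HIGH_RISK_SCOPES = {
    "https://mail.google.com/": "full access to Gmail",
    "https://www.googleapis.com/auth/gmail.readonly": "read all Gmail messages",
    "https://www.googleapis.com/auth/drive": "full access to Google Drive",
    "https://www.googleapis.com/auth/drive.readonly": "read all Google Drive files",
}


def risky_scopes(auth_url: str) -> Dict[str, str]:
    """Return the high-risk scopes requested by an OAuth authorization URL."""
    query = parse_qs(urlparse(auth_url).query)
    # The scope parameter is a space-separated list.
    requested = " ".join(query.get("scope", [])).split()
    return {s: HIGH_RISK_SCOPES[s] for s in requested if s in HIGH_RISK_SCOPES}
```

A link that asks only for openid and email is far less dangerous than one that also requests full Gmail or Drive access, even when both display the same trusted application name.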

ngrok

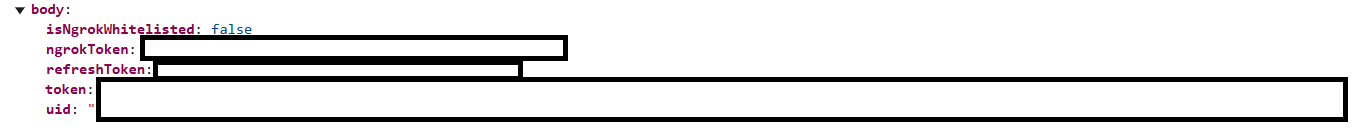

Network tunneling is a nearly universal solution for secure data transfer, and a compromised tunnel can give an attacker a treasure chest of malicious opportunities. We uncovered many tunneling-related parameters in the application’s installation, including an ngrok configuration, which prompted us to investigate further. The same features that foster better developer collaboration are precisely what make tunneling attractive to a threat actor.

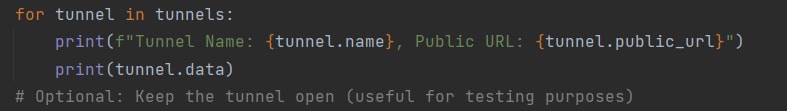

ngrok is a service that tunnels traffic through its own servers. It makes it very simple to expose local services to the internet, which allows faster debugging and more efficient development feedback loops. Unfortunately, if an attacker seizes control of the tunnel, that simplicity is available to them, too.
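That simplicity also means a host can expose services without anyone noticing. While the ngrok agent runs, it serves a local API (by default at 127.0.0.1:4040) whose /api/tunnels endpoint lists active tunnels. Below is a minimal sketch of how an administrator might audit which local addresses a machine is publishing. The default address and the public_url and config.addr field names reflect the agent API as we understand it and should be checked against ngrok’s documentation for the agent version in use.

```python
import json
import urllib.request
from typing import List, Tuple

# Assumed default address of the ngrok agent's local API (configurable in ngrok).
AGENT_API = "http://127.0.0.1:4040/api/tunnels"


def parse_tunnels(payload: str) -> List[Tuple[str, str]]:
    """Map each tunnel's public URL to the local address it forwards to."""
    data = json.loads(payload)
    return [
        (t.get("public_url", ""), t.get("config", {}).get("addr", ""))
        for t in data.get("tunnels", [])
    ]


def list_local_tunnels(url: str = AGENT_API, timeout: float = 2.0) -> List[Tuple[str, str]]:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_tunnels(resp.read().decode())


if __name__ == "__main__":
    try:
        for public_url, addr in list_local_tunnels():
            print(f"{public_url} -> {addr}")
    except OSError as exc:  # urllib's URLError is a subclass of OSError
        print(f"No ngrok agent API reachable: {exc}")
```

Separating parsing from fetching keeps the audit logic testable against a saved JSON response.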

By running a simple ps command, an attacker can view the company’s unique ID and authentication token. This unique ID cannot be changed; it stays the same across every use of the application on other endpoints. Attackers can send the ID and token to the vendor’s online server and receive the company’s ngrok details in return (Figure 3).

This means that, after gaining initial access, attackers can copy the unique ID and token from the victim and use them to read the data that the victim’s application sends and receives (Figure 4).
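Part of the problem is where the token travels. On Linux, a process’s arguments (what ps shows) are readable by other local users by default, whereas its environment in /proc/<pid>/environ is restricted to the process owner and root. Below is a minimal sketch of launching a child process with a secret supplied through the environment instead of argv. The variable name and token value are hypothetical, and this only narrows exposure: the same user, child processes, and crash dumps can still see the value, so a secrets file or manager is stronger still.

```python
import os
import subprocess
import sys

# Hypothetical secret and variable name, used only for illustration.
TOKEN = "example-token-123"

# The child reads the token from its environment and echoes its own arguments.
child_code = "import os, sys; print(os.environ['HYPOTHETICAL_TUNNEL_TOKEN']); print(sys.argv[1:])"

# Risky pattern (not executed): the token would sit in argv, visible to ps:
#   subprocess.run([sys.executable, "-c", child_code, TOKEN])

# Safer pattern: hand the token over in the child's environment instead.
env = dict(os.environ, HYPOTHETICAL_TUNNEL_TOKEN=TOKEN)
result = subprocess.run(
    [sys.executable, "-c", child_code],
    env=env,
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout)
```

The child still receives the token, but no argument in its command line contains it.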

Conclusion

Threats are not exclusively external; in fact, we willingly accept some threats internally. Many people think open source software is the greatest danger because of the trust we place in it, but third-party tools can pose an even greater challenge for companies.

Following our discoveries, we disclosed the issues highlighted in this blog post to the affected vendor, and they were fixed. Even so, new security flaws are always just around the corner.

We believe that in this case, and in many others, security oversight is as important as training developers to have a security mindset. Together, these two practices can help ensure that managed services do not introduce problems for the companies that rely on them.