Managing AI Bots as Part of Your Overall Bot Management Strategy

A version of this blog post was previously published.

Whenever something captures our interest we tend to think of it as completely different from everything that came before — but since most innovations build on past knowledge, we may be underestimating what we already know. Bots that collect the data to train large language models (LLMs) are a good example of this phenomenon.

Many customers ask questions about how to block these artificial intelligence (AI) bots because their organizations are worried about the potential adverse impacts of LLMs having so much of their content. These questions imply that AI bots need to be handled differently, but many companies can (and do) already manage these bots effectively.

The potential adverse impacts of AI bots

Managing AI bots is important across all industries because bots can scrape content to train chatbots and LLMs. Those bots, and other web crawlers, continuously crawl sites looking for new information and then immediately scrape it. This can cause site performance degradation, appearances of an organization’s product or service information in places the organization didn’t authorize, information leakage, and other harmful consequences.

It’s important to think about the bot’s purpose, not its tooling. It may be an AI bot because it is being used by AI, but the bot’s purpose is more critical to categorization. Is the AI bot scraping for news aggregation? Enterprise data aggregation? A media search? Those are all pre-existing known bot categories and AI bots performing those functions can be classified as such.

Identified bots

The good news is that most AI crawlers identify themselves. The vast majority of them use user agents, some complement it with a second factor such as the network data such as list of IPs, dedicated ASN or reverse DNS entries. With these identifiers, bot management companies can include the AI bots in their directories of known bots.

(Some bots, however, intentionally use common headless browsers, making their identification and attribution harder. Nevertheless, identifying them is possible with Bot Manager.)

ChatGPT is an example of a “good netizen.” ChatGPT advertises a clearly defined user agent along with the network data, facilitating easy identification and management, if desired. Its definition, along with dozens of other AI crawlers, is already integrated into Akamai's known bot directory, accessible to all Akamai App & API Protector, Akamai Bot Manager, Akamai Content Protector, and Akamai Account Protector customers.

The ease of impersonation of single-factor–based bots creates a serious issue for some bot management and analytics vendors. Akamai’s known bot directory contains only multi-factor definitions and, as a result, provides reliable identification of the known bots.

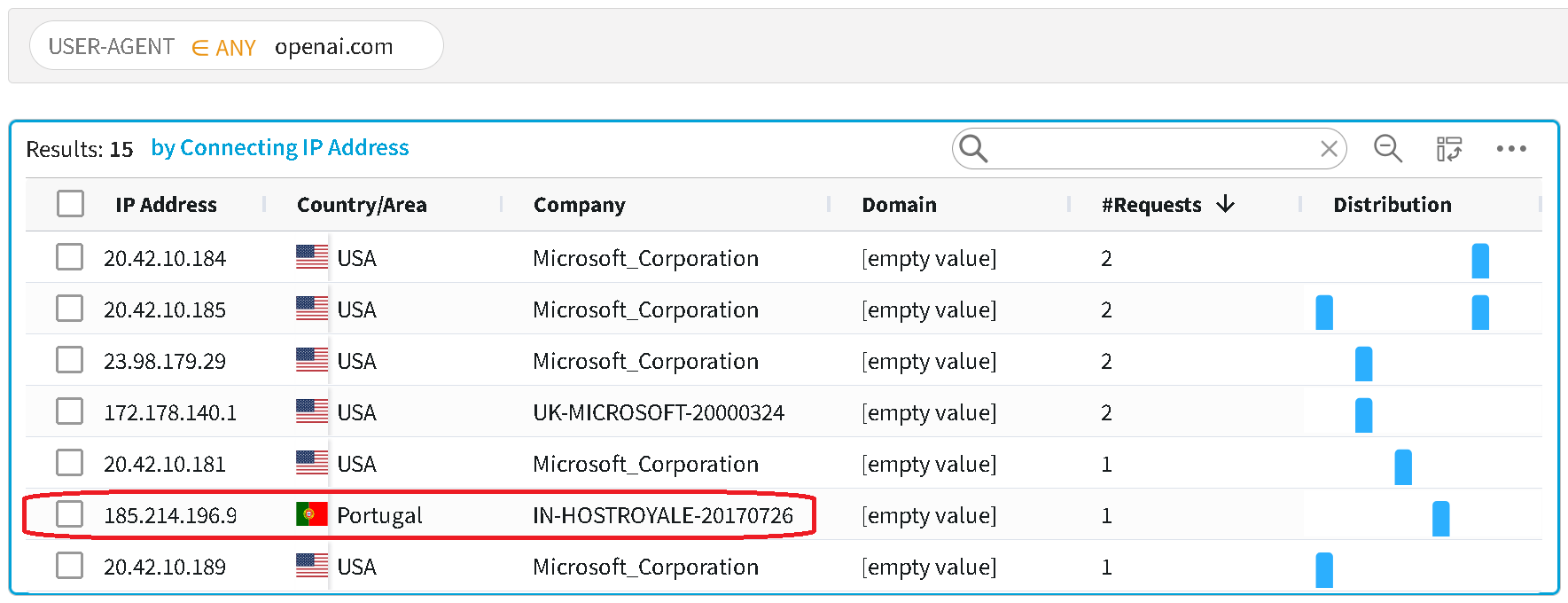

The figure (from Akamai Bot Manager) illustrates how a threat actor tried to impersonate the user agent for the Claude/Anthropic AI bot on akamai.com.

The importance of a bot management strategy

Although it might seem ideal to have a category called “AI bots” (and Akamai has such a category) in a bot directory so you can conveniently manage them all, that approach can pose challenges.

For example, prominent AI chatbots like Google's Gemini and Microsoft Bing’s chatbot, don’t identify as a unique category of bot. Instead these bots use their parent’s existing search engine crawlers for data collection and may be categorized as search engine bots. You could mitigate these easily because they’re known, but blocking them poses the threat of adversely affecting your company's search engine optimization (SEO) standings.

You may still want to mitigate some AI bots within a category, but not the whole category. A good bot management strategy will allow you to take action on one specific bot within a category — for example, you can allow the coupon-scraping bots of companies you work with and block those you don’t.

Beware of inauthenticity

It is worth pointing out that an active mitigation strategy against crawlers will not guarantee that a query to an AI chat engine will ever respond with “We know nothing about that subject because the primary owner of the content is preventing us from crawling their data.” It is quite likely that there will be some data indexed on third-party sites (for example, a competitor) and the LLM will leverage its associative memory and present coherent yet inauthentic responses.

Unidentified bots

Now, let’s look at AI bots that don’t clearly identify themselves. This technically categorizes them as “unknown,” but Bot Manager can still detect them and recognize them as scrapers.

Currently, the industry commonly uses robots.txt (RFC 9309) to manage AI bots by defining directives for crawlers. While major AI-based crawlers follow these rules, some smaller vendors ignore them entirely. Bot Manager allows concerned customers to easily enforce these rules through their security configurations.

Learn more

Read this blog post to learn how to create an effective bot management strategy.