Abusing VBS Enclaves to Create Evasive Malware

Contents

1. Introduction

2. Virtual Trust Levels

3. What are VBS enclaves?

4. Enclave malware

5. Detection

6. Conclusion

7. Acknowledgments

Introduction

Virtualization-based security (VBS) is one of the most fascinating recent security advancements. The ability to isolate critical components of the OS has enabled Microsoft to achieve substantial security improvements with features like Credential Guard and Hypervisor-Protected Code Integrity (HVCI).

One often overlooked feature that is enabled by VBS is VBS enclaves — a technology that allows the isolation of a region of a process, making it inaccessible to other processes, the process itself, and even the kernel.

VBS enclaves can have a wide range of security applications, and Microsoft uses them to implement several notable services, including the controversial Recall feature. Moreover, Microsoft supports third-party development of VBS enclaves and actively promotes their adoption.

While enclaves can help secure systems, they can also be very appealing to attackers — a piece of malware that manages to run inside an enclave can be potentially invisible to memory-based detection and forensics.

We set out to explore VBS enclaves and understand how they could be used for malicious purposes, and this blog post will detail our key findings. We’ll dive into VBS enclaves by exploring previously undocumented behaviors, describing the different scenarios that can enable attackers to run malicious code inside them, and examining the various techniques “enclave malware” can use.

We’ll also introduce Mirage, a memory evasion technique based on a new approach that we dubbed “Bring your own vulnerable enclave.” We’ll explore how attackers can use old, vulnerable versions of legitimate enclaves to implement this stealthy evasion technique.

Virtual Trust Levels

Windows traditionally relied on processor ring levels to prevent user applications from tampering with the OS. This hardware feature enables a separation of the OS from user applications — the kernel runs in ring0, isolated from the ring3 user mode applications. The problem with this approach is that it provides attackers with a relatively easy path to compromise the OS — kernel exploits.

The Windows kernel exposes a very rich attack surface. A huge list of third-party drivers, alongside a wide variety of services exposed by the kernel itself, leads to the development of a seemingly constant stream of kernel exploits. Using such an exploit, an attacker can potentially control every aspect of the OS. The boundary of user/kernel mode was proving to be insufficient.

To close this gap, Microsoft introduced an additional security boundary into the OS in the form of Virtual Trust Levels (VTLs). VTL privileges are based on memory access — each trust level provides entities running under it with different access permissions to physical memory. Among other things, these permissions ensure that lower VTLs cannot access the memory of higher ones.

Similar to the traditional processor ring architecture, VTLs separate the OS into different “modes” of execution, starting from VTL0 and (potentially) going up to VTL15, as the hypervisor architecture allows for up to 16 levels. Unlike processor rings, where ring0 is the most privileged, higher VTLs are more privileged than lower ones.

Windows currently uses two main trust levels: VTL0 and VTL1 (VTL2 is also used, but is out of the scope of this blog post). VTL0 is used to run the traditional OS components, including the kernel and user mode applications. VTL1, which is more privileged than VTL0, creates two new execution modes: secure kernel mode and isolated user mode.

Secure kernel mode

Secure kernel mode refers to the ring0 VTL1 execution mode. This mode is used to run the secure kernel — a kernel that runs in VTL1 and is, therefore, more privileged than the normal kernel. Using these privileges, the secure kernel can enforce policies on the normal kernel and restrict its access to sensitive memory regions.

As we noted, the kernel exposes a large attack surface and is susceptible to compromise. By stripping some privileges from the kernel and granting those privileges to the secure kernel instead, we can reduce the impact of a kernel compromise.

In theory, a compromise of the secure kernel can still allow an attacker to fully compromise the system. Despite that, this scenario is much less likely because the secure kernel is very narrow and does not support any third-party drivers, which creates a significantly reduced attack surface.

Isolated user mode

VTL1 also creates another interesting execution mode called isolated user mode (IUM), which refers to the ring3 VTL1 execution mode. IUM is used to execute Secure Processes — a special type of user mode process that uses the memory isolation capabilities of VTL1. The memory inside IUM cannot be accessed by any VTL0 code, including the normal kernel. This execution mode is the backbone of isolation-based features like Credential Guard.

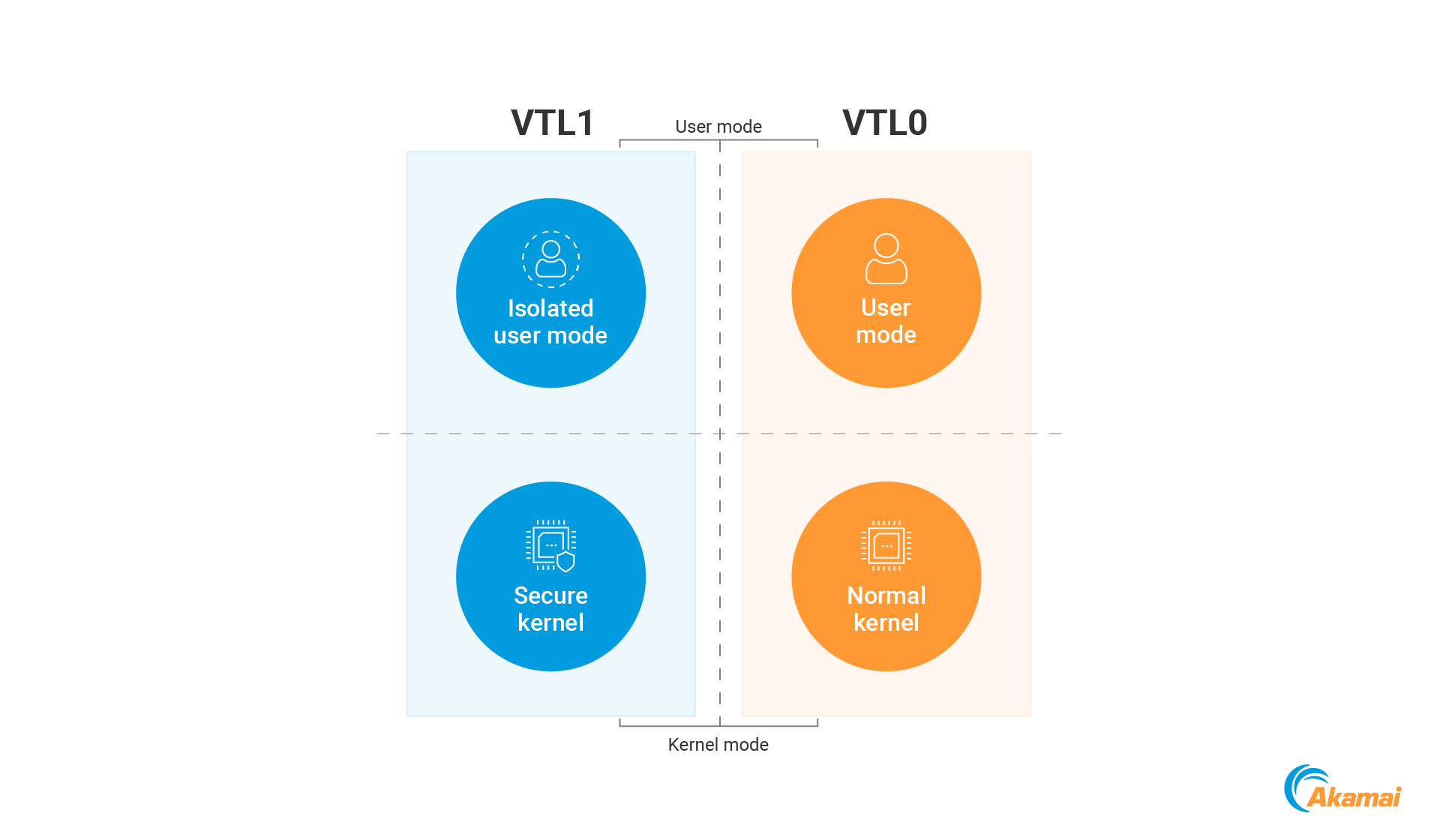

To summarize, the introduction of VTL0/1 results in four execution modes (Figure 1).

- Ring0 VTL0 — Normal kernel mode

- Ring0 VTL1 — Secure kernel mode

- Ring3 VTL0 — Normal user mode

- Ring3 VTL1 — Isolated user mode
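The four modes and the VTL access rule can be captured in a small model. This is only a sketch of the rule as described above (lower VTLs cannot access the memory of higher ones); the names `Ring`, `execution_mode`, and `can_access` are ours and do not correspond to any Windows API.

```python
from enum import IntEnum


class Ring(IntEnum):
    KERNEL = 0
    USER = 3


# Mode names come from the list above; the lookup table is illustrative.
EXECUTION_MODES = {
    (Ring.KERNEL, 0): "Normal kernel mode",
    (Ring.KERNEL, 1): "Secure kernel mode",
    (Ring.USER, 0): "Normal user mode",
    (Ring.USER, 1): "Isolated user mode",
}


def execution_mode(ring: Ring, vtl: int) -> str:
    """Return the execution mode name for a ring/VTL pair."""
    return EXECUTION_MODES[(ring, vtl)]


def can_access(caller_vtl: int, page_vtl: int) -> bool:
    """Simplified rule: code may touch memory of its own VTL or a lower one."""
    return caller_vtl >= page_vtl
```

Note that `can_access` takes no ring argument: in this model, even ring0 code in VTL0 is refused access to ring3 VTL1 memory, which is the property that IUM relies on.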

What are VBS enclaves?

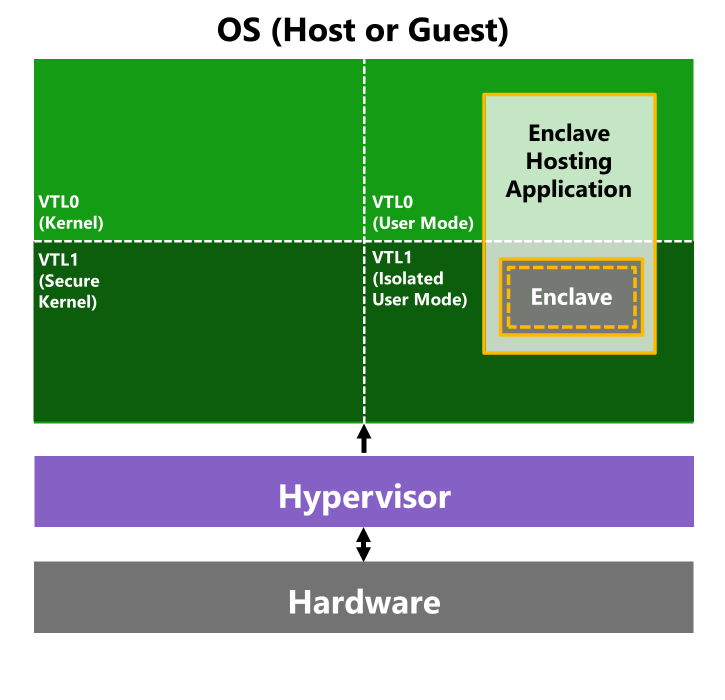

Another feature enabled through IUM is VBS enclaves. A VBS enclave is a section of a user mode process that resides in IUM, into which we can load DLLs called “enclave modules.”

When a module is loaded into an enclave, it becomes a “trusted execution environment” — the data and code inside the enclave are inaccessible to anything running in VTL0 and, therefore, cannot be tampered with or stolen (Figure 2). This feature is useful for isolating sensitive operations from attackers who are able to compromise the system.

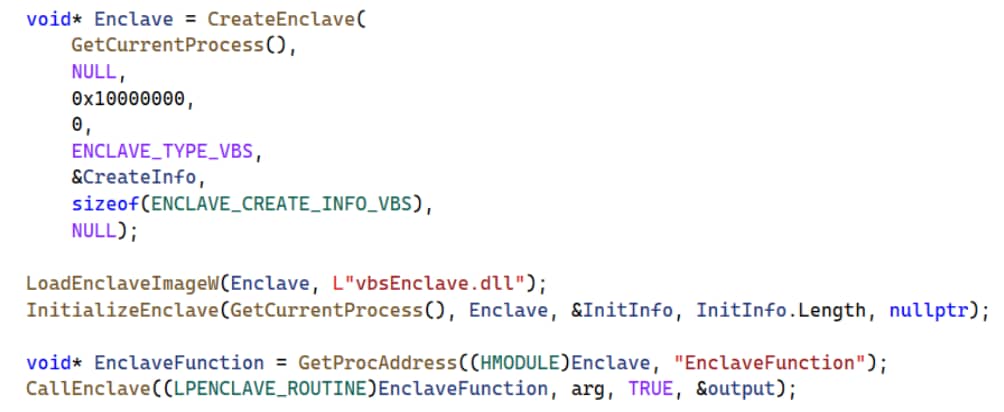

A user mode process can call the dedicated Windows APIs to create an enclave, load a module into it, and initialize it. An enclave module comes in the form of a DLL that was compiled specifically for this purpose. After the enclave is initialized, the hosting user mode process cannot access its memory, and can only interact with it by invoking the functions exported by its modules using the CallEnclave API.

Figure 3 is an example of code implementing this process (a more detailed example is provided by Microsoft).
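To make the host/enclave contract explicit, here is a toy Python model of the lifecycle described above. It is not the Windows API and does not create a real enclave: `ToyEnclave`, `store_secret`, and `check_secret` are hypothetical names, and the model only assumes what the text states (modules are loaded, the enclave is initialized, and afterward the host can only invoke exported routines).

```python
class ToyEnclave:
    """Toy model of the host-side enclave lifecycle. Not the Windows API."""

    def __init__(self):
        self.initialized = False
        self._exports = {}   # routines exported by loaded modules
        self._memory = {}    # stands in for isolated enclave memory

    def load_module(self, exports):
        # Models loading an enclave module before initialization.
        if self.initialized:
            raise RuntimeError("cannot load modules after initialization")
        self._exports.update(exports)

    def initialize(self):
        self.initialized = True

    def host_read(self, key):
        # The host process has no direct view of enclave memory.
        raise PermissionError("enclave memory is not accessible from the host")

    def call(self, name, arg=None):
        # Models CallEnclave: exported routines are the only way in.
        if not self.initialized:
            raise RuntimeError("enclave is not initialized")
        return self._exports[name](self._memory, arg)


def store_secret(memory, value):
    memory["secret"] = value
    return True


def check_secret(memory, guess):
    return memory.get("secret") == guess
```

In this sketch the host can ask the enclave whether a guess matches the stored secret, but it can never read the secret itself, which mirrors the “computational unit” role that enclave modules are meant to play.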

Because Microsoft aims to restrict access to VTL1 as much as possible, loading a DLL into an enclave requires it to be properly signed using a special Microsoft-issued certificate. Any attempt to load a module without such a signature will fail. The option to sign enclave modules is only delegated to trusted third parties. Interestingly, there are no restrictions on who can load these modules — as long as they are signed, any process can load arbitrary modules into an enclave.

Enclave modules are meant to serve as small “computational units,” which have a very limited ability to interact with or affect the system. Because of this, they are limited to using a minimal set of APIs, which prevents them from accessing most of the OS components.

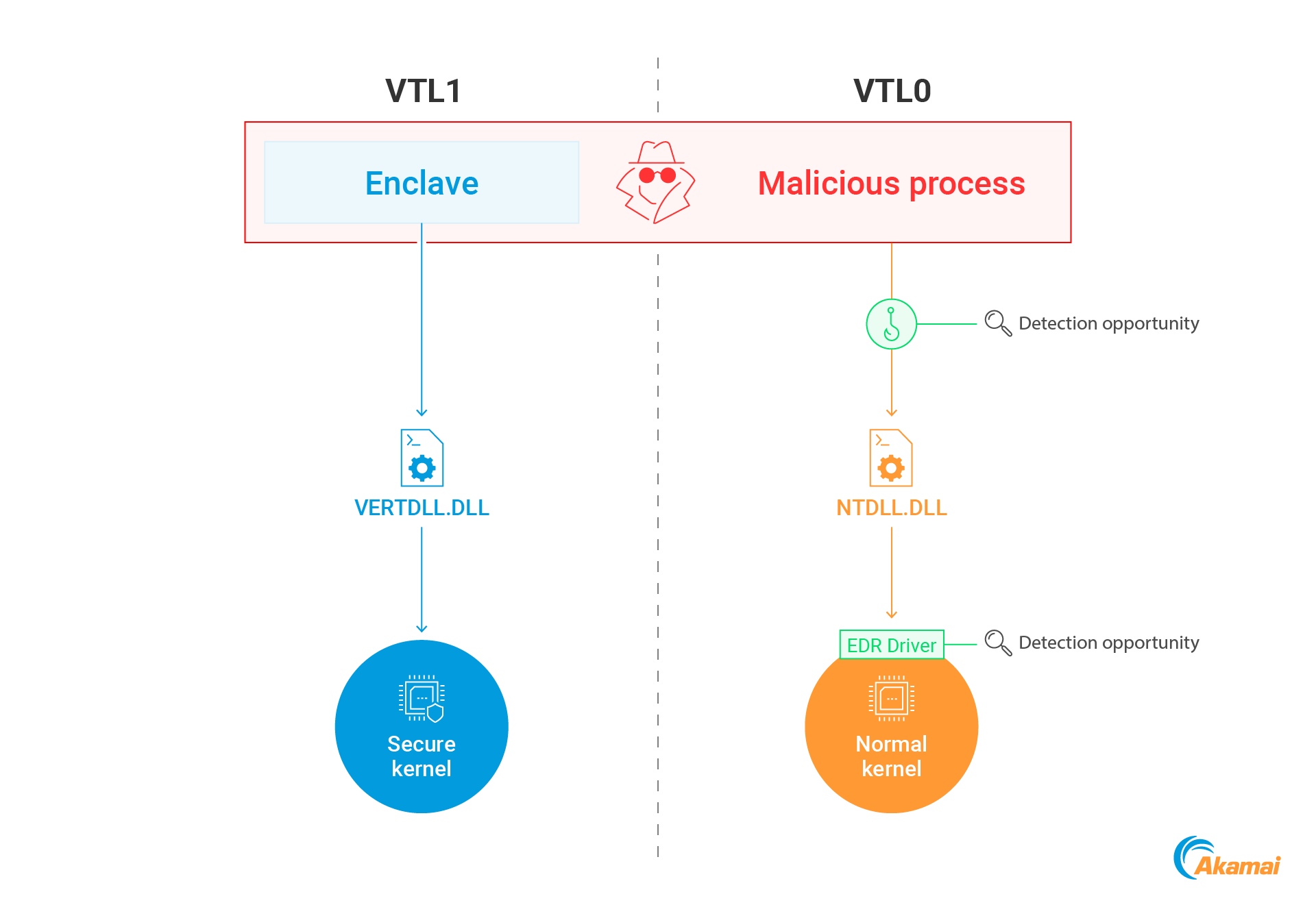

APIs available inside enclaves are imported from dedicated libraries loaded into VTL1. For example, while normal processes rely on the ntdll.dll library to request services from the OS, enclave modules use vertdll.dll — a "substitute" ntdll that is used to communicate with the secure kernel through syscalls.

Enclave malware

The concept of enclave malware can certainly be appealing to attackers, as it provides two significant advantages:

- Running in an isolated memory region: The address space of enclaves is inaccessible to anything running in VTL0, including EDRs and analysis tools, making detection much more complex.

- Untraceable API calls: API calls made from within an enclave can be invisible to EDRs. EDRs monitor APIs in user mode by placing hooks inside system libraries, and employ drivers to monitor activity in the kernel. API calls triggered from an enclave cannot be detected by these techniques, as enclave calls are performed from VTL1 and do not go through any of these “hooked” VTL0 components.

Figure 4 depicts this advantage: A normal process invoking a Windows API can be monitored through a hook inside NTDLL or the kernel itself. However, an enclave module goes through the VTL1-resident vertdll and invokes calls to the secure kernel — both inaccessible to EDRs.

Recognizing this potential, we set out to investigate the concept of enclave malware. To leverage enclaves for this purpose, two questions must be addressed:

- How can attackers execute malicious code within an enclave?

- What techniques can attackers employ once running inside an enclave?

How can attackers execute malicious code within an enclave?

As we previously stated, enclave modules have to be signed with a Microsoft-issued certificate to load, meaning that only entities approved by them should be able to execute their own code within an enclave. Despite that, attackers still have some options.

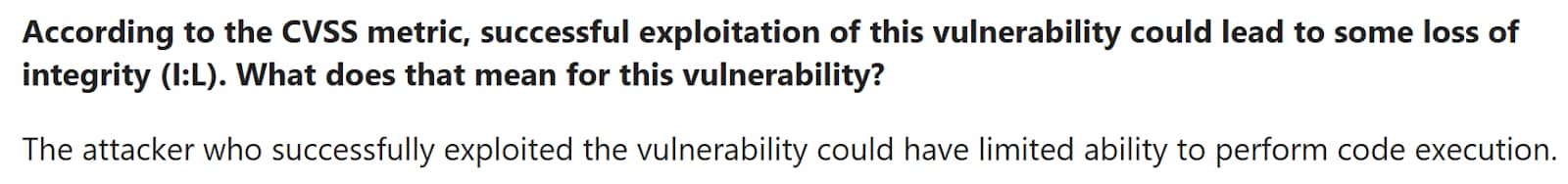

First, an attacker can rely on an OS vulnerability. An example of this came with CVE-2024-49706, discovered by Alex Ionescu (working for Winsider Seminars & Solutions), which could enable an attacker to load an unsigned module into an enclave. The vulnerability was patched by Microsoft, but motivated attackers may identify similar bugs in the future.

A second straightforward approach would be to obtain a legitimate signature — this could be possible since Microsoft exposes enclave signing to third parties through the Trusted Signing platform. While certainly not trivial, an advanced attacker may be able to obtain access to a Trusted Signing entity and sign their own enclaves.

In addition to these two options, we explored two additional techniques that might enable attackers to run code inside a VBS enclave — abusing debuggable enclaves and exploiting vulnerable enclaves.

Abusing debuggable enclave modules

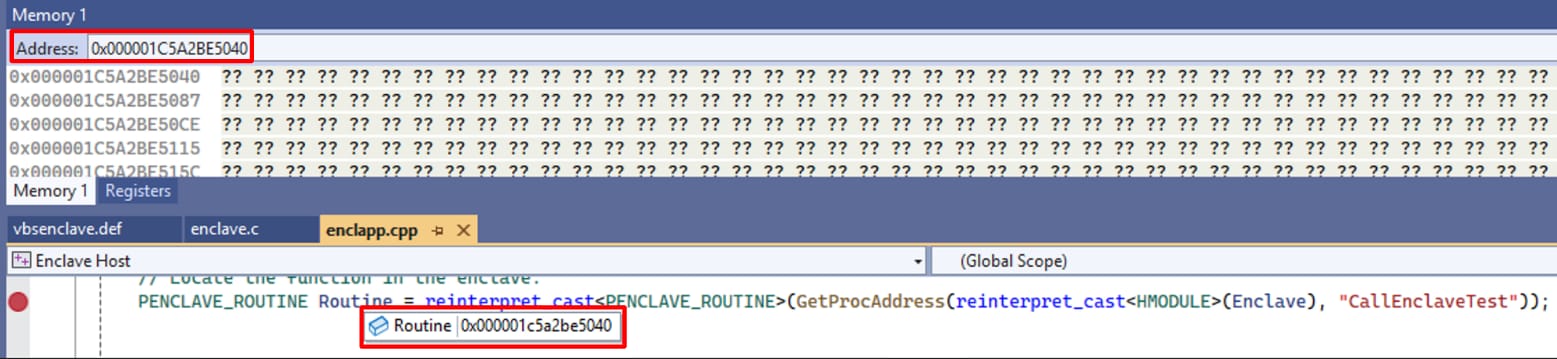

When creating a VBS enclave module, a developer can configure it to be debuggable. Compiling a module with this setting enables, well ... debugging it. As enclave modules run in VTL1, debugging them is normally not possible as the debugger cannot access the enclave memory to retrieve data or place breakpoints. Figure 5 shows an example of a debugger failing to read from a memory address inside an enclave.

Interestingly, when a debuggable enclave module is executed, it is still loaded into VTL1. To enable debugging, the secure kernel implements some exceptions that are applied to debuggable enclave modules. For example, when trying to read from the memory of such a module, the normal kernel issues a call to the SkmmDebugReadWriteMemory secure kernel call, which verifies that the target module is indeed debuggable, before performing the requested operation.

An example of this can be seen in Figure 6 — after loading a debuggable enclave module, the debugger can successfully read from the enclave memory.

In a similar manner, the memory permissions within a debuggable enclave module can also be modified by the VTL0 process (an exception implemented in the SkmmDebugProtectVirtualMemory secure kernel call).

Microsoft strongly urges developers to not ship debuggable enclave modules, as doing so undermines the core purpose of enclaves — which is isolating a section of memory from VTL0 (Figure 7). Using a debuggable module means that the data handled by it can be easily exposed.

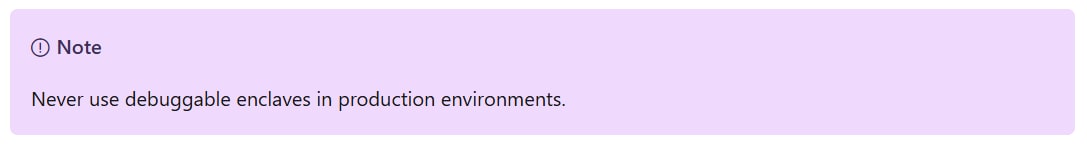

The risks of a production application running a debuggable enclave module are clear, but attackers can also abuse such modules for another purpose: executing unsigned code in VTL1. If an attacker obtains any debuggable signed enclave module, they can achieve VTL1 code execution by performing the following four steps:

- Obtain the address of a routine inside the enclave module using GetProcAddress

- Change the routine memory protection to RWX

- Overwrite the routine code with arbitrary shellcode

- Trigger the routine using CallEnclave

Figure 8 depicts code implementing this process.

The obvious problem from an attacker's perspective is that this is a double-edged sword — just as the attacker can access the enclave memory, an EDR can do so as well. Despite that, it does come with the upside of evading API monitoring — API calls performed by the enclave module will still go through VTL1 DLLs and the secure kernel, limiting the visibility of EDRs.

Overall, this method might be useful for creating a stealthy “semi-VTL1” implant, which capitalizes on some of the advantages obtained by running inside an enclave.

We have attempted to use VirusTotal and other sources to identify a debuggable signed enclave module, but as of the time of writing this, we have been unsuccessful. However, we believe it is safe to assume that given enough time, and wider adoption of the enclave technology, some are bound to leak eventually.

Bring your own vulnerable enclave

As we've discussed, Windows uses signatures to prevent the loading of untrusted enclaves into VTL1. This approach is not unique to enclaves — the concept emerged through driver signing enforcement (DSE), which prevents untrusted drivers from running in the Windows kernel.

To overcome this enforcement, attackers began to use the bring your own vulnerable driver (BYOVD) technique — that is, an attacker cannot load their own driver, so they load a legitimate signed driver with a known vulnerability instead. They can then exploit this vulnerability to run unsigned code in the kernel.

We wanted to explore this approach in the context of enclaves: Can we abuse a vulnerable signed enclave module to execute code in IUM?

The first step was to find such an enclave, which quickly led us to CVE-2023-36880 — a vulnerability in a VBS enclave module that is used by Microsoft Edge. The vulnerability enables an attacker to read and write arbitrary data inside the enclave. While the vulnerability was labeled by Microsoft as an information disclosure vulnerability, the notes also specify that it could lead to limited code execution (Figure 9).

The vulnerability was discovered by Alex Gough from the Chrome Security Team, who also shared a proof of concept for exploiting it. After we found a vulnerable version of this enclave in VirusTotal, we began attempting to exploit it to achieve code execution.

Our idea was to abuse the read/write primitive to overwrite the enclave stack with a ROP chain, which would eventually enable us to execute shellcode within the enclave. While exploring this option we encountered an interesting fact — enclaves are protected from unsigned code execution using arbitrary code guard (ACG).

ACG is a security mitigation designed to block the execution of dynamically generated code — code created at runtime rather than being part of the original process executable or its DLLs. ACG is implemented by enforcing two rules:

- New executable pages cannot be generated after the initial loading of the process

- An already executable page cannot become writable
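The two rules can be expressed as a small policy check. This is a simplified model of the rules as stated above, not the secure kernel's actual logic; the `PAGE_*` values are the standard Windows memory protection constants, while the function names are ours.

```python
# Standard Windows memory protection constants.
PAGE_NOACCESS = 0x01
PAGE_READONLY = 0x02
PAGE_READWRITE = 0x04
PAGE_WRITECOPY = 0x08
PAGE_EXECUTE = 0x10
PAGE_EXECUTE_READ = 0x20
PAGE_EXECUTE_READWRITE = 0x40
PAGE_EXECUTE_WRITECOPY = 0x80

EXECUTABLE = (PAGE_EXECUTE | PAGE_EXECUTE_READ |
              PAGE_EXECUTE_READWRITE | PAGE_EXECUTE_WRITECOPY)
WRITABLE = (PAGE_READWRITE | PAGE_WRITECOPY |
            PAGE_EXECUTE_READWRITE | PAGE_EXECUTE_WRITECOPY)


def acg_allows_allocation(new_protect: int) -> bool:
    """Rule 1: no new executable pages after the initial load."""
    return not (new_protect & EXECUTABLE)


def acg_allows_protection_change(old_protect: int, new_protect: int) -> bool:
    """Rules 1 and 2 applied to a protection change on an existing page."""
    becomes_executable = (bool(new_protect & EXECUTABLE)
                          and not (old_protect & EXECUTABLE))
    executable_becomes_writable = (bool(old_protect & EXECUTABLE)
                                   and bool(new_protect & WRITABLE))
    return not (becomes_executable or executable_becomes_writable)
```

Under this model, a request for an RWX allocation is rejected by the first rule alone, and turning a read-execute page into a read-write-execute page is rejected by the second.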

ACG is enforced by default on the normal kernel, but in user mode it only applies to processes that are configured to use it. Our research showed that for IUM processes, which include enclaves, ACG seems to apply automatically, as it does on the normal kernel.

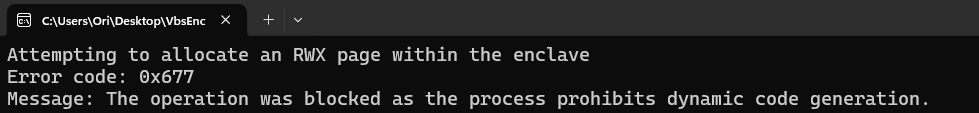

We can observe this by trying to allocate a new RWX page within an enclave using VirtualAlloc: the operation fails with Win32 error code 0x677 (ERROR_DYNAMIC_CODE_BLOCKED), the Win32 counterpart of STATUS_DYNAMIC_CODE_BLOCKED (Figure 10). Attempting to use VirtualProtect to modify the permissions of an executable page, or to make a page executable, leads to the same result.

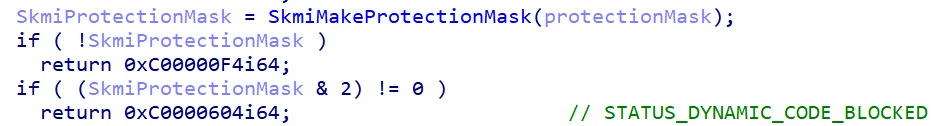

To understand this behavior, we can examine SecureKernel!NtAllocateVirtualMemoryEx, the secure kernel function that handles dynamic memory allocation in IUM. The function evaluates the requested protection mask, and if an executable page is requested it returns the ACG error STATUS_DYNAMIC_CODE_BLOCKED. Similar checks are implemented in SkmmProtectVirtualMemory to prevent changes to existing IUM pages (Figure 11).

We have not found a method to bypass ACG within an enclave and load unsigned code into it. In theory, a full-ROP exploit is possible — potentially enabling attackers to invoke arbitrary APIs in VTL1, for example — but we have not pursued this direction. Despite that, we still managed to identify another interesting application for vulnerable enclaves, which we will discuss later in this post.

What techniques can attackers employ once running inside an enclave?

Understanding that enclaves can have a lot of potential for malicious activity, and that launching them might be possible for a motivated attacker, the next question that we addressed was which techniques could be available for this type of malware.

The most straightforward use would be to use enclaves as they were meant to be used. Just as an enclave can protect sensitive data from attackers, it can also be used by attackers to hide their own “secrets” from VTL0 entities.

This could be useful in many scenarios: storing payloads out of the reach of EDRs, sealing encryption keys away from analysts, or keeping sensitive malware configuration out of memory dumps.

As for more advanced options, many traditional malware techniques are impossible to implement within an enclave. Since enclaves are restricted to a limited subset of system APIs, they are prevented from interacting with key OS components such as files, the registry, networking, other processes, and more.

Despite this, there are still a number of techniques that are possible to perform from within an enclave that allow us to capitalize on the advantages gained from running in IUM.

Accessing VTL0 user mode memory

Despite their limited access to the system, enclaves still have access to one critical resource: the process memory. Enclaves can perform read/write operations within the entire process address space, VTL0 included.

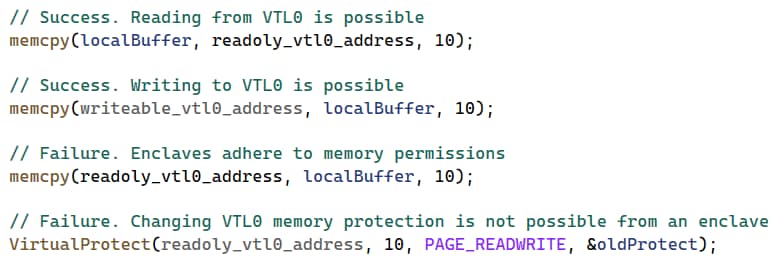

Some restrictions apply to this access — code running within enclaves adheres to memory permissions and cannot change them. This means that an enclave will not be able to write to nonwritable memory, or turn nonexecutable memory executable. Figure 12 depicts code running within an enclave and demonstrates these different possibilities and constraints.

By accessing the VTL0 user mode memory, we can implement various useful techniques. By loading a malicious enclave into a target process we can stealthily monitor and steal sensitive information, or patch values within the process to change its behavior.

Implementing these techniques using an enclave comes with a significant advantage — as we described earlier, triggering APIs from an enclave allows us to evade the monitoring of EDRs. As the memory access in these techniques is performed by an enclave, they can remain invisible.

Executing VTL0 user mode code

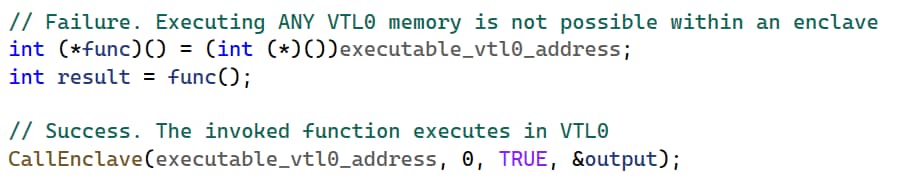

Although an enclave can read/write from VTL0 user mode memory given the appropriate permissions, code stored in VTL0 can never be executed within an enclave — even if it has execute permissions.

Despite not being able to run VTL0 code within an enclave, an enclave has the option to trigger it “remotely.” By using the CallEnclave API with a VTL0 address, an enclave can trigger the execution of VTL0 code in a normal user mode thread (Figure 13).

By getting the user mode process to “act on their behalf,” enclaves can indirectly access the system in ways that are normally not possible for them. For example, an enclave can trigger a VTL0 routine that reads from a file, creates a socket, and so forth.

It is important to note that running user mode code this way doesn’t have an advantage in terms of evasion — as the code runs like any other user mode code, it can be visible to EDRs.

Anti-debugging

Another interesting application for enclave malware is anti-debugging. The fact that code running within the enclave remains inaccessible to VTL0 applications, including debuggers, provides the malware with a significant advantage over them.

The reduction in the APIs that are exposed to enclaves means that not all traditional anti-debugging techniques will be available from an enclave. For example, the NtQueryInformationProcess and IsDebuggerPresent APIs, as well as all date and time APIs, are not available to enclaves. Despite this, we still have some options.

First, we can rely on the enclave’s access to the VTL0 address space of the process, allowing it to read the process PEB manually and check the value of the “BeingDebugged” flag. If a debugger is detected, the process can be terminated by the enclave.
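The check itself is a single byte read. As a minimal sketch, assuming the first bytes of the PEB have already been copied into a buffer (in the PEB layout, BeingDebugged is the third byte, at offset 2), the logic looks like this. The function name and the buffer-based interface are ours; real enclave code would dereference the PEB pointer directly.

```python
# BeingDebugged follows two one-byte fields at the start of the PEB.
BEING_DEBUGGED_OFFSET = 2


def being_debugged(peb_bytes: bytes) -> bool:
    """Return True if the BeingDebugged byte in a PEB snapshot is nonzero."""
    if len(peb_bytes) <= BEING_DEBUGGED_OFFSET:
        raise ValueError("buffer too short to contain BeingDebugged")
    return peb_bytes[BEING_DEBUGGED_OFFSET] != 0
```

The same helper is handy on the defensive side, for example when checking a memory dump to see what value such a check would have observed.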

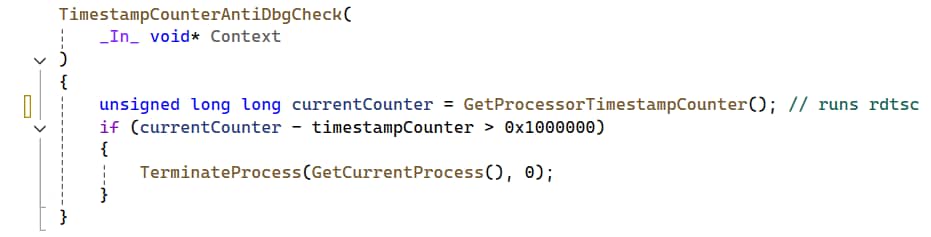

Another approach would be to implement a time-based anti-debugging technique (Figure 14). Although date and time APIs are not available to enclaves, they can still use the rdtsc assembly instruction, which reads the processor's time-stamp counter (a cycle count that starts at processor reset). Using it, we can measure the time elapsed between different enclave calls, and terminate the process if a significant delay is detected.
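The timing logic can be sketched independently of the counter source. In the example below, `time.perf_counter_ns` is only a stand-in for rdtsc (Python cannot issue the instruction directly), and `make_timing_check` and its threshold parameter are hypothetical names chosen for illustration.

```python
import time


def make_timing_check(threshold_ticks, read_counter=time.perf_counter_ns):
    """Return a function that reports whether too many ticks passed
    since the previous call (or since creation, for the first call)."""
    last = read_counter()

    def check() -> bool:
        nonlocal last
        now = read_counter()
        delayed = (now - last) > threshold_ticks
        last = now  # the next call measures from this point
        return delayed

    return check
```

Injecting the counter as a parameter keeps the logic testable: a fake counter can simulate a single-stepping analyst by returning a large jump between two consecutive calls. Picking a realistic threshold is the hard part in practice, since legitimate delays (scheduling, interrupts) also inflate the measurement.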

By moving critical parts of our code to an enclave alongside an anti-debugging check, we can make a piece of malware that is almost completely bulletproof to dynamic analysis. The malware relies on the enclave to run properly, while the user mode process cannot patch the checks running inside it. Implemented correctly, this approach could only be defeated by debugging Hyper-V or the secure kernel.

BYOVE — Round 2

In a previous section, we attempted to exploit a vulnerable enclave module to achieve code execution in IUM. When we realized that this might not be possible, we thought we’d see if the concept of BYOVE could have other applications, and decided to further explore the vulnerable enclave module.

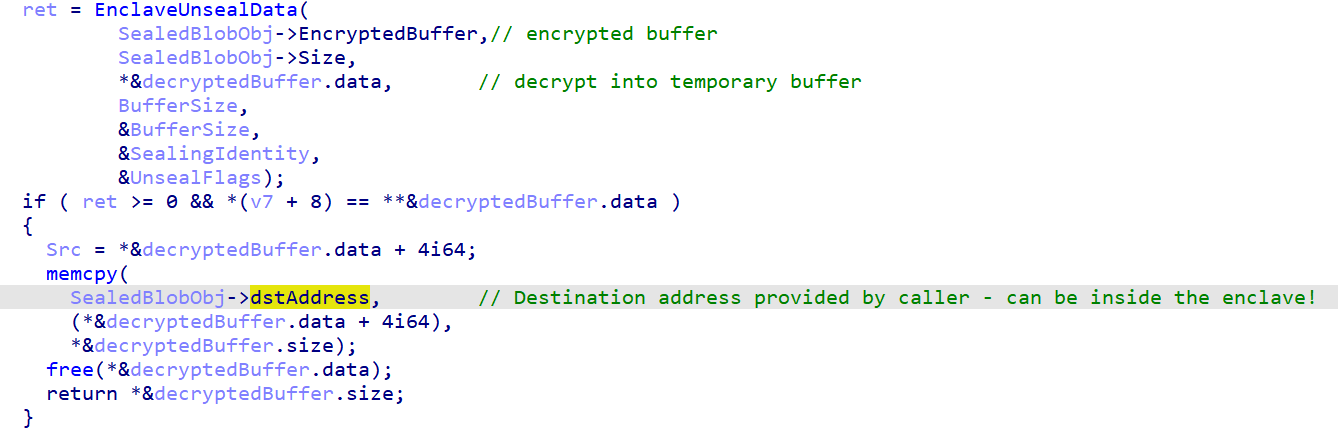

The vulnerability (CVE-2023-36880) stems from the SealSettings and UnsealSettings functions that are exported by the enclave module. The SealSettings function receives a pointer to a data buffer, encrypts it, and writes the results to a destination address provided by the caller. UnsealSettings works in a similar manner, decrypting a supplied buffer and writing it to a specified address.

The problem with both of these functions is that they do not validate either the destination address or the source buffer address, allowing them to point to any address within the process, including addresses inside the enclave itself. Figure 15 depicts the vulnerable code, showing UnsealSettings performing a memcpy to an arbitrary address supplied by the user.

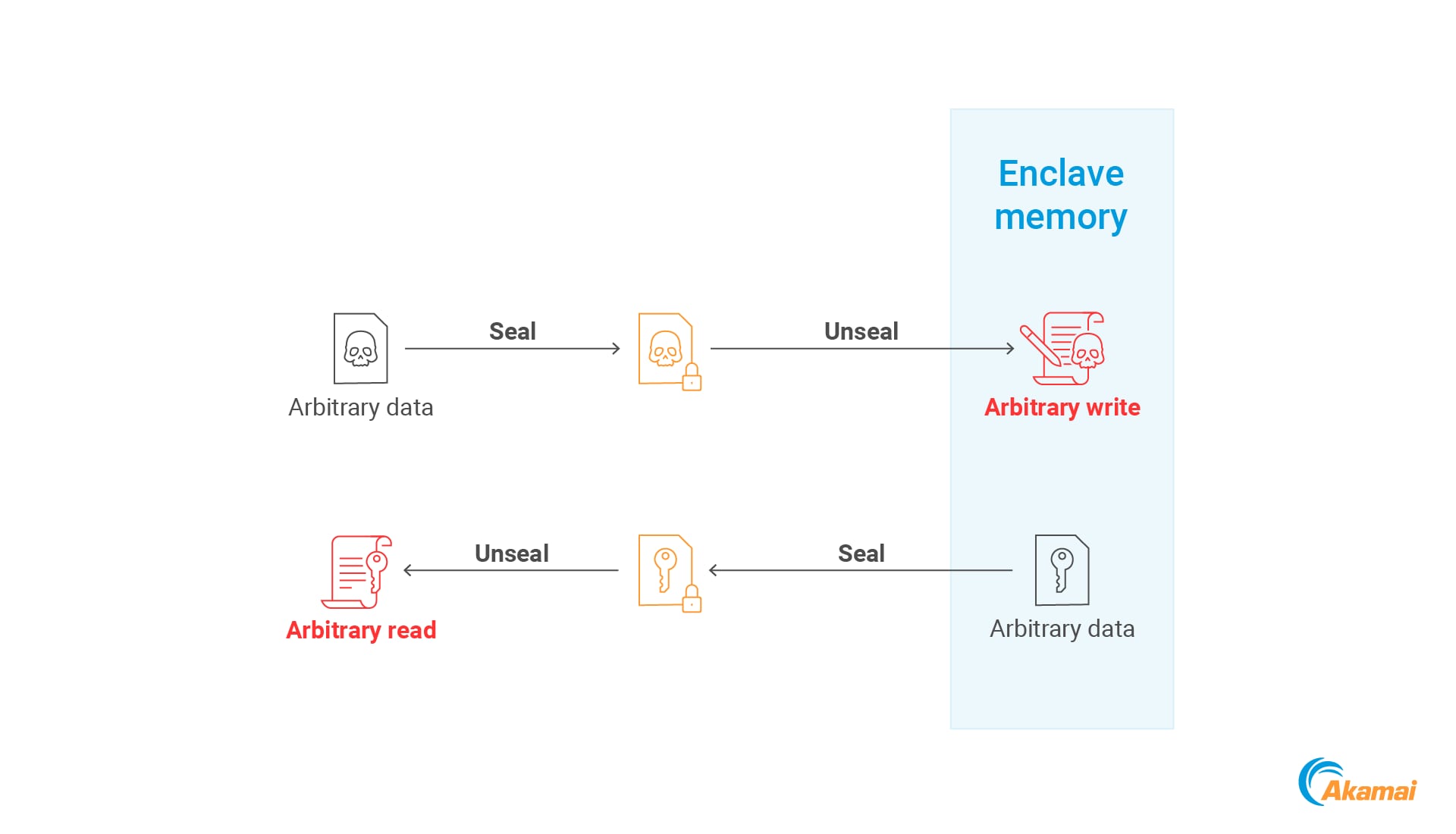

This vulnerability provides an attacker with two capabilities (Figure 16).

- Arbitrary write inside the enclave: An attacker can call SealSettings to encrypt arbitrary data, then call UnsealSettings with the encrypted data as the source and an address inside the enclave as the destination. This results in the original data being written to the enclave memory.

- Arbitrary read inside the enclave: An attacker can call SealSettings while supplying an address within the enclave as the source buffer pointer. This will cause the enclave to encrypt data from the enclave memory and write it to an attacker-controlled location. The attacker can then decrypt this data by calling UnsealSettings, enabling them to read arbitrary data from the enclave.
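The logic of both primitives is easier to follow in a toy model. The sketch below represents the process address space as a flat byte array with a hypothetical enclave range, and uses a single-byte XOR as a stand-in for the real sealing operation; none of the names, addresses, or the “encryption” reflect the actual module. It also includes one possible pointer validation, which is our assumption of what a fix could look like and not necessarily the actual patch.

```python
ENCLAVE_START, ENCLAVE_END = 0x100, 0x200  # hypothetical enclave range
KEY = 0x5A                                 # stand-in for the sealing key


class ToyProcess:
    """Flat address space; the enclave 'owns' ENCLAVE_START..ENCLAVE_END."""

    def __init__(self, size=0x300):
        self.mem = bytearray(size)

    def _xor(self, data):
        return bytes(b ^ KEY for b in data)

    def seal(self, src, length, dst):
        # Vulnerable: neither src nor dst is checked against the enclave range.
        self.mem[dst:dst + length] = self._xor(self.mem[src:src + length])

    def unseal(self, src, length, dst):
        # Same flaw on the decrypting side.
        self.mem[dst:dst + length] = self._xor(self.mem[src:src + length])


def in_enclave(addr, length):
    return addr < ENCLAVE_END and addr + length > ENCLAVE_START


def arbitrary_write(proc, data, enclave_dst, scratch=0x000, sealed=0x040):
    proc.mem[scratch:scratch + len(data)] = data   # host-owned buffer
    proc.seal(scratch, len(data), sealed)          # encrypt attacker data
    proc.unseal(sealed, len(data), enclave_dst)    # decrypt INTO the enclave


def arbitrary_read(proc, enclave_src, length, sealed=0x040, out=0x080):
    proc.seal(enclave_src, length, sealed)         # encrypt FROM the enclave
    proc.unseal(sealed, length, out)               # decrypt to host memory
    return bytes(proc.mem[out:out + length])


def checked_unseal(proc, src, length, dst):
    # One possible fix: reject pointers that overlap the enclave range.
    if in_enclave(src, length) or in_enclave(dst, length):
        raise PermissionError("pointer overlaps enclave memory")
    proc.unseal(src, length, dst)
```

The key observation is that the attacker never needs the sealing key: the enclave performs both the encryption and the decryption on their behalf, so the two calls cancel out and only the unchecked copy remains.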

Mirage — VTL1-based memory evasion

Although it didn’t enable us to execute code in VTL1, the arbitrary write primitive does provide two unique abilities:

- To store arbitrary data in VTL1, where it becomes inaccessible to VTL0 entities

- To write arbitrary data into the normal process address space (VTL0) from VTL1, preventing VTL0 entities from monitoring this operation

Moreover, as these capabilities are facilitated through the loading of a signed enclave module, they could be used by any attacker, without requiring them to sign anything themselves.

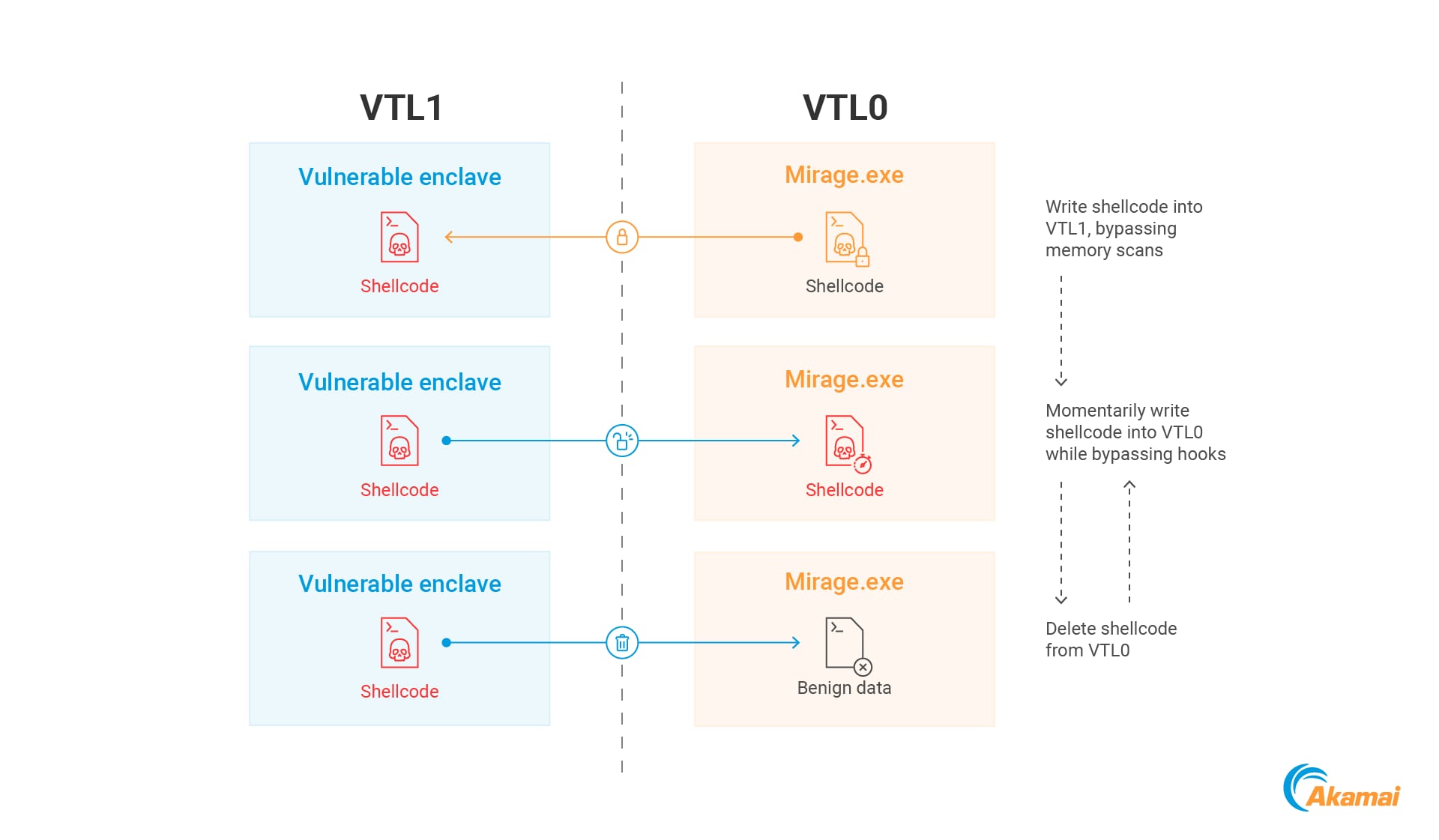

To demonstrate the potential of these capabilities, we came up with a memory scanning evasion technique that we dubbed “Mirage.” Mirage draws inspiration from Gargoyle, an evasion technique that creates a payload that continuously switches between a benign and a weaponized state (Figure 17).

While Gargoyle implements this by toggling between executable and nonexecutable memory, Mirage aims to achieve a similar result by transitioning between VTL1 and VTL0 memory — it stores shellcode in the VTL1 enclave memory, periodically transfers it back to VTL0 using the vulnerability, executes it, and then promptly erases it from VTL0 memory (Figure 18).

This approach has two main advantages. First, as the payload spends most of the time hidden in VTL1, it is resilient to memory scans and dumps. This is an advantage over the Gargoyle technique because, during its dormant stage, our payload is not merely “stealthy” but entirely inaccessible.

The second advantage is that the writing of the shellcode into VTL0 is performed by the enclave. As we have previously described, EDRs cannot monitor code executing in VTL1, meaning that typical EDR hooks will not be able to intercept the shellcode as it is being written to memory.

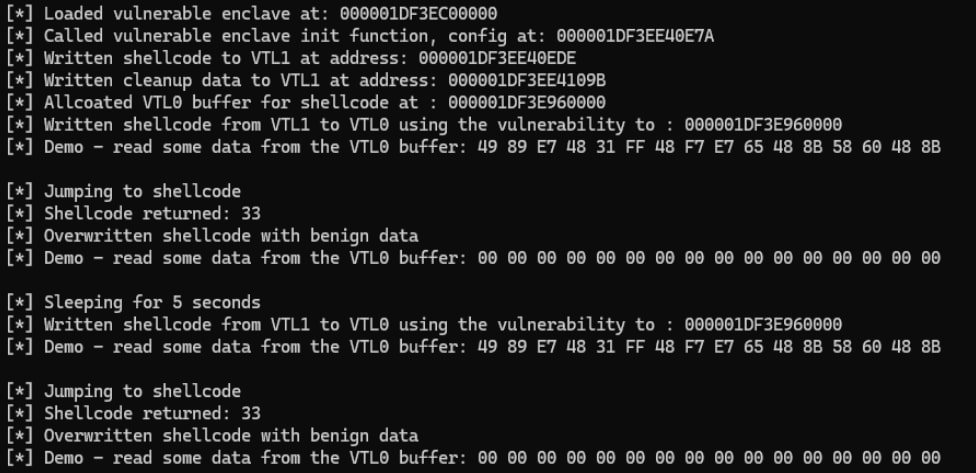

We developed a PoC for Mirage (Figure 19). This PoC is only meant to demonstrate the idea behind the technique, and is therefore not fully weaponized.

Detection

As of today, enclaves are used by a very limited number of applications. Even if they become more widely adopted, they will still only be loaded by specific processes, rather than by arbitrary ones. For example, calc.exe should probably never load a VBS enclave.

Because of this, anomalous enclave use can be a great detection opportunity. Defenders should capitalize on it by building a baseline of known, legitimate uses of VBS enclaves and by flagging any deviations from it. Enclave use can be identified in two ways: by monitoring enclave APIs and by detecting loaded enclave DLLs.

Enclave APIs

The following APIs are used to manage VBS enclaves by a hosting process, and will likely indicate that one is being loaded:

- CreateEnclave

- LoadEnclaveImageW

- InitializeEnclave

- CallEnclave

- DeleteEnclave

- TerminateEnclave
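As a minimal sketch of the baseline approach, assuming API call telemetry is already available as (process name, API name) pairs, a detection rule could look like the following. The event format, the function name, and the baseline contents are all hypothetical; real telemetry would come from whatever API monitoring the defender already has in place.

```python
ENCLAVE_APIS = {
    "CreateEnclave",
    "LoadEnclaveImageW",
    "InitializeEnclave",
    "CallEnclave",
    "DeleteEnclave",
    "TerminateEnclave",
}


def flag_enclave_api_use(events, baseline):
    """Return (process, api) pairs where a process outside the baseline
    calls an enclave management API.

    events:   iterable of (process_name, api_name) tuples
    baseline: process names known to legitimately host enclaves
    """
    allowed = {name.lower() for name in baseline}
    alerts = []
    for process, api in events:
        if api in ENCLAVE_APIS and process.lower() not in allowed:
            alerts.append((process, api))
    return alerts
```

For instance, with a baseline containing only a browser process, a CreateEnclave call from calc.exe would be flagged while the browser's own calls would not.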

Loaded enclave DLLs

Another option to detect anomalous enclave use is to look for the loading of the environment DLLs that enclaves typically use: vertdll.dll and ucrtbase_enclave.dll. As these DLLs should not be used by anything other than an enclave, their presence indicates that the process likely uses one.
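A module-based check can be sketched in the same spirit. The example below assumes a snapshot that maps each process name to the paths of its loaded modules; that input format and the function name are our own, and the results would still need to be compared against a baseline of legitimate enclave hosts.

```python
ENCLAVE_DLLS = {"vertdll.dll", "ucrtbase_enclave.dll"}


def processes_with_enclave_dlls(loaded_modules):
    """Map each process to the enclave environment DLLs it has loaded.

    loaded_modules: dict of process name -> iterable of module paths
    """
    hits = {}
    for process, modules in loaded_modules.items():
        # Compare on the lowercase file name, ignoring the directory.
        names = {m.replace("\\", "/").rsplit("/", 1)[-1].lower() for m in modules}
        found = sorted(names & ENCLAVE_DLLS)
        if found:
            hits[process] = found
    return hits
```

Matching on the lowercase file name avoids missing a hit because of path or casing differences between data sources.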

Conclusion

VBS enclaves provide an amazing tool for developers to protect sensitive sections of their applications, but — as we have just demonstrated — they can also be used by threat actors to “protect” their malware. Although this concept is mostly theoretical at this point, it is certainly possible that advanced threat actors will begin to use VBS enclaves for malicious purposes in the future.

Acknowledgments

We would like to acknowledge the work done by Matteo Malvica of Offsec and Cedric Van Bockhaven of Outflank, who recently conducted a research project that is very similar to this one. Please check out their first blog post in a two-part series, and stay tuned for part 2, which will be published after Insomni’Hack 2025.