How-To: Replaying Cellular Network Characteristics on Cloud Infrastructure

As researchers of web performance, we’ve always wanted to measure the quality of experience for loading web pages from the perspective of our users. Today, Real User Monitoring (RUM) systems allow web developers to utilize the browser-exposed Navigation and Resource Timing APIs through JavaScript injected into the HTML. Although this approach has been successful, and many content providers and content delivery networks make extensive use of these APIs, they lack detailed visibility into the lower layers of TCP performance and its implications for web page load time.

We often use the time to establish TCP connections as a surrogate for network performance, and the relatively recent Network Information API provides several hints, such as the type of network connectivity (4G/3G/2G/Wi-Fi) to which the browser is connected, along with the estimated round-trip time to a connected server. However, these hints still reveal nothing about the network congestion experienced by the lower-layer TCP stack when loading web pages. As a result, we struggle to understand the specific implications of network congestion on the web page load process and, in turn, have difficulty building solutions that address those implications in a systematic manner.

The members of Akamai’s Foundry — an applied R&D team — performed a large-scale investigation to understand the network characteristics of a major US-based cellular carrier and their implications on the TCP stack deployed on Akamai’s infrastructure for serving web requests. The goal of this blog post is to introduce a tool that web researchers and developers can use to replay the characteristics of cellular networks and perform detailed analysis of web performance under various (realistic) cellular network conditions.

Several studies (Goel, PAM'17; Marx, WIST'17; and Goel, MobiCom'16) have already made extensive use of the tool to investigate the performance of HTTP/2 in lossy cellular network conditions.

Before I begin to describe how to set up the tool on a cloud infrastructure, I would encourage anyone who would like to learn the inner workings of the tool to check out our technical paper on this subject.

Step-by-step instructions to set up the tool

Introduction

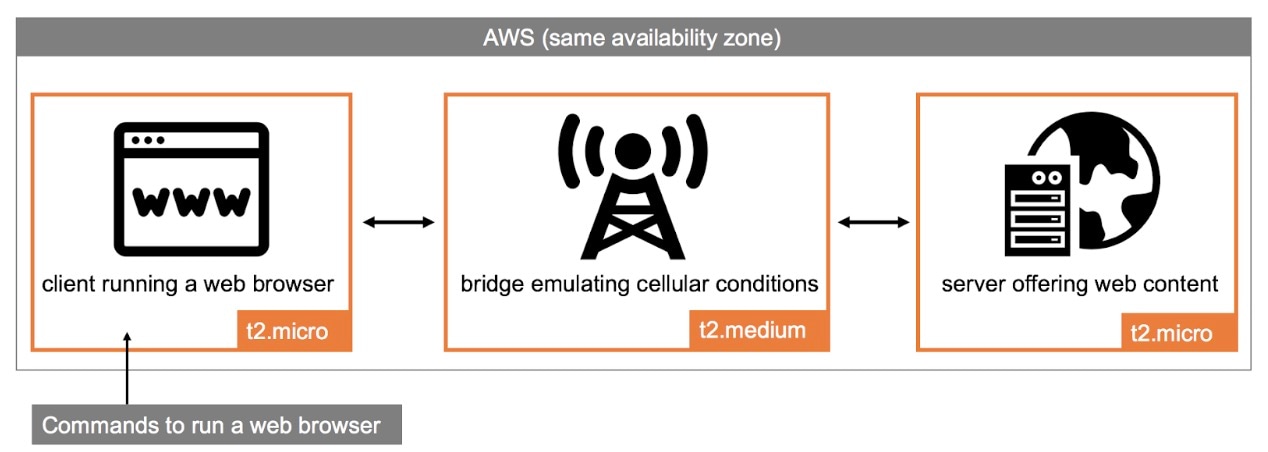

As with any web performance measurement exercise, we need a client machine with a web browser installed, a server machine with a web server installed, and a bridge that will emulate the cellular latency, packet loss, and bandwidth between the client and the server. This setup is depicted in the diagram below.

Now, this seems like a typical setup that one could use to simulate various network conditions, especially using Linux Traffic Control (TC) to modify latency, loss, and bandwidth between the client and the server. In fact, research studies have been making extensive use of such setups to throttle connections by adding static latency or packet loss. While these static network conditions have their uses, they often do not reflect reality.
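For reference, a static setup of the kind described above might look like the sketch below. It is only an illustration: the interface name and the 100 ms / 1% values are assumptions, and the helper prints the command by default rather than running it.

```shell
# Dry-run by default: print the command instead of executing it.
# Set DRY_RUN=0 on the bridge to actually apply the rule (needs sudo).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# apply_static_netem <interface> <delay_ms> <loss_pct>
# Installs one fixed netem rule: constant delay and constant random loss.
apply_static_netem() {
  run sudo tc qdisc replace dev "$1" root netem delay "${2}ms" loss "${3}%"
}

# Illustrative values only: 100 ms of added delay and 1% loss on eth1.
apply_static_netem eth1 100 1
```

The rule stays in place until it is replaced or deleted, which is exactly why it cannot capture conditions that change from one second to the next.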

Our tool is designed to push updates to TC in a way that emulates the dynamic nature of cellular networks in terms of latency, loss rates, the frequency at which the loss happens, and the bandwidth on the network links between the client and the server. Such a setup offers a more realistic replay of cellular network conditions compared to traditional methods of randomly injecting loss during communication because our tool relies on real-world traffic captured from Akamai’s content delivery servers.
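To make the idea of pushing updates to TC concrete, here is a minimal sketch that walks through a schedule and replaces the netem rule at each step. This is not the emulation script from our repository; the function name and the schedule values are made up for illustration.

```shell
# Dry-run by default: print the command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# replay_schedule <interface>
# Reads "<seconds> <delay_ms> <loss_pct> <rate_mbit>" lines from stdin and
# applies each one as the active netem rule for the given number of seconds.
replay_schedule() {
  while read -r secs delay loss rate; do
    [ -n "$secs" ] || continue
    # </dev/null keeps the command from consuming the schedule on stdin.
    run sudo tc qdisc replace dev "$1" root netem \
      delay "${delay}ms" loss "${loss}%" rate "${rate}mbit" </dev/null
    # Only wait between updates when the rules are really being applied.
    [ "${DRY_RUN:-1}" = "1" ] || sleep "$secs"
  done
}

# Hypothetical schedule: these numbers are illustrative, not measured data.
printf '%s\n' "5 60 0 10" "2 180 3 2" "5 90 0.5 6" | replay_schedule eth1
```

The real tool derives its parameters from captured traffic, so treat this only as a picture of the mechanism.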

Creating AWS EC2 Instances

Step 1) Assuming you have an AWS account, from the EC2 dashboard, create three EC2 instances, representing a client, a server, and a bridge. One important thing to keep in mind is that all three instances must be created under the same availability zone and under the same virtual private cloud (VPC), but under different /24 subnets. I used Ubuntu Server 16.04 LTS (HVM), SSD Volume Type - ami-6e1a0117 for setting up the three instances.

Step 2) Now, let’s create two t2.micro instances: one for the client and the other for the server. Note that you do not need public IP addresses for these two instances, because they will be accessed through the bridge instance.

Step 3) Create a t2.medium instance for the bridge that comes with three network interfaces, where the interface eth0 will be used to connect the bridge to the Internet, eth1 will be used to connect the bridge to the client, and eth2 will be used to connect the bridge to the server. Note that when you create this instance, it will by default have only the eth0 interface attached to it. We will add eth1 and eth2 after starting the instance. Also, remember to request a public IP for this instance.
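If you prefer the AWS CLI to the console, the subnet layout from Step 1 could be sketched as follows. The VPC ID, the availability zone, and the 10.0.x.0/24 ranges are placeholders I chose for illustration (they assume a VPC that covers 10.0.0.0/16), and the commands are only printed unless DRY_RUN=0 is set.

```shell
# Dry-run by default: print the command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# create_subnets <vpc_id> <availability_zone>
# Creates three /24 subnets (bridge, client, server) in one VPC and one zone.
create_subnets() {
  for cidr in 10.0.0.0/24 10.0.1.0/24 10.0.2.0/24; do
    run aws ec2 create-subnet --vpc-id "$1" --availability-zone "$2" \
      --cidr-block "$cidr"
  done
}

# Placeholder identifiers: substitute your own VPC ID and zone.
create_subnets vpc-EXAMPLE us-west-2a
```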

Preparing the bridge instance

Step 4) Before we can add eth1 and eth2 to the bridge instance, we will need to create these interfaces and attach them to the bridge instance from the “Network Interfaces” portal in the AWS console.

Step 5) When creating the eth1 and eth2 interfaces, select the client's subnet and the server's subnet in the "Subnet" field, respectively.

Step 6) One by one, attach the two interfaces to the bridge instance.

Step 7) Make sure you disable the "Source/Destination Check" under the “Actions” dropdown for each interface. This is needed in order for the client to be able to talk to the server in our setup.
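Steps 4 through 7 can also be done from the AWS CLI. The sketch below assumes placeholder subnet, interface, and instance IDs, and assumes that device index 1 shows up as eth1 and index 2 as eth2 on the bridge. Commands are printed rather than executed unless DRY_RUN=0 is set.

```shell
# Dry-run by default: print the command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# create_bridge_interface <subnet_id> <description>
create_bridge_interface() {
  run aws ec2 create-network-interface --subnet-id "$1" --description "$2"
}

# attach_bridge_interface <eni_id> <bridge_instance_id> <device_index>
# Attaches the interface, then disables the source/destination check on it.
attach_bridge_interface() {
  run aws ec2 attach-network-interface --network-interface-id "$1" \
    --instance-id "$2" --device-index "$3"
  run aws ec2 modify-network-interface-attribute \
    --network-interface-id "$1" --no-source-dest-check
}

# Placeholder IDs: the eni-... values come from the create step's output.
create_bridge_interface subnet-CLIENT "bridge eth1 (client side)"
create_bridge_interface subnet-SERVER "bridge eth2 (server side)"
attach_bridge_interface eni-CLIENT i-BRIDGE 1
attach_bridge_interface eni-SERVER i-BRIDGE 2
```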

Step 8) Connect to the bridge using your SSH key and run the following command:

$> ip addr

You should see four network interfaces: loopback, eth0, eth1, and eth2. However, you will not yet see an IP address attached to the eth1 and eth2 interfaces.

To attach IP addresses to the interfaces, we need to request them from the DHCP server. Let’s do that by running:

$> sudo dhclient eth1

$> sudo dhclient eth2

Step 9) Run the command below; you should now see an IP address attached to each of the two interfaces.

$> ip addr
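Rather than checking the output by eye, a small helper can report which interfaces still lack an IPv4 address. This is a sketch with a hypothetical function name; it parses the one-line-per-address format produced by `ip -o -4 addr show`.

```shell
# missing_ipv4 <interface>...
# Reads `ip -o -4 addr show` output on stdin and prints every named
# interface that has no IPv4 address in that output.
missing_ipv4() {
  local addrs ifc
  addrs="$(cat)"
  for ifc in "$@"; do
    printf '%s\n' "$addrs" |
      awk -v i="$ifc" '$2 == i && $3 == "inet" { found = 1 }
                       END { exit !found }' || echo "$ifc"
  done
}

# On the bridge:
#   ip -o -4 addr show | missing_ipv4 eth1 eth2
# No output means both interfaces have an address.
```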

Step 10) Enable packet forwarding between eth1 and eth2 via the command:

$> sudo sysctl -w net.ipv4.ip_forward=1
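To confirm that the setting took effect, you can read the kernel's current value back. The helper below is a small sketch with a hypothetical name; the optional path argument exists only so the check can be exercised against a test file.

```shell
# forwarding_enabled [path]
# Succeeds when the kernel's IPv4 forwarding flag is set to 1.
forwarding_enabled() {
  [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}" 2>/dev/null)" = "1" ]
}

if forwarding_enabled; then
  echo "IPv4 forwarding is on"
else
  echo "IPv4 forwarding is off"
fi
```

Keep in mind that a value set with sysctl -w does not survive a reboot; adding net.ipv4.ip_forward=1 to /etc/sysctl.conf makes it persistent.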

Preparing the client and server instances

Step 11) To connect to the client and server via the bridge, you will have to copy the .pem key file to the bridge. Once you connect to the client and server, do the following on each of the two instances:

We will need to change the default gateway used on the client and server and point it to the bridge. On the client instance, set the default gateway to be the eth1 IP address of the bridge. On the server instance, set the default gateway to be the eth2 IP address of the bridge.

To do so, first add the bridge as a default gateway, where <IP> is the bridge's address:

$> sudo route add default gw <IP>

Then remove the previous default gateway, where <IP> is the old gateway's address:

$> sudo route del default gw <IP>
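On systems where the route command is unavailable, the same change can be made with the ip tool from iproute2, which swaps the default route in a single step. In this sketch the function name and the bridge address are placeholders, and the command is only printed unless DRY_RUN=0 is set.

```shell
# Dry-run by default: print the command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# point_gateway_at_bridge <bridge_ip>
# Replaces the current default route with one that goes via the bridge.
point_gateway_at_bridge() {
  run sudo ip route replace default via "$1"
}

# Placeholder address: on the client use the bridge's eth1 IP,
# on the server use the bridge's eth2 IP.
point_gateway_at_bridge 10.0.1.10
```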

Step 12) Now, try pinging the client from the server and vice-versa. You should see ICMP responses unless the security groups attached to the three instances prevent the communication.

Step 13) Run tshark on the bridge to confirm that the packets between the client and server instances are going through the bridge. That is, from client to server, packets should flow as

eth0 of client → eth1 of bridge → eth2 of bridge → eth0 of server
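One way to run this check is to capture on both bridge interfaces at once while a ping is running between the client and the server. The sketch below assumes the interface names used in this post; the capture filter is given before the first -i so that it applies to both interfaces. The command is only printed unless DRY_RUN=0 is set.

```shell
# Dry-run by default: print the command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# watch_bridge <capture_filter>
# Captures matching packets on the client-facing and server-facing interfaces.
watch_bridge() {
  run sudo tshark -f "$1" -i eth1 -i eth2
}

# Watch the ICMP traffic generated by the ping test in Step 12.
watch_bridge icmp
```

Each echo request should appear twice, once on each interface, if the bridge is forwarding as intended.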

Replaying cellular network characteristics

Now it’s time to configure the bridge to emulate cellular network behavior between the client and the server instances in terms of loss rates, the frequency at which the loss happens, latency, and bandwidth.

Step 14) Download the emulation script on the bridge from our GitHub repository available publicly at https://github.com/akamai/cell-emulation-util.

Step 15) There are two types of replay one could perform using the tool. The first is based on the network quality of experience, which could potentially represent 2G/3G/3.5G/4G network configurations. The second is based on how often the TCP stack interprets network congestion and reduces the congestion window.

The general command to replay cellular network characteristics is as follows:

$> ./cellular_emulation.sh <type_of_simulation> <condition>

Here, <type_of_simulation> is either loss_based or experience_based, and the valid values for <condition> depend on it. For experience_based emulation, use noloss, good, fair, passable, poor, or very poor. For loss_based emulation, use good, median, or poor.
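When scripting experiments, it can help to validate the two arguments before calling the script. The wrapper below is a sketch with hypothetical function names; it encodes only the values listed in this post, spelled as they appear here, so check the repository for the exact tokens the script accepts. The script invocation is only printed unless DRY_RUN=0 is set.

```shell
# Dry-run by default: print the command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# valid_condition <type_of_simulation> <condition>
# Succeeds only for the combinations named in this post.
valid_condition() {
  case "$1" in
    experience_based)
      case "$2" in
        noloss|good|fair|passable|poor|"very poor") return 0 ;;
      esac ;;
    loss_based)
      case "$2" in
        good|median|poor) return 0 ;;
      esac ;;
  esac
  return 1
}

# emulate <type_of_simulation> <condition>
emulate() {
  if valid_condition "$1" "$2"; then
    run ./cellular_emulation.sh "$1" "$2"
  else
    echo "unsupported combination: $1 / $2" >&2
    return 1
  fi
}

emulate experience_based fair
```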

Step 16) For experimentation purposes, one can configure Telemetry or headless Chrome on the client instance to automate page loads from an Apache server installed on the server instance.

That’s all, folks! Now you have your own testbed to emulate various cellular network conditions in the cloud. You can drive a web browser on the client in the cloud, and see how it responds to loading content from a server over a hypothetical cellular Internet connection. I hope you will be able to use the tool to improve your experimentation capabilities.

Huge thanks to Moritz Steiner, Stephen Ludin, and Martin Flack (all from Akamai) for their help in refining the capabilities of the tool and providing feedback on an early version of this article. -Utkarsh Goel